Back to Basics

Intro to Deep Learning : MLPs

TensorFlow & Deep Learning SG

Part 1 of 3

Deep Learning

MeetUp Group

- Next "Real" Meet-Up ~ one month

  - Likely to be research-orientated

- Typical Contents :

  - Talk for people starting out

  - Something from the bleeding-edge

  - Lightning Talks

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

- Running since 2017 : Over 4500 members!

"Back to Basics"

- This is a three-part introductory series :

  - Appropriate for Beginners!

  - Code-along is helpful for everyone!

  - This week : MLPs (fundamentals)

  - Next week : CNNs (for vision)

  - Third week : Transformers (for text)

Plan of Action

- Each part has 2 segments :

  - Talk = Mostly Martin

  - Code-along = Mostly Sam

- Ask questions at any time!

Today's Talk

- (Housekeeping)

- Short intro to "AI"

- Programming vs Machine Learning vs Deep Learning

- Simple example with handwaving

- Concrete example(s) with code

  - Fire up your browser for code-along

About Me

- Machine Intelligence / Startups / Finance

  - Moved from NYC to Singapore in Sep-2013

- 2014 = 'fun' :

  - Machine Learning, Deep Learning, NLP

  - Robots, drones

- Since 2015 = 'serious' : NLP + deep learning

  - GDE ML; TF&DL co-organiser

  - Red Dragon AI...

About Red Dragon AI

- Google Partner : Deep Learning Consulting & Prototyping

- SGInnovate/Govt : Education / Training

- Research : NeurIPS / EMNLP / NAACL

- Products :

  - Conversational Computing

  - Natural Voice Generation - multiple languages

  - Knowledgebase interaction & reasoning

Deep Learning Applications

Programming "2.0"

- Programming

- Machine Learning

- Deep Learning

Programming

- Give the computer explicit instructions

- Languages like:

  - C++

  - JavaScript

  - Python

  - ...

- Results ~ Human Ingenuity (1970+)

Traditional Machine Learning

- Make the computer learn from data

  - Origins in "Statistics"

- Frameworks like:

  - "R"

  - Python + scikit-learn

  - ... Big Data ...

- Results ~ Data size + Features (1990+)

Deep Learning

- Make the computer learn from data

  - ... where more 'intuition' is required

- Frameworks like:

  - TensorFlow / Keras

  - PyTorch

  - ...

- Results ~ Data size + Research (2012+)

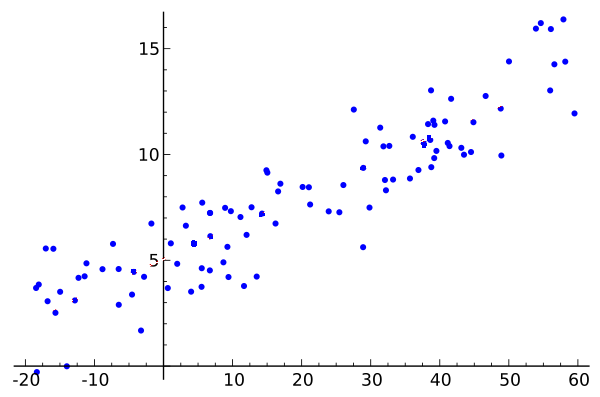

Simple Dataset

(blue dots = data)

One output ← One input

$y = f(x)$

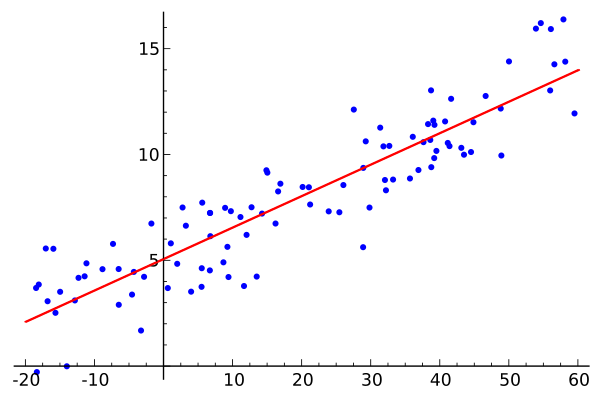

Traditional Machine Learning

(red line = best fit)

Can solve for 'slope' and 'intercept' exactly

$y = mx + b$
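For a straight line, solving 'exactly' means ordinary least squares, which has a closed-form answer. Here is a minimal NumPy sketch. It uses a few made-up points, not the dataset plotted above:

```python
import numpy as np

# Illustrative data: roughly y = 2x + 1 plus a little noise (made-up values)
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 7.0, 8.8])

# Ordinary least squares in closed form:
#   m = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x)**2)
#   b = mean_y - m * mean_x
x_mean, y_mean = x.mean(), y.mean()
m = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
b = y_mean - m * x_mean

print(f"slope m = {m:.3f}, intercept b = {b:.3f}")  # slope m = 1.950, intercept b = 1.100

# Cross-check against NumPy's degree-1 polynomial fit (returns [slope, intercept])
m_np, b_np = np.polyfit(x, y, deg=1)
assert np.isclose(m, m_np) and np.isclose(b, b_np)
```

No searching is involved: the formula gives the best-fit line in one step. The catch is that it only works for this kind of model and this kind of error measure.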

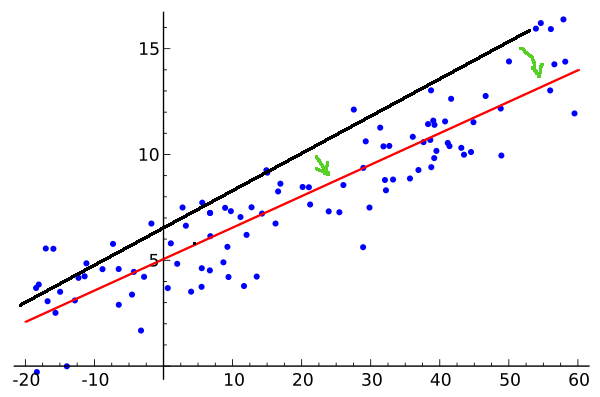

Deep Learning

(red line = better-and-better fit)

Try to search for parameters $w$ and $b$ iteratively

$y = wx + b$
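The iterative alternative can be sketched as plain gradient descent on the mean squared error. The sketch below makes a few assumptions:

- a handful of made-up points (roughly $y = 2x + 1$);
- a random starting guess;
- a hand-picked learning rate.

A framework would work out the gradients automatically. Here they are written by hand:

```python
import numpy as np

# Illustrative data: roughly y = 2x + 1 plus a little noise (made-up values)
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 7.0, 8.8])

rng = np.random.default_rng(seed=0)
w, b = rng.normal(), rng.normal()   # random starting guess for the parameters
learning_rate = 0.05                # size of each parameter step (assumed value)

for step in range(2000):
    y_pred = w * x + b
    error = y_pred - y
    loss = np.mean(error ** 2)      # mean squared error = 'amount of error'
    # Gradients of the loss with respect to w and b (derived by hand)
    grad_w = 2 * np.mean(error * x)
    grad_b = 2 * np.mean(error)
    # Nudge each parameter a little in the direction that reduces the loss
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"w = {w:.3f}, b = {b:.3f}, loss = {loss:.4f}")
```

On this simple problem, the loop ends up at essentially the same $w$ and $b$ that least squares gives directly. The point of the iterative approach is that the same recipe still works when no closed-form answer exists.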

Why do this iteratively?

- Traditional ML techniques:

  - Restrict the form of the relationship $x$ → $y$

  - Limit the 'Goodness of fit' measures available

  - Need 'features' hand-crafted by experts

- Deep Learning:

  - Can solve more intricate problems

  - Allows more flexible 'fit' measures

  - Given enough data, can create its own features

Deep Learning Key Ingredients

- Lots of Data

- Frameworks / construction kits that :

  - Have useful components

  - Can differentiate (maths)

    - ... which allows iterations to move parameters in helpful directions

- Lots of Compute:

  - GPUs (Graphics Processing Units)

  - TPUs (Tensor Processing Units)
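'Can differentiate' means two things. First, the framework records the operations used to compute the error. Then it applies the chain rule backwards through them; this is reverse-mode automatic differentiation. Real frameworks do this for whole tensors (for example `tf.GradientTape` in TensorFlow).

The `Value` class below is a hypothetical, scalar-only toy that shows the idea. It is not how any framework is implemented internally.

```python
class Value:
    """A scalar that remembers how it was computed, so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None      # pushes self.grad onto the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad
        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Order the recorded operations, then apply the chain rule in reverse
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


# Squared error of y = w*x + b on one made-up data point (x=2, target 5)
w, b = Value(0.5), Value(0.0)
x, target = 2.0, 5.0
err = w * x + b + (-target)   # prediction minus target
loss = err * err
loss.backward()
print(loss.data, w.grad, b.grad)  # 16.0 -16.0 -8.0
```

The signs of the gradients tell the optimiser which way to move. Both are negative here, so increasing `w` and `b` would reduce the error. Only `+` and `*` with a `Value` on the left are supported in this toy.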

Deep Learning Key Steps

- Get some compute: Google Colab is our friend

- Get some data: Use simple example dataset today

- Build a model:

  - Guess at what kind of 'layers' will be useful

  - ... a bit of an art

- Randomly initialise the model parameters (!)

- Work out the 'amount of error':

  - ... and figure out which direction improves it

- Optimise the model:

  - ... by taking small parameter steps
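Why 'small' steps? Here is a sketch on a toy one-parameter loss, $(w - 3)^2$; this is an assumed example, not one from the talk. The step size (learning rate) changes the outcome:

- a tiny step size creeps towards the minimum at $w = 3$;
- a moderate one gets there quickly;
- one that is too large overshoots further on every step, so the parameter blows up.

```python
def gradient_descent(lr, steps=50, w=0.0):
    """Minimise the toy loss (w - 3)**2 by repeatedly stepping against its gradient."""
    for _ in range(steps):
        grad = 2 * (w - 3)      # derivative of (w - 3)**2 with respect to w
        w = w - lr * grad       # take one step of size lr
    return w

for lr in (0.01, 0.1, 1.1):
    print(f"lr={lr}: w after 50 steps = {gradient_descent(lr):.4g}")
```

The learning rate values here are hand-picked for illustration. In real models, a workable step size is usually found by experiment.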

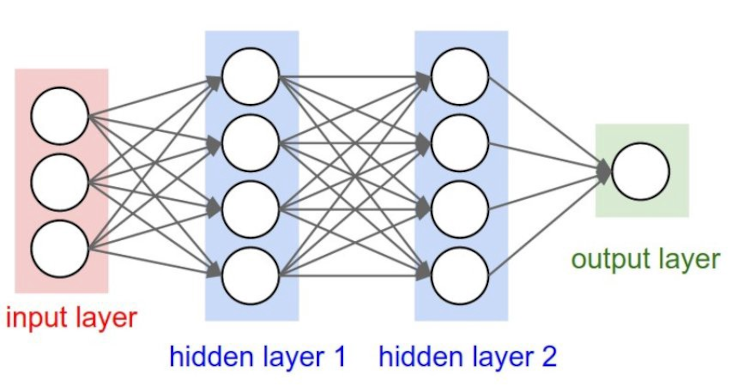

Model Building

- Today = the Basics:

  - Input layer = image 'flattened out'

  - Linear layer : $y = wx + b$, aka Dense layer : $\mathbf{y} = \mathbf{W}\mathbf{x} + \mathbf{b}$

  - Output layer = the end output

- But this is just a linear regression!
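A Dense layer is a matrix-vector product plus a bias. Here is a minimal NumPy sketch. The 28x28 greyscale image and the 10 output classes are hypothetical sizes, chosen only for illustration:

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical 28x28 greyscale image (random pixels, used only for its shape)
image = rng.random((28, 28))
x = image.reshape(-1)          # Input layer: flatten to a vector of 784 values

n_classes = 10                 # assumed number of outputs
W = rng.normal(scale=0.01, size=(n_classes, x.size))  # weights, randomly initialised
b = np.zeros(n_classes)                               # biases

y = W @ x + b                  # Dense layer: y = W.x + b
print(x.shape, W.shape, y.shape)   # (784,) (10, 784) (10,)
```

Each of the 10 outputs is a weighted sum of the 784 pixels plus a bias: one linear regression per output. That is why a single Dense layer on its own can only capture linear relationships.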

Model Building pt2

- Multiple Layers :

  - Can pile up Dense layers

  - But interleave 'non-linear' activation functions

    - ... allows more complex interactions

- Framework can 'trace' through the model

  - (so that it knows the error-fixing direction)

- Optimiser can then ...

  - ... nudge all the parameters to improve the model
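Here is a tiny example of why the non-linear activation matters. The weights are hand-picked for illustration, not learned.

- With nothing between them, two Dense layers still compute a linear function; with these weights, it is zero everywhere.
- Interleaving ReLU lets the same weights produce $|x|$. That V shape is something no single linear layer can represent.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)   # non-linear activation: zero out negative values

# Hand-picked weights: 1 input -> 2 hidden units -> 1 output
W1 = np.array([[1.0], [-1.0]]); b1 = np.zeros(2)
W2 = np.array([[1.0, 1.0]]);    b2 = np.zeros(1)

def two_layers(x, activation=None):
    h = W1 @ x + b1             # first Dense layer
    if activation is not None:
        h = activation(h)       # optional non-linearity between the layers
    return W2 @ h + b2          # second Dense layer

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
no_activation = [float(two_layers(np.array([v]))[0]) for v in xs]
with_relu = [float(two_layers(np.array([v]), relu)[0]) for v in xs]
print(no_activation)  # [0.0, 0.0, 0.0, 0.0, 0.0]
print(with_relu)      # [2.0, 1.0, 0.0, 1.0, 2.0]
```

Without the activation, the two layers collapse into the single linear map $\mathbf{W}_2\mathbf{W}_1\mathbf{x} + (\mathbf{W}_2\mathbf{b}_1 + \mathbf{b}_2)$. Piling up layers only adds expressive power once something non-linear sits between them.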

Model Layers

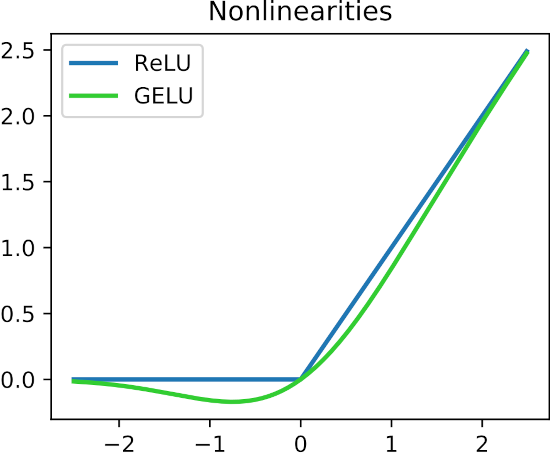

Non-Linear activation

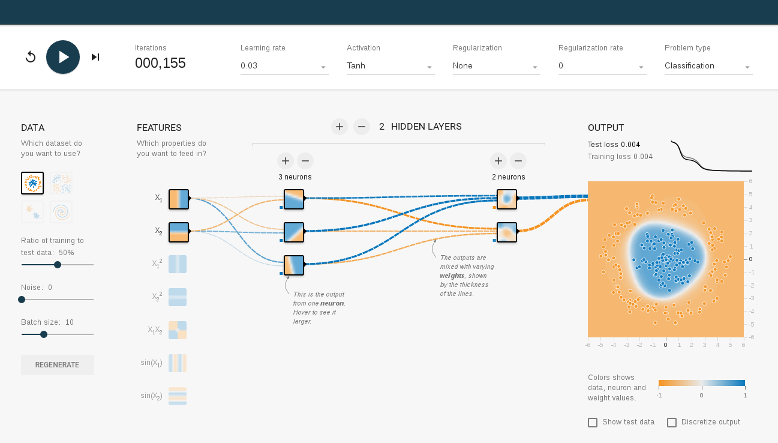

TensorFlow Playground

http://playground.tensorflow.org/

What's Going On?

- Task : Colour the regions to capture the points

- Dataset : Choose 1 of the 4 given

- Input : Sample points

- Output : Colour of each point

- First Layer : Pre-selected features

- Training : Play button

- Loss Measure : Graph

- Epoch : 1 run through all training data
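In practice, training data is usually shuffled and fed through in mini-batches. An epoch is complete once every example has been used once. Here is a minimal sketch of that bookkeeping. The function name `epochs_of_batches` and the sizes are hypothetical, and TensorFlow Playground's own internals may differ:

```python
import numpy as np

def epochs_of_batches(n_examples, batch_size, n_epochs, seed=0):
    """Yield (epoch, batch_indices); each epoch visits every example exactly once."""
    rng = np.random.default_rng(seed)
    for epoch in range(n_epochs):
        order = rng.permutation(n_examples)   # reshuffle at the start of every epoch
        for start in range(0, n_examples, batch_size):
            yield epoch, order[start:start + batch_size]

for epoch, batch in epochs_of_batches(n_examples=10, batch_size=4, n_epochs=2):
    print(epoch, batch)   # batches of 4, 4 and 2 indices in each epoch
```
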

Model Building pt3

- ( just ahead of the code-along )

- If we need Multi-way classification:

  - Output a score for each class - ideally, probabilities

  - Use a SoftMax layer to turn the scores into probabilities

- Target for classification:

  - Instead of "Cat", "Dog", "Bird" (or 0, 1, 2)

  - Use : [1,0,0], [0,1,0], [0,0,1]

    - (called a One-Hot Encoding)

- Error measure for classification: categorical cross-entropy (`categorical_crossentropy` in Keras)
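The three pieces above (SoftMax, one-hot encoding and categorical cross-entropy) can be written out by hand in NumPy for a single example. The raw scores are made-up. In Keras, the same ideas come as the `softmax` activation and the `categorical_crossentropy` loss:

```python
import numpy as np

classes = ["Cat", "Dog", "Bird"]

def one_hot(label_index, n_classes):
    target = np.zeros(n_classes)
    target[label_index] = 1.0
    return target

def softmax(scores):
    # Subtracting the max avoids overflow and does not change the result
    exps = np.exp(scores - np.max(scores))
    return exps / exps.sum()

def categorical_cross_entropy(target, probs, eps=1e-12):
    # With a one-hot target, only the probability of the true class contributes
    return -np.sum(target * np.log(probs + eps))

scores = np.array([2.0, 1.0, 0.1])                     # hypothetical raw model outputs
probs = softmax(scores)                                # positive, and they sum to 1
target = one_hot(classes.index("Cat"), len(classes))   # [1., 0., 0.]
loss = categorical_cross_entropy(target, probs)

print(probs.round(3), loss)
```

The loss is just $-\log$ of the probability given to the correct class. It shrinks towards 0 as the model becomes confidently right.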

Summary so far

- We're about to do hands-on Deep Learning!

- In the code-along you will:

  - Use Colab with its free GPU

  - Grab a dataset

  - Build a model - using Dense, ReLU and SoftMax

  - Measure the model error

  - Iteratively fit the model to the data

    - ... by taking small optimiser steps

- End result : Achieving first steps in Deep Learning
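Here is the whole recipe, end to end, in plain NumPy: a Dense → ReLU → Dense → SoftMax model, trained with categorical cross-entropy by gradient descent. This minimal sketch assumes:

- a made-up dataset of three 2-D blobs;
- a hidden layer of 16 units;
- a hand-picked learning rate.

The backward pass is derived by hand here. In the code-along, the framework works it out automatically.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical toy dataset: 3 blobs of 2-D points, one blob per class
centres = np.array([[0.0, 2.0], [2.0, -1.0], [-2.0, -1.0]])
labels = np.repeat(np.arange(3), 50)                    # 150 points, 50 per class
X = centres[labels] + rng.normal(scale=0.5, size=(150, 2))
Y = np.eye(3)[labels]                                   # one-hot targets

# Model: Dense(2 -> 16) -> ReLU -> Dense(16 -> 3) -> SoftMax, randomly initialised
W1 = rng.normal(scale=0.5, size=(2, 16)); b1 = np.zeros(16)
W2 = rng.normal(scale=0.5, size=(16, 3)); b2 = np.zeros(3)

def forward(X):
    h_pre = X @ W1 + b1
    h = np.maximum(h_pre, 0.0)                          # ReLU
    scores = h @ W2 + b2
    scores -= scores.max(axis=1, keepdims=True)         # numerical stability only
    exps = np.exp(scores)
    probs = exps / exps.sum(axis=1, keepdims=True)      # SoftMax
    return h_pre, h, probs

learning_rate = 0.1
losses = []
for step in range(1000):
    h_pre, h, probs = forward(X)
    loss = -np.mean(np.sum(Y * np.log(probs + 1e-12), axis=1))  # categorical cross-entropy
    losses.append(loss)

    # Backward pass: the chain rule, worked out by hand for these layers
    d_scores = (probs - Y) / len(X)      # gradient of mean cross-entropy w.r.t. scores
    dW2 = h.T @ d_scores
    db2 = d_scores.sum(axis=0)
    d_h = d_scores @ W2.T
    d_h_pre = d_h * (h_pre > 0)          # ReLU passes gradient only where it was active
    dW1 = X.T @ d_h_pre
    db1 = d_h_pre.sum(axis=0)

    # Optimiser: small steps against the gradient (plain gradient descent)
    W1 -= learning_rate * dW1; b1 -= learning_rate * db1
    W2 -= learning_rate * dW2; b2 -= learning_rate * db2

accuracy = np.mean(forward(X)[2].argmax(axis=1) == labels)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}, training accuracy: {accuracy:.2f}")
```

Every step from the slides appears here: random initialisation, measuring the error, finding the improving direction and taking small steps. Keras packages the same pieces as `Dense` layers, activations, a loss and an optimiser.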

- Code-Along! -

Further Study

- Field is growing very rapidly

- Lots of different things can be done

- Easy to find novel methods / applications

Deep Learning Foundations

- 3 week-days + online content

- Play with real models & Pick-a-Project

- Funding, Certificates, etc

- Dates : 26, 27 and 30 July

https://www.sginnovate.com/talent-development

Foundations of DL

Vision (Advanced)

Advanced Computer Vision with Deep Learning

- Advanced classification

- Generative Models (Style transfer)

- Deconvolution (Super-resolution)

- U-Nets (Segmentation)

- Object Detection

- 2 day course

Sequences (Advanced)

Advanced NLP and Temporal Sequence Processing

- Named Entity Recognition

- Q&A systems

- seq2seq

- Neural Machine Translation

- Attention mechanisms

- Attention Is All You Need (Transformers)

- 3 day course : Dates TBA @ SGInnovate

Unsupervised methods

- Clustering & Anomaly detection

- Latent spaces & Contrastive Learning

- Autoencoders, VAEs, etc

- GANs (WGAN, Conditional GAN, CycleGAN)

- Reinforcement Learning

- 2 day course

Real World Apps

Building Real World A.I. Applications

- DIY : node-server + redis-queue + python-ml

- TensorFlow Serving

- TensorFlow Lite + Core ML

- Model distillation

- ++

- 3 day course : Dates TBA @ SGInnovate

Deep Learning

MeetUp Group

- Next Regular Meeting ~ 1 month

  - Hosted by Google

- Typical Contents :

  - Talk for people starting out

  - Something from the bleeding-edge

  - Lightning Talks

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

- QUESTIONS -

Martin @ RedDragon . ai

Sam @ RedDragon . ai

My blog : http://mdda.net/

GitHub : mdda