Embed All The Things

TensorFlow & Deep Learning SG

18 December 2018

About Me

- Machine Intelligence / Startups / Finance

- Moved from NYC to Singapore in Sep-2013

- 2014 = 'fun' :

- Machine Learning, Deep Learning, NLP

- Robots, drones

- Since 2015 = 'serious' :: NLP + deep learning

- & GDE ML; TF&DL co-organiser

- & Papers...

- & Dev Course...

About Red Dragon AI

- Google Partner : Deep Learning Consulting & Prototyping

- SGInnovate/Govt : Education / Training

- Products :

- Conversational Computing

- Natural Voice Generation - multiple languages

- Knowledgebase interaction & reasoning

Outline

- whoami = DONE

- Word Embeddings

- Other Sequence Embeddings

- Other Object Embeddings

- Multi-Object Embeddings

- Wrap-up

Word Embeddings

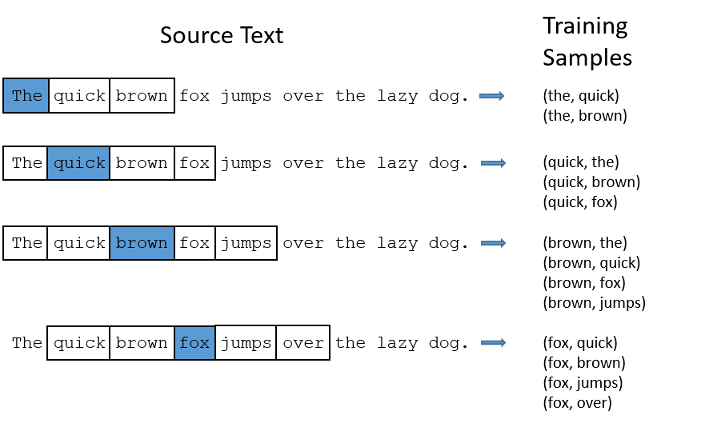

- Major advance in ~ 2013

- Words that are nearby in the text should have similar representations

- Assign a vector (~300-d) to each word

- Slide a 'window' over the text (1Bn words?)

- Word vectors are nudged around to minimise surprise

- Keep iterating until 'good enough'

- The vector-space of words self-organises...
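The steps above can be sketched in a few lines of plain Python. This is a toy skip-gram with negative sampling, which is one common way to "minimise surprise"; the function name, the 8-d vectors, the learning rate and the tiny corpus are all illustrative assumptions, not the settings of any real model.

```python
import math
import random

def train_embeddings(tokens, dim=8, window=2, epochs=50, lr=0.05, negatives=2, seed=0):
    """Toy skip-gram with negative sampling; returns {word: vector}."""
    rng = random.Random(seed)
    vocab = sorted(set(tokens))
    # One 'word' vector and one 'context' vector per word, initially random.
    vec = {w: [rng.uniform(-0.5, 0.5) for _ in range(dim)] for w in vocab}
    ctx = {w: [rng.uniform(-0.5, 0.5) for _ in range(dim)] for w in vocab}

    def nudge(word, other, label):
        # Predict "other appears near word" with a logistic unit;
        # the prediction error decides how far the vectors move.
        v, c = vec[word], ctx[other]
        score = sum(a * b for a, b in zip(v, c))
        pred = 1.0 / (1.0 + math.exp(-score))
        step = lr * (label - pred)
        for k in range(dim):
            v[k], c[k] = v[k] + step * c[k], c[k] + step * v[k]

    for _epoch in range(epochs):
        for i, word in enumerate(tokens):
            # Slide a window over the text around position i.
            start, stop = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j == i:
                    continue
                nudge(word, tokens[j], 1.0)               # seen together: pull closer
                for _neg in range(negatives):
                    nudge(word, rng.choice(vocab), 0.0)   # random word: push apart
    return vec

corpus = "the cat sat on the mat the dog sat on the rug".split()
vectors = train_embeddings(corpus)
```

A real run would use a much larger corpus and a tuned library implementation; the point here is only the window-and-nudge loop.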

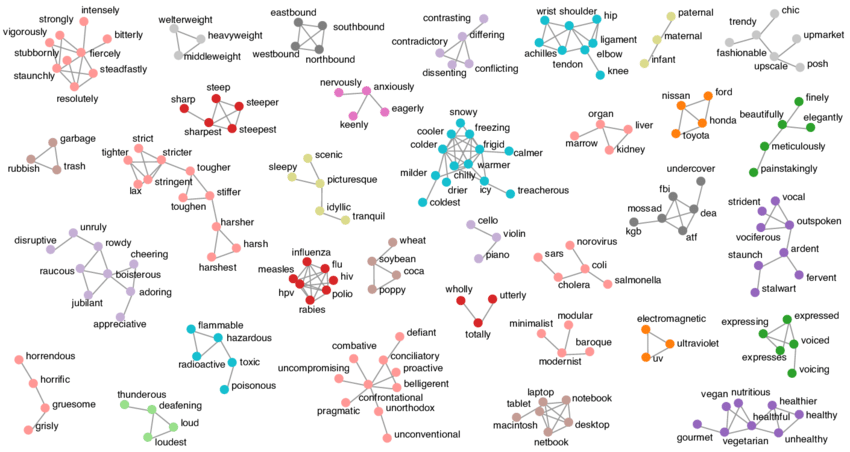

Word Embedding

- Example 'neighbourhoods' in 300-d space

(eg: word2vec or GloVe)
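A 'neighbourhood' is usually found by ranking words on cosine similarity. The sketch below assumes hand-made 3-d toy vectors (real word2vec or GloVe vectors would be loaded from a file); `neighbours` is a hypothetical helper name.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def neighbours(word, vectors, top_n=3):
    """Rank the other words by cosine similarity to `word`, most similar first."""
    scored = [(cosine(vectors[word], v), w) for w, v in vectors.items() if w != word]
    return [w for _, w in sorted(scored, reverse=True)[:top_n]]

# Hand-made toy vectors, for illustration only (real models use ~300-d).
toy = {
    "paris":  [0.9, 0.1, 0.0],
    "london": [0.8, 0.2, 0.1],
    "apple":  [0.0, 0.9, 0.3],
    "banana": [0.1, 0.8, 0.4],
}
print(neighbours("paris", toy, top_n=1))  # ['london']
```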

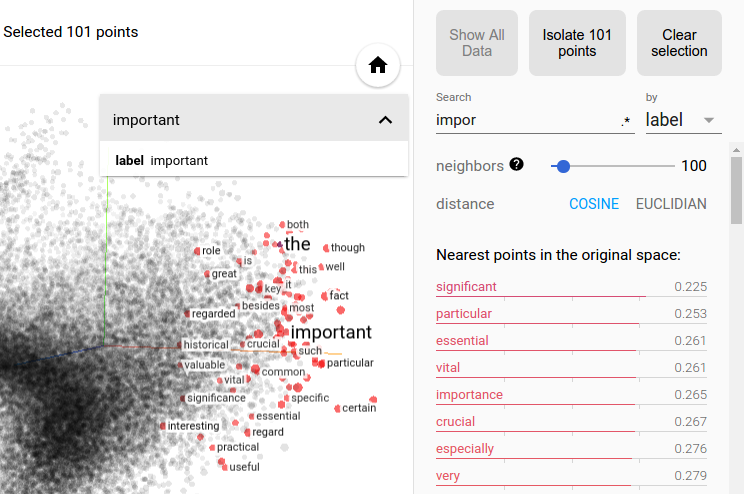

Embedding Visualisation

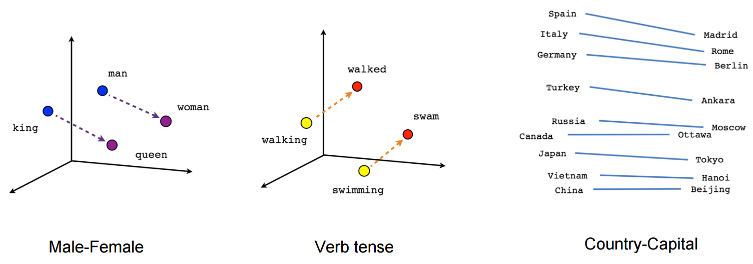

Embedding Geometry

Not clear why this works...
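Assuming the 'geometry' here refers to the well-known vector-offset analogies, the arithmetic can be sketched as below. The 2-d vectors are hand-made so that the offsets line up exactly; trained embeddings only approximate this. `analogy` is a hypothetical helper name.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def analogy(a, b, c, vectors):
    """Return the word closest to vec(b) - vec(a) + vec(c), excluding the inputs."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

# Toy vectors: first axis ~ 'royalty', second axis ~ 'gender' (illustrative only).
toy = {
    "king":  [0.9, 0.8],
    "queen": [0.9, -0.8],
    "man":   [0.1, 0.8],
    "woman": [0.1, -0.8],
    "apple": [-0.7, 0.1],
}
print(analogy("man", "king", "woman", toy))  # queen
```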

Sequence Embeddings

- Assign a vector (~50-d, initially random) to each token

- Slide a 'window' over each sequence of tokens

- Token vectors are nudged around to minimise surprise

- Keep iterating until 'good enough'

- The vector-space of tokens self-organises

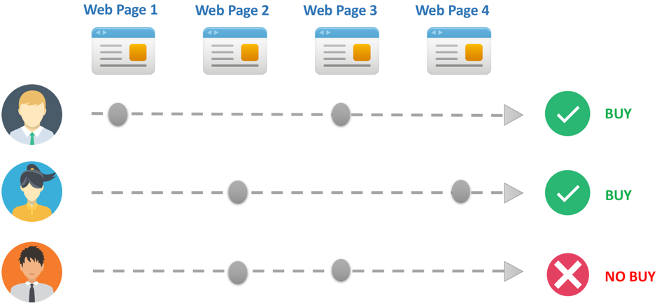

Applications

- Convert 'customer stories' into mini-sentences

- Train an embedding for the story steps

- Insights from embeddings...
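One way to build the 'mini-sentences' is to group an event log by customer and order each group by time. The event log, the step names and the `stories_from_events` helper below are all hypothetical; the resulting token lists could then be fed to any sequence-embedding trainer.

```python
from collections import defaultdict

def stories_from_events(events):
    """Group (customer, timestamp, step) events into one time-ordered token list per customer."""
    by_customer = defaultdict(list)
    for customer, timestamp, step in events:
        by_customer[customer].append((timestamp, step))
    return {c: [step for _, step in sorted(rows)] for c, rows in by_customer.items()}

# Invented event log, for illustration only.
events = [
    ("alice", 3, "checkout"),
    ("alice", 1, "visit_home"),
    ("bob",   1, "visit_home"),
    ("alice", 2, "add_to_cart"),
    ("bob",   2, "contact_support"),
]
stories = stories_from_events(events)
print(stories["alice"])  # ['visit_home', 'add_to_cart', 'checkout']
```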

Something non-text?

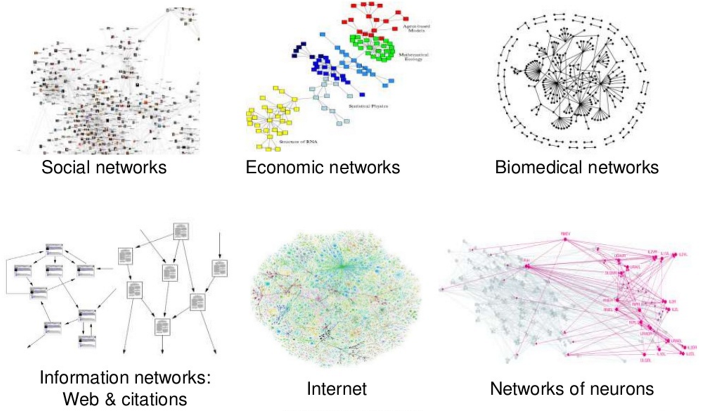

Graphs are everywhere!

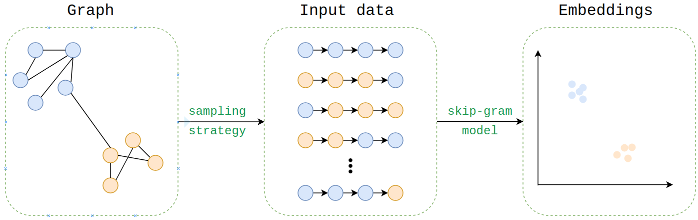

Graph Embedding

- Assign a vector (~50-d, initially random) to each node

- Generate random paths along edges

- Run the sequence embedding on the generated paths

- Keep iterating until 'good enough'

- Node representations self-organise
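The path-generation step can be sketched as below. This version uses uniform random walks, which is a simplifying assumption: node2vec itself biases the walk with return and in-out parameters. The toy graph and the `random_walks` helper are illustrative.

```python
import random

def random_walks(adjacency, walks_per_node=2, walk_length=5, seed=0):
    """Uniform random walks along edges; each walk is a 'sentence' of node names."""
    rng = random.Random(seed)
    walks = []
    for _ in range(walks_per_node):
        for start in adjacency:
            walk = [start]
            # Stop early only if the current node has no outgoing edges.
            while len(walk) < walk_length and adjacency[walk[-1]]:
                walk.append(rng.choice(adjacency[walk[-1]]))
            walks.append(walk)
    return walks

# Tiny undirected toy graph as adjacency lists (hypothetical data).
graph = {
    "a": ["b", "c"],
    "b": ["a", "c"],
    "c": ["a", "b", "d"],
    "d": ["c"],
}
walks = random_walks(graph)
```

Each walk can then be treated exactly like a sentence of tokens in the sequence-embedding step.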

Graph Embedding

(eg: node2vec)

Applications

- Link prediction :

- Facebook : Nodes are users, links are Friendships

- arXiv : Collaboration

- Biology : Protein-protein interactions

- Hands-on 'footballers' example...
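A minimal sketch of link prediction, assuming node embeddings are already trained: score every pair of nodes that is not yet linked and rank by that score. The dot-product score is one simple choice among several; the names and 2-d vectors are invented for illustration.

```python
from itertools import combinations

def rank_missing_links(embeddings, edges):
    """Rank node pairs that are not yet linked by embedding dot product, highest first."""
    known = {frozenset(e) for e in edges}
    scored = [
        (sum(a * b for a, b in zip(embeddings[u], embeddings[v])), u, v)
        for u, v in combinations(sorted(embeddings), 2)
        if frozenset((u, v)) not in known
    ]
    return [(u, v) for _, u, v in sorted(scored, reverse=True)]

# Hypothetical 2-d node embeddings, as if learned from random walks.
emb = {"ann": [1.0, 0.1], "bob": [0.9, 0.2], "cat": [0.8, 0.3], "dan": [-0.2, 1.0]}
edges = [("ann", "bob"), ("bob", "cat"), ("cat", "dan")]
print(rank_missing_links(emb, edges)[0])  # ('ann', 'cat')
```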

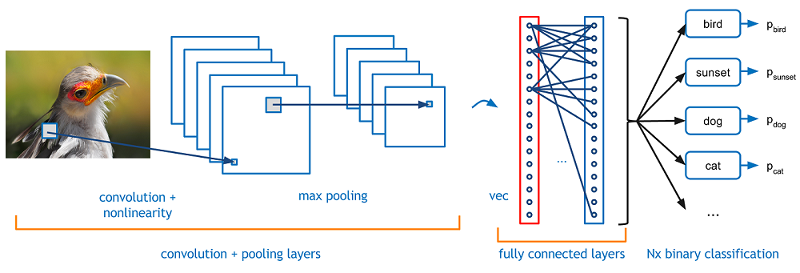

How about images?

Processing an Image

Embedding Images

- Calculate the representation from a pretrained CNN ...

- Just ignore the last layer(s)

- Take the output, and normalise it a bit

- ... this probably works straight away
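The 'normalise it a bit' step is often just L2-normalisation, after which a dot product behaves like cosine similarity. The sketch below assumes the feature vectors have already been taken from a pretrained CNN with its last layer(s) removed; the numbers and filenames are stand-ins, and `most_similar` is a hypothetical helper.

```python
import math

def l2_normalise(features):
    """Scale a feature vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in features))
    return [x / norm for x in features] if norm > 0 else list(features)

def most_similar(query, gallery):
    """Return the gallery key whose normalised vector best matches the normalised query."""
    q = l2_normalise(query)
    return max(
        gallery,
        key=lambda name: sum(a * b for a, b in zip(q, l2_normalise(gallery[name]))),
    )

# Stand-in vectors: in practice these would be CNN outputs with thousands of dimensions.
gallery = {
    "cat_1.jpg": [4.0, 0.5, 0.1],
    "cat_2.jpg": [2.0, 0.3, 0.0],
    "car_1.jpg": [0.2, 0.1, 5.0],
}
best = most_similar([1.0, 0.1, 0.0], gallery)
```

The same ranking is the core of a simple image search: embed the query image, then return the closest gallery entries.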

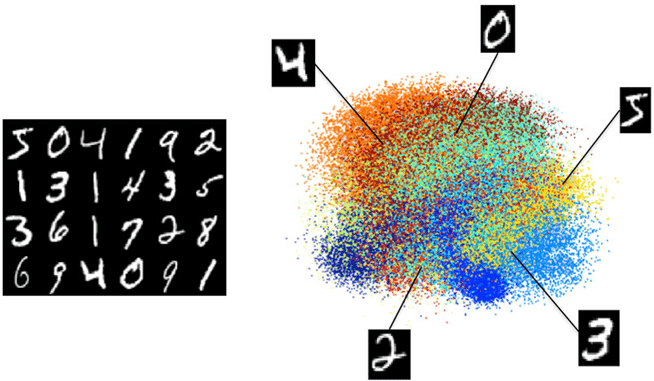

MNIST embedding

Clustering is the same thing as neighbourhoods...

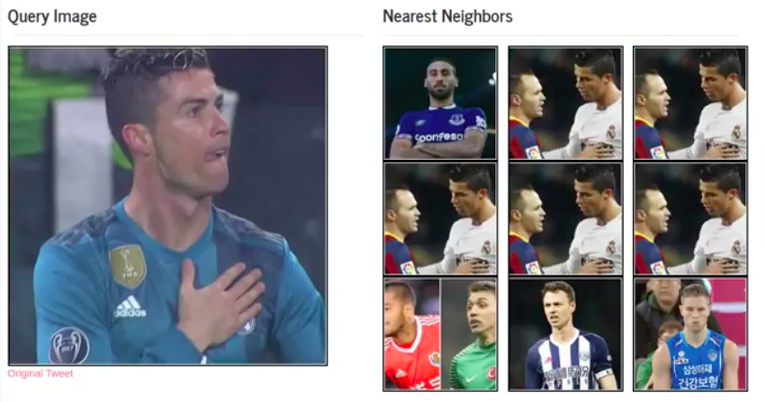

Applications

Image search

Multiple things?

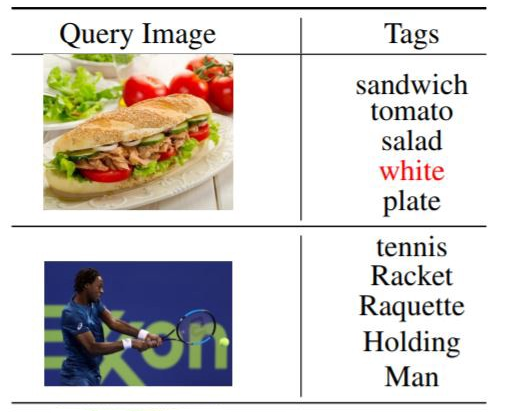

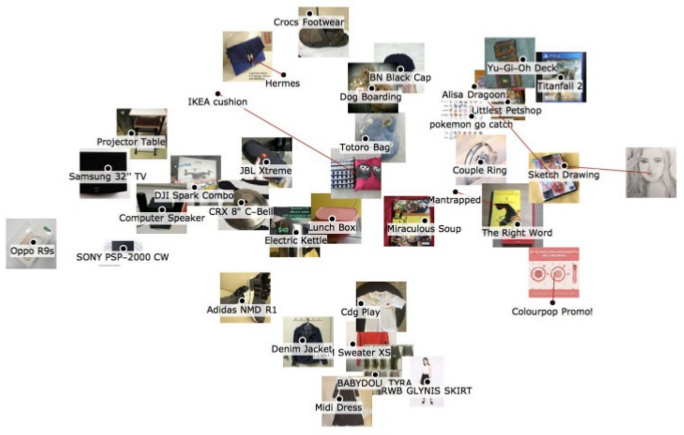

Images with tags

Embedding Two Types...

- Add 'transformer matrices' :

- 'P' matrix projects CNN representation of image

- 'Q' matrix projects word embedding

- ... fix up P and Q to align them

- 'Latent Space' self-organises...
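A minimal sketch of 'fixing up' P and Q, under a strong simplifying assumption: the loss is just the squared distance between each projected image and its projected tag. On its own this loss can be driven to zero by shrinking both matrices, so a practical system would add a contrastive or ranking term with mismatched pairs. All vectors, dimensions and helper names below are illustrative.

```python
import random

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def alignment_loss(P, Q, pairs):
    """Sum of squared distances between projected image and projected word vectors."""
    total = 0.0
    for x, w in pairs:
        total += sum((p - q) ** 2 for p, q in zip(matvec(P, x), matvec(Q, w)))
    return total

def align(pairs, latent_dim=2, steps=200, lr=0.02, seed=0):
    """Full-batch gradient descent on P and Q; returns the two projection matrices."""
    rng = random.Random(seed)
    img_dim, word_dim = len(pairs[0][0]), len(pairs[0][1])
    P = [[rng.uniform(-0.5, 0.5) for _ in range(img_dim)] for _ in range(latent_dim)]
    Q = [[rng.uniform(-0.5, 0.5) for _ in range(word_dim)] for _ in range(latent_dim)]
    for _ in range(steps):
        grad_P = [[0.0] * img_dim for _ in range(latent_dim)]
        grad_Q = [[0.0] * word_dim for _ in range(latent_dim)]
        for x, w in pairs:
            # Gap between the two projections of a matched (image, tag) pair.
            diff = [p - q for p, q in zip(matvec(P, x), matvec(Q, w))]
            for r in range(latent_dim):
                for k in range(img_dim):
                    grad_P[r][k] += 2 * diff[r] * x[k]
                for k in range(word_dim):
                    grad_Q[r][k] -= 2 * diff[r] * w[k]
        for r in range(latent_dim):
            for k in range(img_dim):
                P[r][k] -= lr * grad_P[r][k]
            for k in range(word_dim):
                Q[r][k] -= lr * grad_Q[r][k]
    return P, Q

# Toy (image feature, word vector) pairs; real inputs would be CNN and word2vec outputs.
pairs = [
    ([1.0, 0.0, 0.2], [1.0, 0.0]),
    ([0.0, 1.0, 0.1], [0.0, 1.0]),
    ([0.9, 0.1, 0.0], [0.8, 0.2]),
]
P, Q = align(pairs)
```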

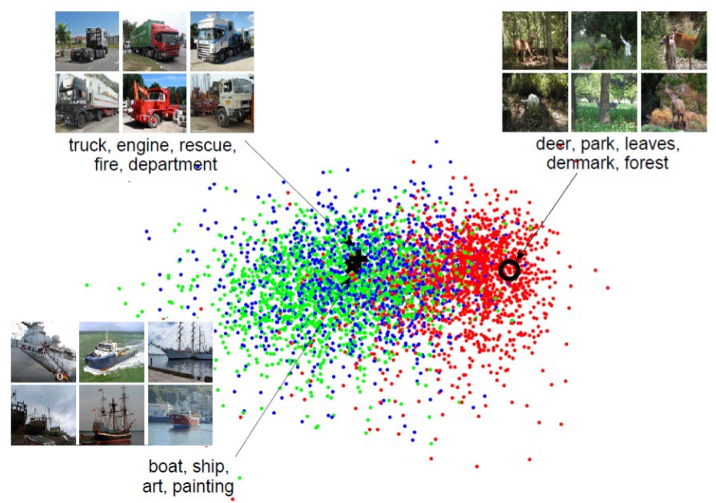

Common Embedding Space

Both 'modalities' map into same 'space'

Applications

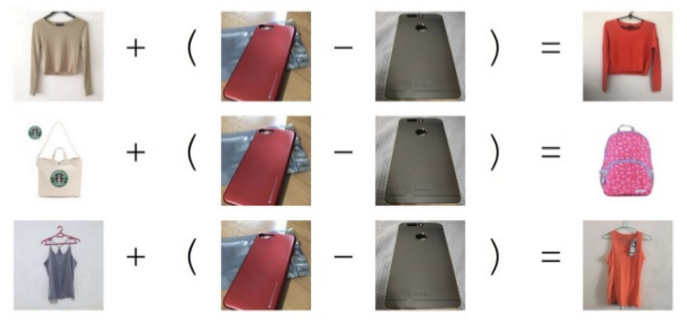

Includes 'Geometry'...

Once again, magical relationships appear!

Wrap-up

- Word/NLP embeddings are fundamental

- Embeddings apply far beyond words

- Embed All The Things!

Deep Learning MeetUp Group

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

- Next Meeting :

- ?-Jan, hosted at Google ("NeurIPS special")

- Typical Contents :

- Talk for people starting out

- Something from the bleeding-edge

- Lightning Talks

- NB : >3250 Members !!

Deep Learning : Jump-Start Workshop

- First part of Full Developer Course

- Dates : Jan 28+29 + online

- 2 week-days + online content

- Play with real models & Pick-a-Project

- Regroup on subsequent week-night(s)

- Cost is heavily subsidised for SC/PR!

- SGInnovate - Talent - Talent Development - Deep Learning Developer Series

Deep Learning Developer Course

- Module #1 : JumpStart (see previous slide)

- Each 'module' will include :

- Instruction

- Individual Projects

- 70%-100% funding via IMDA for SG/PR

- Module #2 : 31-Jan + 1-Feb : Advanced Computer Vision

- Module #3 : 14-15 March : Advanced NLP

- Location : SGInnovate/BASH

RedDragon AI Intern Hunt

- Opportunity to do Deep Learning all day

- Work on something cutting-edge

- Location : Singapore

- Status : SG/PR FTW

- Need to coordinate timing...

- QUESTIONS -

Martin @ RedDragon . AI

My blog : http://blog.mdda.net/

GitHub : mdda