Intrinsic Dimension

PyTorch & Deep Learning SG

15 May 2018

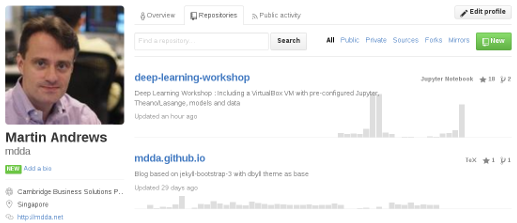

About Me

- Machine Intelligence / Startups / Finance
  - Moved from NYC to Singapore in Sep-2013
- 2014 = 'fun' :
  - Machine Learning, Deep Learning, NLP
  - Robots, drones
- Since 2015 = 'serious' : NLP + deep learning
  - & Papers...
  - & Dev Course...

About Red Dragon AI

- Deep Learning Consulting & Prototyping

- Education / Training

- Products :
  - Conversational Computing
  - Natural Voice Generation - multiple languages
  - Knowledgebase interaction & reasoning

Uber Research

- Lots of explanatory material :

Bonus Video

Overall Goal

- Want to quantify :
  - Data 'difficulty'
  - Model 'relative effectiveness'
  - ... value of model structure
- Ideally : how many parameters does a given dataset really require?

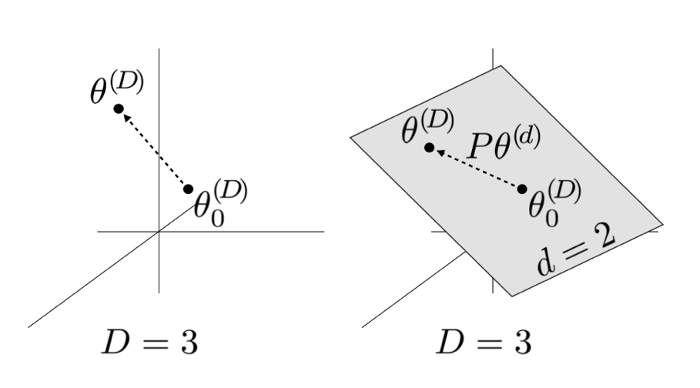

Big idea

- Today's models have many parameters : a 'D'-dimensional parameter space
- Probably, many of these parameters are free :
  - Many of them are redundant
  - So the 'solution(s)' form a space, not a single point
- Restrict the search to a lower-dimensional subspace of dimension 'd'
- Build all 'D' parameters from the 'd' trainable ones by a fixed random projection
- Intrinsic Dimension = the lowest 'd' that 'solves' the problem
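In symbols, the recipe above trains only a small vector θ_d and rebuilds the full parameter vector as θ_D = θ_0 + P θ_d, where θ_0 is the usual random initialisation and P is a fixed, untrained D × d random matrix. A minimal NumPy sketch, assuming a Gaussian P with unit-length columns and arbitrary sizes (`make_subspace` and `full_params` are hypothetical names, not taken from the notebook):

```python
import numpy as np


def make_subspace(D, d, seed=0):
    """Return (theta0, P): a random starting point in R^D and a fixed D x d projection."""
    rng = np.random.default_rng(seed)
    theta0 = rng.normal(scale=0.1, size=D)          # ordinary random initialisation
    P = rng.normal(size=(D, d))                     # random directions (assumed Gaussian)
    P /= np.linalg.norm(P, axis=0, keepdims=True)   # unit-length columns
    return theta0, P


def full_params(theta_d, theta0, P):
    """Map the d trainable numbers to all D model parameters."""
    return theta0 + P @ theta_d


D, d = 10_000, 50
theta0, P = make_subspace(D, d)
theta_d = np.zeros(d)                               # start exactly at theta0
theta_D = full_params(theta_d, theta0, P)
print(theta_D.shape, np.allclose(theta_D, theta0))  # (10000,) True
```

Setting θ_d = 0 recovers the ordinary initialisation exactly, so the searched subspace always passes through the normal starting point.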

Parameter Search

Implementation

- Create a model
- Start with regular random initialisation
- Search only along a random lower-dimensional 'ray' (subspace) through that starting point
- Can use backprop as normal
- If performance is >90% of the full-parameter baseline : Accept
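The 'backprop as normal' point follows from the chain rule: with θ_D = θ_0 + P θ_d, the gradient for the d trainable numbers is just Pᵀ times the usual gradient for all D parameters. A minimal NumPy sketch on a hypothetical toy regression task (inputs that vary along only 5 hidden directions, so most of the 200 weights are redundant), reading the acceptance rule as 'at least 90% of the R² reached with all D parameters free'; the task, sizes and metric are assumptions for illustration:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy task: D = 200 weights, but the inputs only vary along
# k = 5 hidden directions, so most parameters are redundant.
n, D, k, d = 1000, 200, 5, 10
Z = rng.normal(size=(n, k))
X = Z @ rng.normal(size=(k, D))                 # rank-k design matrix
y = Z @ rng.normal(size=k) + 0.1 * rng.normal(size=n)

theta0 = rng.normal(scale=0.01, size=D)          # regular random initialisation
P = rng.normal(size=(D, d))
P /= np.linalg.norm(P, axis=0)                   # fixed projection, never trained


def loss_and_grad(theta_d):
    """Mean-squared error and its gradient w.r.t. the d subspace coordinates."""
    theta_D = theta0 + P @ theta_d
    resid = X @ theta_D - y
    grad_D = 2.0 * X.T @ resid / n               # ordinary gradient w.r.t. all D params
    return np.mean(resid ** 2), P.T @ grad_D     # chain rule: backprop through P


def r2(theta_D):
    """Fraction of the variance of y explained by the linear model."""
    return 1.0 - np.mean((X @ theta_D - y) ** 2) / np.var(y)


# Plain gradient descent on theta_d; step 1/L is safe for this quadratic loss.
XP = X @ P
lr = 1.0 / (2.0 * np.linalg.norm(XP, 2) ** 2 / n)
theta_d = np.zeros(d)
start_loss, _ = loss_and_grad(theta_d)
for _ in range(1000):
    _, g = loss_and_grad(theta_d)
    theta_d -= lr * g
end_loss, _ = loss_and_grad(theta_d)

# Baseline: best fit when all D parameters are free.
theta_full, *_ = np.linalg.lstsq(X, y, rcond=None)
perf_sub, perf_full = r2(theta0 + P @ theta_d), r2(theta_full)
accept = perf_sub >= 0.9 * perf_full             # the '90% of baseline' rule
print(f"subspace R^2={perf_sub:.3f}, full R^2={perf_full:.3f}, accept={accept}")
```

Plain gradient descent with step 1/L (L being the largest curvature of this quadratic loss) keeps the sketch dependency-free; with a framework such as PyTorch, autograd would produce the same Pᵀ-projected gradient automatically.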

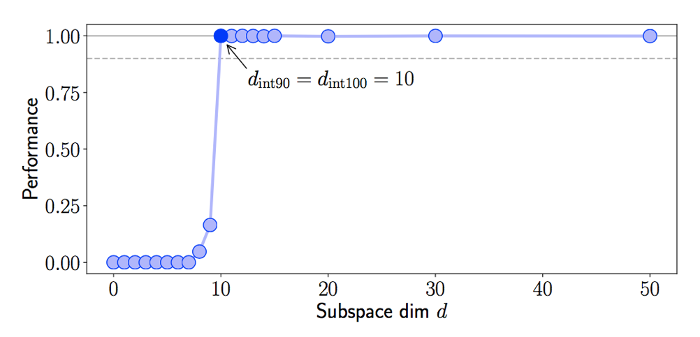

Intrinsic Dimension

Hunt for the critical value (idealised)
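In the idealised picture, performance only improves as d grows, so the critical value can be found by bisection instead of trying every d. A minimal sketch, assuming a hypothetical `evaluate(d)` callback that trains in a d-dimensional subspace and returns a score; the smooth curve in the usage line is made up:

```python
import math
from typing import Callable, Optional


def intrinsic_dimension(evaluate: Callable[[int], float],
                        baseline: float,
                        d_max: int,
                        threshold: float = 0.9) -> Optional[int]:
    """Smallest d in [1, d_max] with evaluate(d) >= threshold * baseline.

    Assumes performance never drops as d grows (the idealised picture),
    so bisection needs only about log2(d_max) calls to evaluate().
    """
    target = threshold * baseline
    if evaluate(d_max) < target:
        return None                      # not 'solved' even at d_max
    lo, hi = 1, d_max                    # invariant: every d < lo fails, hi passes
    while lo < hi:
        mid = (lo + hi) // 2
        if evaluate(mid) >= target:
            hi = mid
        else:
            lo = mid + 1
    return hi


# Usage with a made-up curve: performance(d) = 1 - exp(-d / 100), baseline 1.0
print(intrinsic_dimension(lambda d: 1.0 - math.exp(-d / 100), baseline=1.0, d_max=1000))  # 231
```

Each call to `evaluate` is a full training run and real curves are noisy, so in practice a coarse sweep over a handful of d values may be more robust than strict bisection.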

Random Projections

Underlying Details

- Known to be approximately distance-preserving
- Random directions are unlikely to be co-linear
- Matrix can be :
  - Dense, and stored
  - Sparse (still unlikely to be co-linear)
  - Other, easily computed forms
  - Possibly created on-the-fly
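The properties listed above can be checked numerically. A minimal NumPy sketch, assuming D = 20,000 and d = 100; the dense columns are Gaussian, the sparse ones follow an Achlioptas-style ±1/0 pattern with about two-thirds zeros (one common choice, not necessarily the one used in the notebook), and every column is regenerated from a (seed, column) pair so that P never has to be stored:

```python
import numpy as np

D, d, seed = 20_000, 100, 0


def dense_column(j):
    """Column j of a dense Gaussian projection, regenerated from (seed, j)."""
    v = np.random.default_rng([seed, j]).normal(size=D)
    return v / np.linalg.norm(v)


def sparse_column(j):
    """Column j of an Achlioptas-style sparse projection (about 2/3 zeros)."""
    rng = np.random.default_rng([seed, j])
    v = rng.choice([-1.0, 0.0, 1.0], size=D, p=[1 / 6, 2 / 3, 1 / 6])
    return v / np.linalg.norm(v)


def project_on_the_fly(theta_d, column):
    """Compute P @ theta_d without ever storing the D x d matrix P."""
    out = np.zeros(D)
    for j, t in enumerate(theta_d):
        out += t * column(j)
    return out


def max_abs_cosine(P):
    """Largest |cos angle| between two different (unit-norm) columns."""
    G = P.T @ P
    np.fill_diagonal(G, 0.0)
    return np.abs(G).max()


P_dense = np.stack([dense_column(j) for j in range(d)], axis=1)    # stored version
P_sparse = np.stack([sparse_column(j) for j in range(d)], axis=1)  # kept dense for simplicity

v = np.random.default_rng(1).normal(size=d)
print("max |cos|, dense :", max_abs_cosine(P_dense))   # small: columns nearly orthogonal
print("max |cos|, sparse:", max_abs_cosine(P_sparse))  # also small
print("norm ratio       :", np.linalg.norm(P_dense @ v) / np.linalg.norm(v))  # close to 1
print("on-the-fly match :", np.allclose(project_on_the_fly(v, dense_column), P_dense @ v))
```

A memory-saving sparse version would store only the indices and signs of the non-zero entries rather than the full array used here.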

Code

- Independent implementation :
  - Framework = PyTorch
  - On GitHub as a Notebook
  - Advantages over official TensorFlow version

https://github.com/mdda/deep-learning-workshop/notebooks/work-in-progress/IntrinsicDimension.ipynb

Load Directly into Colab

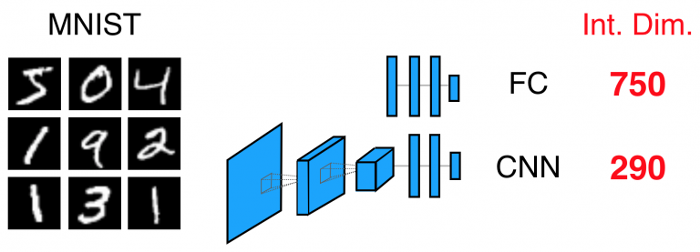

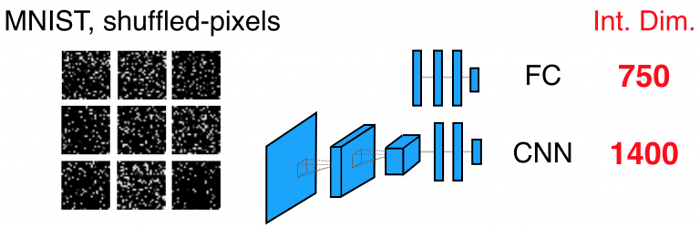

MNIST

Fully Connected vs CNN

The CNN reaches the threshold at a lower 'd' than the FC network : 'visual invariance' is a win

Shuffled MNIST

Fully Connected vs CNN

With shuffled pixels, FC is identical, but the CNN now does worse than FC : its structure is of negative value
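Why the FC result is unchanged: a fixed pixel shuffle only relabels the inputs of the first layer, so any FC solution for the original images has an exact counterpart for the shuffled ones (permute the weight columns the same way). A CNN's small local filters assume neighbouring pixels are related, which the shuffle destroys. A minimal NumPy check with MNIST-sized vectors; the random image and weights are stand-ins, not real data:

```python
import numpy as np

rng = np.random.default_rng(0)
n_pixels, n_hidden = 784, 200                 # MNIST-sized input; hidden size is arbitrary
x = rng.random(n_pixels)                      # stand-in for one flattened image
W = rng.normal(size=(n_hidden, n_pixels))     # first fully connected layer

perm = rng.permutation(n_pixels)              # one fixed shuffle, applied to every image
x_shuffled = x[perm]
W_matched = W[:, perm]                        # the same shuffle applied to the weight columns

# The FC layer cannot tell the difference: it just sees a relabelling of its inputs.
print(np.allclose(W @ x, W_matched @ x_shuffled))  # True
```

Because an i.i.d. random initialisation and projection are themselves statistically unchanged by such a relabelling, the measured intrinsic dimension of the FC network should match as well.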

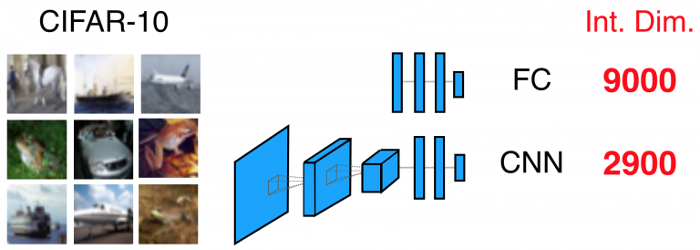

CIFAR-10

Fully Connected vs CNN

By intrinsic dimension, CIFAR-10 is about '10 times harder' than MNIST

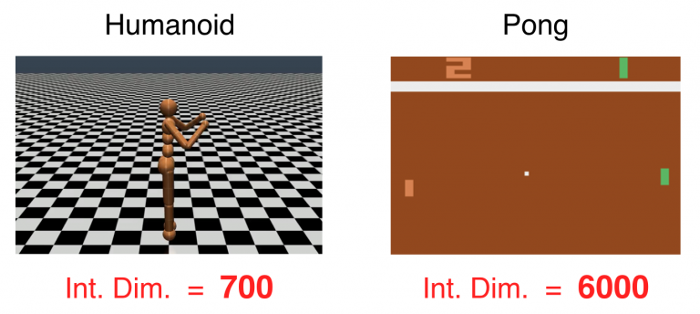

Reinforcement Learning

The same measurement works for RL, so different problem spaces can be compared

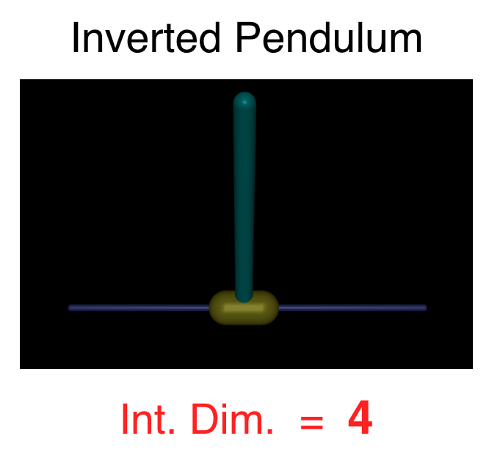

Toy RL Problem

Surprisingly easy...

Wrap-up

- This is a very simple idea

- Real research still possible with MNIST

- Having a free GPU is VERY helpful

Deep Learning MeetUp Group

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

- Next Meetings :
  - 17-May-2018 : Hosted by Google
- Typical Contents :
  - Talk for people starting out
  - Something from the bleeding-edge
  - Lightning Talks

Deep Learning : Jump-Start Workshop

- Dates + Cost : End-June, S$600
  - Full day (week-end)
  - Play with real models
  - Get inspired!
  - Pick-a-Project to do at home
  - 1-on-1 support online
  - Regroup on subsequent week-nights

Deep Learning Developer Course

- Plan : Advanced modules in September/October

- JumpStart module is ~ prerequisite

- Each 'module' will include :
  - Instruction
  - Individual Projects

- Support by SG govt (planned)

- Location : SGInnovate

- Status : TBA

- QUESTIONS -

Martin @ RedDragon.ai

My blog : http://mdda.net/

GitHub : mdda