Deep Learning Workshop

FOSSASIA 2018

Learn to Learn to Learn

25 March 2018

About Me

- Machine Intelligence / Startups / Finance

- Moved from NYC to Singapore in Sep-2013

- 2014 = 'fun' :

- Machine Learning, Deep Learning, NLP

- Robots, drones

- Since 2015 = 'serious' :: NLP + deep learning

- & Papers...

- & Dev Course...

About Red Dragon AI

- Deep Learning Consulting & Prototyping

- Education / Training

- Products :

- Conversational Computing

- Natural Voice Generation - multiple languages

- Knowledgebase interaction & reasoning

Learning to Learn to Learn

- The basic ideas of Learning

- Learning from a lot of data

- Learning from some data

- Learning from a little data

NB: If you have VirtualBox : Install the 'OVA'

Workshop : Neurons and Features

- Go to the JavaScript example : TensorFlow

(or search online for TensorFlow Playground)

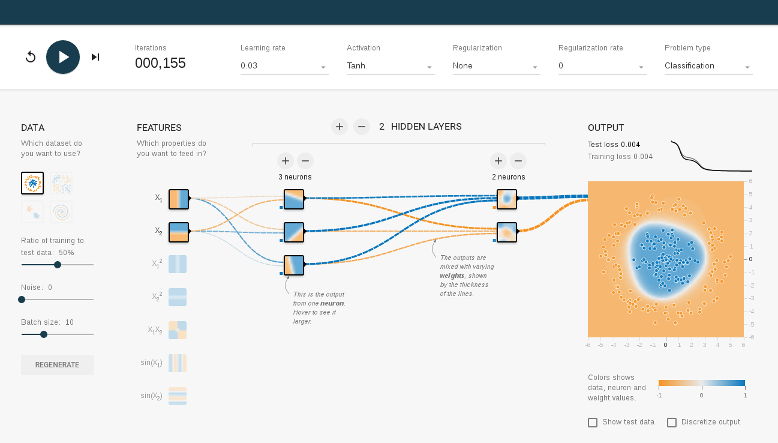

TensorFlow Playground

Things to Understand

- Hands-on :

- Goal : learning to predict regions

- Input features

- What a single neuron can learn

- The blame game

- How deep networks 'create' features
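The points above can be tried outside the Playground too. What follows is a minimal sketch, not the Playground's own code: one sigmoid neuron trained by gradient descent in NumPy. The data, the learning rate and the helper name `train_neuron` are all assumptions made for illustration. The neuron should cope with a single straight boundary, should stay roughly near chance on an XOR-style quadrant pattern, and should recover once the product `x0 * x1` is supplied as an extra input feature.

```python
import numpy as np

def train_neuron(X, y, lr=0.5, steps=2000, seed=0):
    """Train one sigmoid neuron with plain gradient descent; return its accuracy."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # forward pass
        err = p - y                             # the "blame" at the output
        w -= lr * X.T @ err / len(y)            # blame shared out to each weight
        b -= lr * err.mean()
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
    return float(((p > 0.5) == (y > 0.5)).mean())

rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(400, 2))

y_linear = (X[:, 0] + X[:, 1] > 0).astype(float)  # one straight boundary
y_xor = (X[:, 0] * X[:, 1] > 0).astype(float)     # opposite quadrants share a label

acc_linear = train_neuron(X, y_linear)
acc_xor_raw = train_neuron(X, y_xor)

# Hand-made feature: the product of the two inputs makes XOR linearly separable.
X_feat = np.column_stack([X, X[:, 0] * X[:, 1]])
acc_xor_feat = train_neuron(X_feat, y_xor)

print(acc_linear, acc_xor_raw, acc_xor_feat)
```

A deeper network would have to build something like the `x0 * x1` feature for itself in its hidden layers, which is the sense in which it 'creates' features.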

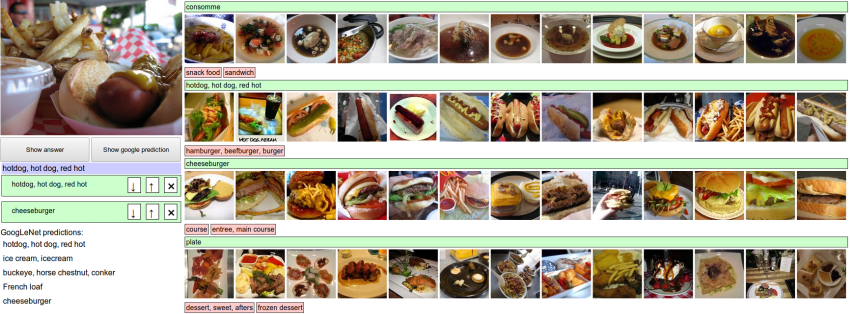

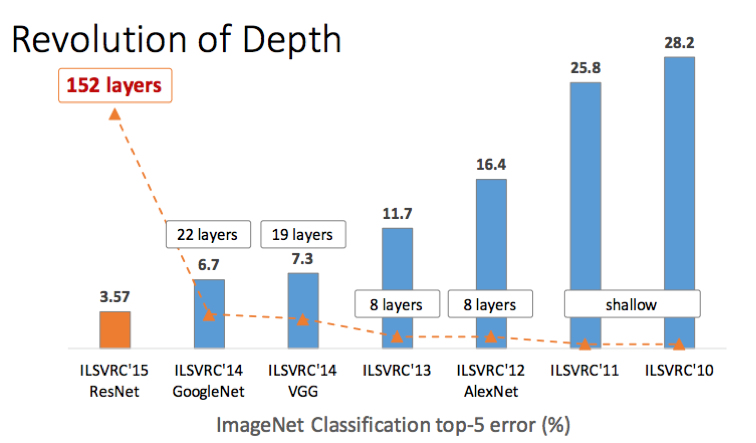

Image Competition

- ImageNet, the dataset behind the ILSVRC competition

- over 15 million labeled high-resolution images...

- ... in over 22,000 categories

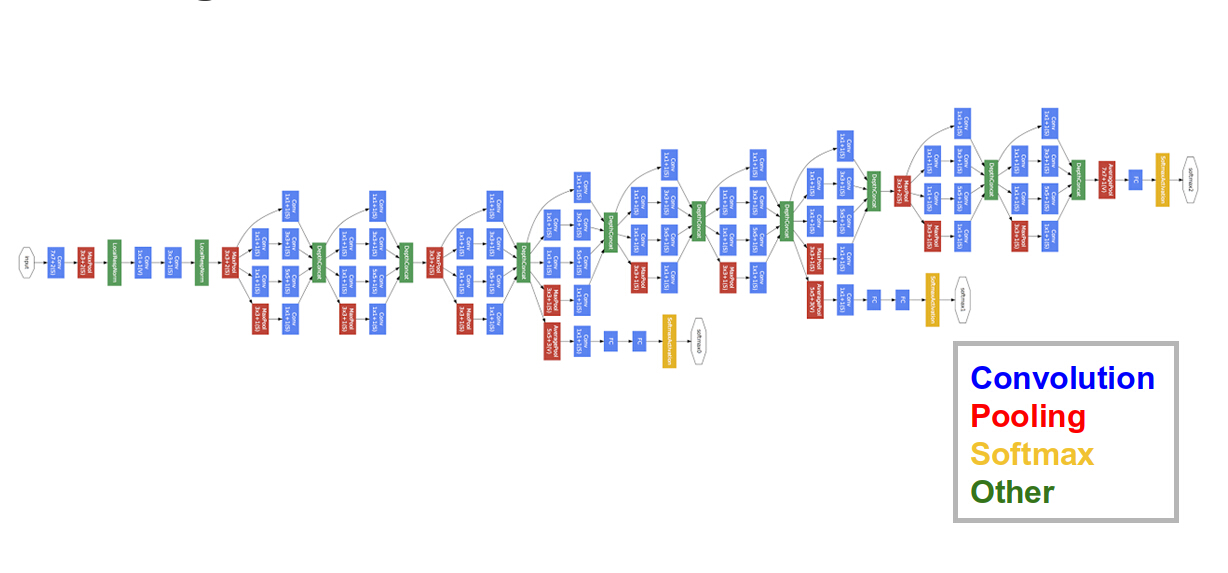

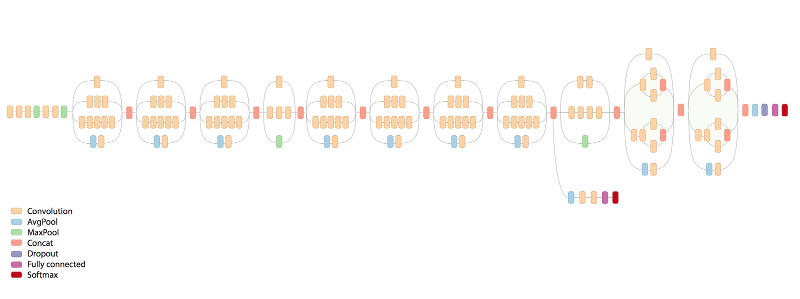

More Complex Networks

GoogLeNet (2014)

... Even More Complex

Google Inception-v3 (2015)

... and Deeper

Microsoft ResNet (2015)

Workshop : VirtualBox

- Import Appliance 'fossasia ... .OVA'

- Start the Virtual Machine...

Workshop : Jupyter

- On your 'host' machine

- Go to

http://localhost:8080/

VM : SSH

From your 'host' machine :

ssh -p 8282 user@localhost

VM : Console

Login: user

Password: password

#...

./run-jupyter.bash

#....

Workshop : TensorBoard

- On your 'host' machine

- Go to

http://localhost:8081/

Hands-On : ImageNet

2-CNN/5-TransferLearning/

5-ImageClassifier-keras.ipynb

ImageNet Classification

Trained from Zero

- Uses vast numbers of images

- Huge computational resources

- Does exactly what we told it to do

Transfer Learning

Based on existing model

- Uses pretrained (ImageNet) model

- Leverages it to classify new classes

- Much less training data required

Next Level Learning

- Previous methods learn from large amounts of data

- But humans can learn from very little data

- We need models that do the same

- Ideally, we want the models to Learn how to Learn

Meta-Learning

- Two main types of meta-learning :

- Learn how to build the best model

- Structure meta-learning

- Build a model that learns quickly

- One-Shot Learning / Few-Shot Learning

- Learn how to build the best model

Structure Meta-Learning

- Problem : It's difficult for humans to build models

- Solution :

- Enable computers to build models

- By searching model architectures efficiently

- By predicting which architectures might work well

- Already seen the results...

NASNet Cells

- The NASNet structure is created

- ... by searching over architectures

- Better accuracy / speed / power tradeoffs than human-designed networks

( not the focus of this talk )

One-Shot Learning

- Humans can learn from few examples

- eg : "Hammer-Whisk"

One-Shot Learning

- Want a model that can also learn tasks quickly :

- Model should be trained on many tasks

- Each task will only have small amounts of data

( backtrack a little )

Regular-Learning

- Training set:

- A bunch of different classes

- Each class has sample images to learn

- Test set:

- Can the model classify a previously unseen image?

Meta-Learning

- Training set:

- A bunch of different tasks

- Each task is a different problem to learn

- Each of those problems has small amounts of data

- Test set:

- Can the model learn a previously unseen task quickly?

Hands-On : Meta-Learning

8-MetaLearning/

2-Reptile-Sines.ipynb

Reptile-Sines

- Learn to learn tasks quickly

- Each task in the meta-training set :

- Creates a 'sine wave' with random amplitude and phase

- Training data is just a few points

- Test whether new points can be predicted

- The meta-test checks whether the model can learn a single new task quickly

Reptile Model Search

Initialize Φ, the initial parameter vector

for iteration 1, 2, 3, … do

    Randomly sample a task T

    Perform k>1 steps of SGD on task T, starting with parameters Φ, resulting in parameters W

    Update: Φ ← Φ + ϵ(W−Φ)

end for

Return Φ
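The loop above can be sketched in plain NumPy on the sine-wave tasks. This is an illustrative sketch under stated assumptions, not the code from the workshop notebook. The network size (1 → 32 → 1 with tanh), the amplitude and phase ranges, the learning rates and every function name here are chosen only for the example.

```python
import numpy as np

def sample_task(rng):
    """A task is one sine wave with random amplitude and phase (ranges assumed)."""
    amp = rng.uniform(0.1, 5.0)
    phase = rng.uniform(0.0, np.pi)
    return lambda x: amp * np.sin(x + phase)

def init_params(rng, hidden=32):
    """Parameters of a 1 -> hidden -> 1 tanh network, as a list of arrays."""
    return [rng.normal(scale=0.5, size=(1, hidden)), np.zeros(hidden),
            rng.normal(scale=hidden ** -0.5, size=(hidden, 1)), np.zeros(1)]

def loss_and_grads(params, x, y):
    """Mean squared error and its gradients, via manual backpropagation."""
    W1, b1, W2, b2 = params
    h = np.tanh(x @ W1 + b1)
    err = (h @ W2 + b2) - y
    d_pred = 2.0 * err / len(x)
    d_h = (d_pred @ W2.T) * (1.0 - h ** 2)
    grads = [x.T @ d_h, d_h.sum(axis=0), h.T @ d_pred, d_pred.sum(axis=0)]
    return float((err ** 2).mean()), grads

def sgd_steps(params, x, y, k=5, lr=0.01):
    """Run k gradient steps on one task; the caller's params are left untouched."""
    params = [p.copy() for p in params]
    for _ in range(k):
        _, grads = loss_and_grads(params, x, y)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params

def reptile(phi, rng, iterations=1000, k=5, eps=0.1, n_points=10):
    """Outer loop: move phi a fraction eps towards each task's adapted weights W."""
    for _ in range(iterations):
        task = sample_task(rng)
        x = rng.uniform(-5.0, 5.0, size=(n_points, 1))  # just a few points per task
        W = sgd_steps(phi, x, task(x), k=k)
        phi = [p + eps * (w - p) for p, w in zip(phi, W)]
    return phi

rng = np.random.default_rng(0)
phi0 = init_params(rng)
phi = reptile(phi0, rng, iterations=1000)
```

To run a meta-test, one would call `sgd_steps(phi, x_new, y_new)` on a few points from an unseen sine wave and compare the fit with the same number of steps starting from `phi0`.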

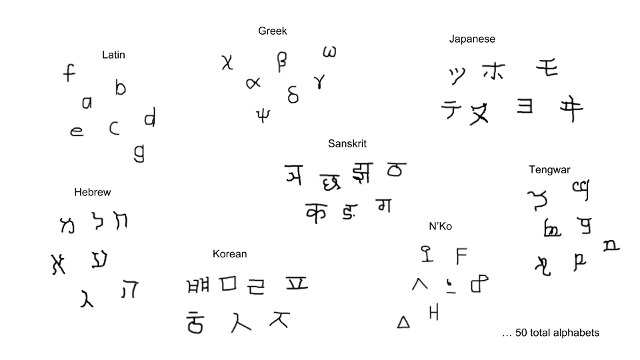

Hands-On : One-Shot Learning

Meta-Learning Demo - '3 boxes'

Omniglot Dataset

- 1623 different handwritten characters

- from 50 different alphabets

Omniglot Dataset

- Each of the 1623 characters

- was drawn by 20 different people

- Compare : MNIST

- 10 characters, drawn ~5000 times each

One-shot Classification

- Each task trains on 1 example for each of 3 classes :

- Model pre-meta-trained on Omniglot

- Actual model running in JavaScript

- The user provides the meta-test task

- ... works pretty well
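To make the task structure concrete, here is a rough sketch of how one 3-way, 1-shot task could be drawn from a dataset laid out like Omniglot (characters × drawers × image). It uses random arrays as a stand-in for the real images, and the 8x8 image size, the query set and the name `sample_episode` are assumptions for illustration only. It is not the code behind the demo.

```python
import numpy as np

def sample_episode(data, rng, n_way=3, k_shot=1, n_query=1):
    """Build one N-way, K-shot task from data shaped (classes, drawers, H, W)."""
    n_classes, n_drawers = data.shape[:2]
    classes = rng.choice(n_classes, size=n_way, replace=False)
    support_x, support_y, query_x, query_y = [], [], [], []
    for label, c in enumerate(classes):
        # Different drawers for the training and test examples of this character.
        drawers = rng.choice(n_drawers, size=k_shot + n_query, replace=False)
        support_x.append(data[c, drawers[:k_shot]])
        query_x.append(data[c, drawers[k_shot:]])
        support_y += [label] * k_shot
        query_y += [label] * n_query
    return (np.concatenate(support_x), np.array(support_y),
            np.concatenate(query_x), np.array(query_y))

rng = np.random.default_rng(0)
# Random stand-in with Omniglot's layout: 1623 characters, 20 drawers each.
# The 8x8 image size is an assumption that keeps the example small.
fake_omniglot = rng.random((1623, 20, 8, 8), dtype=np.float32)

sx, sy, qx, qy = sample_episode(fake_omniglot, rng)
print(sx.shape, sy, qx.shape, qy)
```

The labels are re-numbered 0 to 2 inside each task, so the model cannot memorise character identities and has to learn each task from its support examples.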

Wrap-up

- Field is advancing very rapidly

- Still within grasp of individuals

- Open source applies to research too

* Please add a star... *

Deep Learning MeetUp Group

- Next Meeting = ~19-April-2018

- Hosted by Google

- Typical Contents :

- Talk for people starting out

- Something from the bleeding-edge

- Lightning Talks

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

Deep Learning : Jump-Start Workshop

- Dates + Cost : ~S$600 (funding available for SG/PR) :

- Full day (week-end)

- Play with real models

- Get inspired!

- Pick-a-Project to do at home

- Regroup on subsequent week-nights

- See SGInnovate Event Page for details

8-week Deep Learning Developer Course

- 25 September to 25 November

- Twice-Weekly 3-hour sessions included :

- Instruction

- Individual Projects

- Support by WSG

- Location : SGInnovate

- Status : FINISHED!

?-week Deep Learning Developer Course

- Plan : Start in a few months

- Sessions will include :

- Instruction

- Individual Projects

- Support by SG govt (planned)

- Location : SGInnovate

- Status : TBA

- QUESTIONS -

Martin.Andrews @ RedCatLabs.com

Martin.Andrews @ RedDragon.AI

My blog : http://blog.mdda.net/

GitHub : mdda