WaveNet(s)

TensorFlow & Deep Learning SG

23 January 2018

About Me

- Machine Intelligence / Startups / Finance

  - Moved from NYC to Singapore in Sep-2013

- 2014 = 'fun' :

  - Machine Learning, Deep Learning, NLP

  - Robots, drones

- Since 2015 = 'serious' :: NLP + deep learning

  - & Papers...

  - & Dev Course...

Outline

- WaveNet v1

  - Why the excitement?

  - Key elements

  - Implementation & Demo

- Fast-WaveNet

- Parallel-WaveNet

  - Why the excitement?

  - Key elements

WaveNet v1

- DeepMind splash in Sept-2016 :

Key Elements

- Produce audio samples from network

- CNN with dilation

- Sigmoid gate of Tanh units

- Side-chains

- Output of distributions

- Computational burden

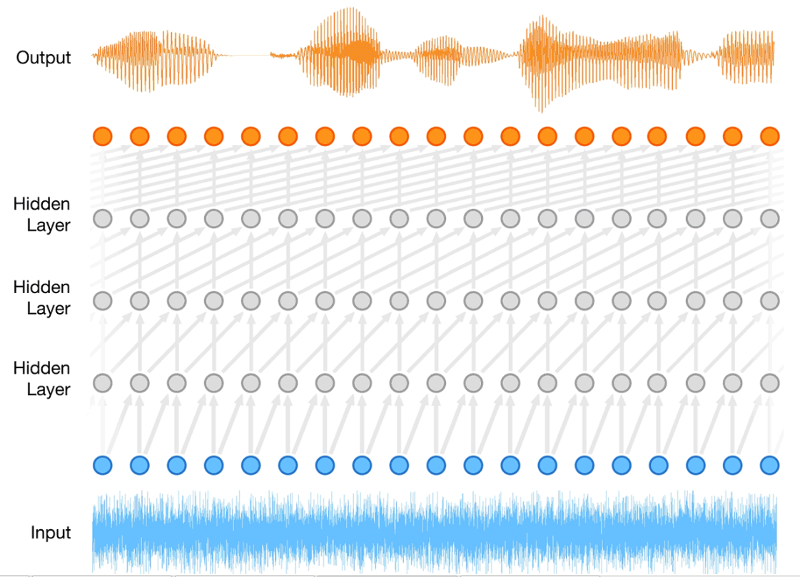

Audio samples from network

- Data output :

  - 16 kHz rate (now 24 kHz)

  - 8-bit μ-law (now 16-bit PCM)

- Very long time-dependencies :

  - Normal RNNs are limited to ~50 steps

  - Word features are 1000s of steps

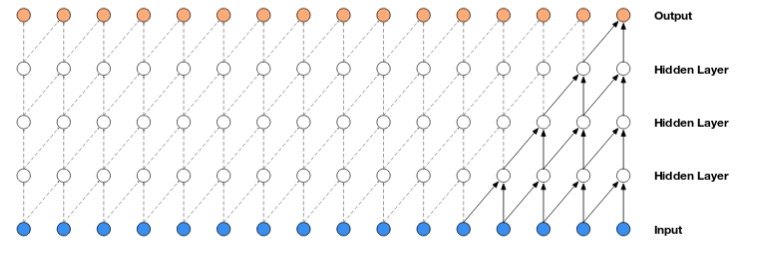
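A minimal sketch of the 8-bit μ-law step mentioned above, in plain Python. The function names and the rounding scheme are assumptions for illustration, not taken from the WaveNet code: each sample in [-1, 1] is compressed logarithmically and mapped onto one of 256 integer classes.

```python
import math

MU = 255  # 8-bit mu-law: classes 0..255


def mu_law_encode(x, mu=MU):
    """Map a sample in [-1, 1] to an integer class in [0, mu]."""
    x = max(-1.0, min(1.0, x))
    # Logarithmic compression: quiet samples get finer resolution
    y = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    # Quantise [-1, 1] onto the integers 0..mu (round to nearest)
    return int((y + 1.0) / 2.0 * mu + 0.5)


def mu_law_decode(k, mu=MU):
    """Map an integer class in [0, mu] back to a sample in [-1, 1]."""
    y = 2.0 * k / mu - 1.0
    return math.copysign(math.expm1(abs(y) * math.log1p(mu)) / mu, y)
```

The round trip is lossy: the error is tiny near zero and grows towards full scale, which is the trade-off that makes 256 classes usable for audio.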

Regular CNNs

Look at the 'linear footprint'

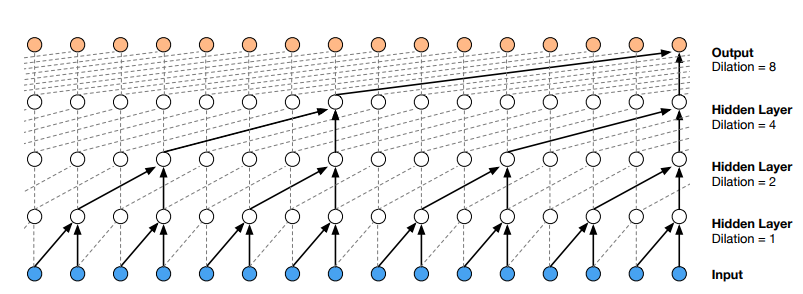

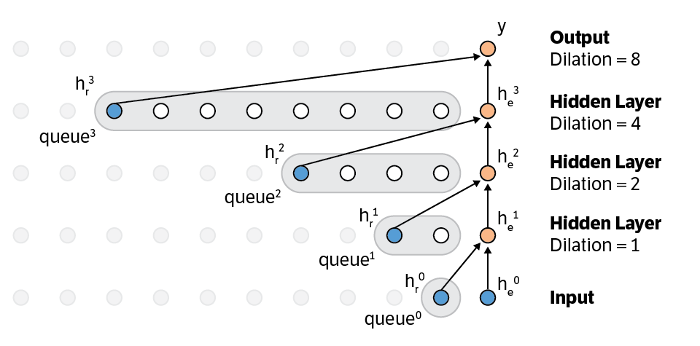

Dilated CNNs

Look at the 'exponential footprint'
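The 'linear' versus 'exponential' footprint can be checked with a few lines of arithmetic. This is a sketch assuming causal convolutions with kernel size 2 and dilations that double per layer; `receptive_field` is a hypothetical helper name.

```python
def receptive_field(dilations, kernel_size=2):
    """Input samples visible to one output of a stack of causal convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# 10 regular layers: footprint grows by one sample per layer
regular = receptive_field([1] * 10)

# 10 dilated layers with dilations 1, 2, 4, ..., 512: footprint doubles per layer
dilated = receptive_field([2 ** i for i in range(10)])

print(regular, dilated)  # 11 1024
```

Same depth, same number of weights per layer, but roughly 100x the look-back.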

CNNs Pro/Con

- Advantages :

-

- Can have very long 'look back'

- Fast to train (see later)

- Disadvantages :

-

- No 'next sample' scheme

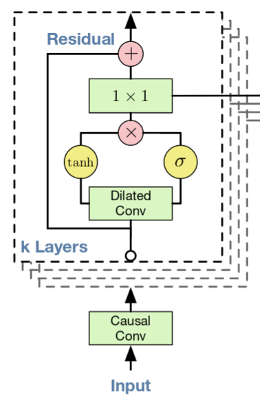

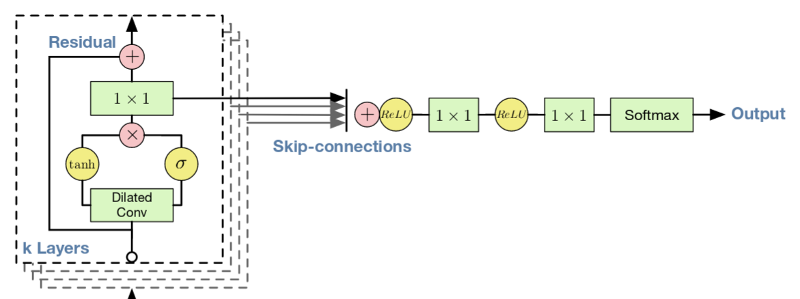

Sigmoid gate of Tanh units

Each CNN node has some complexity

- includes Gating and ResNet idea
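A single-channel sketch of the gating and ResNet idea for one time-step, assuming kernel size 2. The weight names in `w` are made up for illustration; a real layer uses multi-channel convolutions.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gated_residual_unit(x_prev, x_now, w):
    """One time-step of a toy gated unit; w is a dict of hypothetical scalar weights."""
    # 'Filter' path: tanh of a 2-tap convolution
    filt = math.tanh(w["f0"] * x_prev + w["f1"] * x_now)
    # 'Gate' path: sigmoid of a second 2-tap convolution
    gate = sigmoid(w["g0"] * x_prev + w["g1"] * x_now)
    z = filt * gate                       # gate decides how much of the tanh passes
    residual_out = x_now + w["res"] * z   # ResNet idea: add the input back
    skip_out = w["skip"] * z              # contribution to the sideways (skip) path
    return residual_out, skip_out
```

With all weights at zero the unit is an identity on the residual path, which is what makes deep stacks easy to train.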

Side-chains

Actual 'output' is fed from sideways connections from all layers

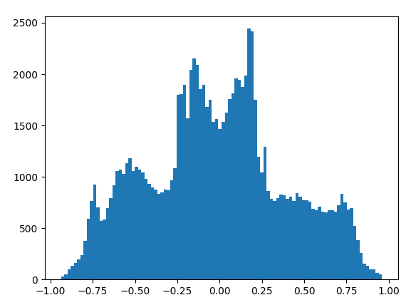

Output of distributions

- Instead of raw audio :

-

- Output a complete distribution for each timestep

- 256x as much work

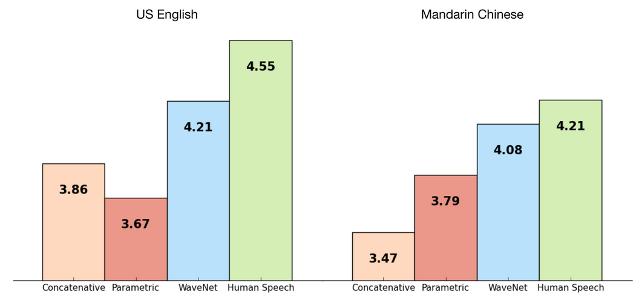

- ... seems crazy, but for the results ...
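A sketch of what 'output a complete distribution' means for one timestep, assuming 256 classes (the 8-bit case): the network emits 256 logits, a softmax turns them into probabilities, and one class is sampled. The logits here are invented for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_class(probs, rng):
    """Draw one class index according to the given probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


rng = random.Random(0)
logits = [0.0] * 256   # made-up network output for one timestep
logits[128] = 5.0      # the toy 'network' favours class 128 (near silence)
probs = softmax(logits)
k = sample_class(probs, rng)   # integer in 0..255, decoded to audio afterwards
```

Sampling from the distribution, rather than always taking the most likely class, is a design choice in this sketch.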

Computational burden

- Training is QUICK :

  - All timesteps have known next training samples

- Inference / Running is SLOW :

  - 1 sec of output = 1 minute of GPU
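A toy illustration of the asymmetry, with `toy_model` as a hypothetical stand-in for the network. In training every prefix of the waveform is already known, so all predictions are independent; in generation each call needs the previous output. The first lines turn the slide's figure into a per-sample budget, assuming the 16 kHz rate.

```python
SAMPLE_RATE = 16_000                 # samples per second of audio
GPU_SECONDS_PER_AUDIO_SECOND = 60    # '1 sec of output = 1 minute of GPU'
ms_per_sample = 1000 * GPU_SECONDS_PER_AUDIO_SECOND / SAMPLE_RATE  # 3.75 ms


def toy_model(history):
    """Hypothetical stand-in for the network: next sample from the last two."""
    a = history[-1] if len(history) >= 1 else 0.0
    b = history[-2] if len(history) >= 2 else 0.0
    return 0.5 * a + 0.25 * b


def training_predictions(waveform):
    # Every prefix is known up front: these calls do not depend on each other,
    # so a real framework can evaluate them all in one parallel pass.
    return [toy_model(waveform[:t]) for t in range(len(waveform))]


def generate(n, seed):
    out = list(seed)
    for _ in range(n):
        # Strictly sequential: each step consumes the previous step's output
        out.append(toy_model(out))
    return out[len(seed):]
```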

Implementations

- TensorFlow :

  - ibab code (most commonly referenced)

- Magenta :

  - Blog (quick aside : cool projects!)

  - NSynth (includes WaveNet)

  - Actual Code on GitHub

SG Implementation

- Includes the latest hotness :

  - Dataset API for TFRecords streaming from disk

  - Keras model → Estimator

  - One Notebook end-to-end

github.com / mdda / deep-learning-workshop

/notebooks/2-CNNs/8-Speech/

SpeechSynthesis_MelToComplexSpectra.ipynb

Fast WaveNet

- Optimise run-time :

  - Paper : Fast Wavenet Generation Algorithm

  - Actual Code on GitHub

- But intrinsic sequential nature remains...

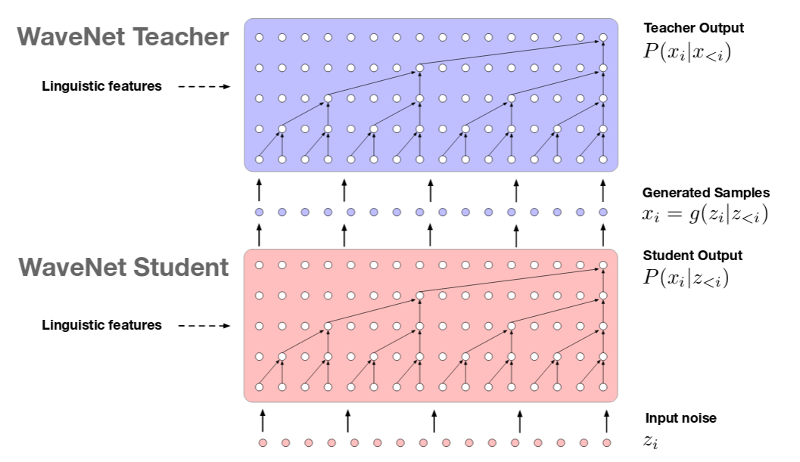
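A sketch of the caching idea, under simplifying assumptions: single channel, kernel size 2, linear layers, made-up weights. Each layer keeps a queue of its last `d` inputs, so one generation step touches each layer once instead of recomputing the whole history. `naive_output` is the recompute-everything baseline for comparison.

```python
from collections import deque

DILATIONS = [1, 2, 4]                              # hypothetical small stack
WEIGHTS = [(0.5, 0.5), (0.3, 0.7), (-0.2, 0.9)]    # (w_past, w_now), made up


def naive_output(inputs):
    """Recompute every layer over the full history; return the newest output."""
    signal = list(inputs)
    for d, (w_past, w_now) in zip(DILATIONS, WEIGHTS):
        signal = [
            w_past * (signal[t - d] if t >= d else 0.0) + w_now * signal[t]
            for t in range(len(signal))
        ]
    return signal[-1]


class CachedStack:
    def __init__(self):
        # One queue per layer holding that layer's last d inputs (zeros = padding)
        self.queues = [deque([0.0] * d, maxlen=d) for d in DILATIONS]

    def step(self, x):
        """Push one new input sample through the stack using the cached values."""
        for queue, (w_past, w_now) in zip(self.queues, WEIGHTS):
            past = queue[0]    # this layer's input from d steps ago
            queue.append(x)    # maxlen drops the oldest entry automatically
            x = w_past * past + w_now * x
        return x
```

Both routes give the same output; the cached one does constant work per layer per sample, but still one sample at a time.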

Parallel WaveNet

- Another 'Big Splash' in Oct-2017 :

  - Blog Post with Assistant announcement

  - Only a teaser explanation of why it is now practical

- Followed up with more detail in Nov-2017 :

Goal = Parallel

New Element

Noise → Distribution → Sample → Distribution

(optimise for distributions being the same)
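A one-timestep toy of the Noise → Distribution → Sample → Distribution chain, assuming both student and teacher output a single logistic distribution given as (mu, s). The student turns noise into a sample, the teacher scores that sample, and the quantity to minimise is a Monte-Carlo estimate of KL(student || teacher). All names and parameter values are illustrative, not the published training setup.

```python
import math
import random


def logistic_log_pdf(x, mu, s):
    """Log-density of a logistic distribution with location mu and scale s."""
    v = (x - mu) / s
    return -v - math.log(s) - 2.0 * math.log1p(math.exp(-v))


def sample_logistic_noise(rng):
    """Standard logistic noise via the inverse CDF (clamped away from 0 and 1)."""
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
    return math.log(u) - math.log(1.0 - u)


def kl_student_to_teacher(student, teacher, n=5000, seed=0):
    """Monte-Carlo estimate of KL(student || teacher) for (mu, s) pairs."""
    rng = random.Random(seed)
    mu_s, s_s = student
    mu_t, s_t = teacher
    total = 0.0
    for _ in range(n):
        z = sample_logistic_noise(rng)   # Noise
        x = mu_s + s_s * z               # Student distribution -> Sample
        # Compare the student's own density with the teacher's at that sample
        total += logistic_log_pdf(x, mu_s, s_s) - logistic_log_pdf(x, mu_t, s_t)
    return total / n
```

The estimate is zero when the two distributions match and positive otherwise. Since every `x` comes straight from noise, no step waits on a previous sample, which is where the parallelism comes from.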

Visual Demo

Wrap-up

- WaveNet started out as very good but very expensive

- ... but that proved it was worth optimising

- Lots of opportunity for innovation

Deep Learning MeetUp Group

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

- Next Meeting :

  - 22-Feb-2018 hosted by Google

- Typical Contents :

  - Talk for people starting out

  - Something from the bleeding-edge

  - Lightning Talks

BONUS!

- Now FREE from Google via Kaggle (aka Colab) :

  - 12hrs-at-a-time of K80 GPU

  - Google Colab for Fast.ai Medium posting

( Don't use for Mining )

Quick Poll

- Show of Hands :

  - More in-depth on Eager Mode?

  - Text to Speech race (Tacotron, DeepVoice, etc)?

  - Speech to Text (ASR) game?

  - CloudML?

  - Latent space tricks?

  - Knowledge base access?

Deep Learning Back-to-Basics

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

- Next Meeting :

  - 6-March-2018 hosted by SGInnovate

- Typical Contents :

  - Talks for people starting out

  - Hopefully not 'typical'

  - Questions welcome

8-week Deep Learning Developer Course

- 25-September to 25-November

- Twice-Weekly 3-hour sessions included :

  - Instruction

  - Individual Projects

  - Support by WSG

- Location : SGInnovate

- Status : FINISHED!

?-week Deep Learning Developer Course

- Plan : Start 2018-Q1

- Sessions will include :

  - Instruction

  - Individual Projects

  - Support by WSG (planned)

- Location : SGInnovate

- Status : TBA

Deep Learning : Beginner Course

- Dates + Cost : TBA ::

  - Full day (week-end)

  - Play with real models

  - Get inspired!

  - Pick-a-Project to do at home

  - 1-on-1 support online

  - Regroup on a week-night

- http://bit.ly/2zVXtRm

- QUESTIONS -

Martin.Andrews @ RedCatLabs.com

Martin.Andrews @ RedDragon.AI

My blog : http://blog.mdda.net/

GitHub : mdda