Tips and Tricks

TensorFlow & Deep Learning SG

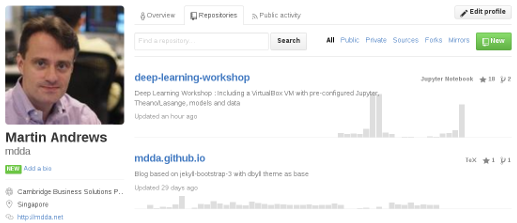

Martin Andrews @ redcatlabs.com

20 July 2017

About Me

- Machine Intelligence / Startups / Finance

  - Moved from NYC to Singapore in Sep-2013

- 2014 = 'fun' :

  - Machine Learning, Deep Learning, NLP

  - Robots, drones

- Since 2015 = 'serious' :: NLP + deep learning

  - & Papers...

Show-of-Hands

No other obligation...

- Been here before?

- Want something easy?

- Want something advanced?

- Watch Engineers.sg?

- Listen to podcasts?

- Want another Beginners Day?

Outline

- Beginner / Newbie

- Day-to-Day user

- Research...

Beginner / Newbie

- Starting Out

- Installation

- Size vs Structure

- Sizing & Complexity

Starting Out

- Lots to watch & read & watch & read & ...

- Better : Set a deadline, and do something

- Install → MNIST → Blog+GitHub

Installation

- Windows vs OSX vs Linux

- Find newest instructions

- Upgrade ⇒ breakage

GitHub models

- Is this the best starting point?

- Is this using the same versions?

- Don't underestimate "taste"

Size vs Structure

- Common concerns :

  - How many layers?

  - How wide?

  - What activation function?

- Better to look at bigger picture

Sizing

- Get something that works :

  - Train for ~15mins

  - Should show improvement

- Make small, but definite changes

- Prove that it has an effect

Complexity

- Do a back-of-envelope estimate :

  - 1 training example per parameter

  - Keep images small

  - Can you do the task in <1 sec?

- Keras : `model.summary()`

- Natural Language Processing :

  - Embeddings : 50d should work

  - Vocab >8k is nice-to-have
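The back-of-envelope estimate can be done by hand before building anything. The sketch below is only an illustration: `dense_param_count` is a hypothetical helper, and the layer sizes are an assumed MNIST-sized example rather than a model from the talk. For a built Keras model, `model.summary()` reports the same kind of per-layer and total parameter counts.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases for a fully-connected net with the given layer widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


# Assumed example: 28x28 inputs, one hidden layer of 64 units, 10 output classes
n_params = dense_param_count([28 * 28, 64, 10])

# MNIST has 60,000 training images, so this is roughly 1 example per parameter
n_examples = 60000
examples_per_param = n_examples / n_params

# NLP case from the slide: an 8k vocabulary with 50d embeddings
embedding_params = 8000 * 50

print(n_params, round(examples_per_param, 2), embedding_params)
```

The embedding table alone is several times larger than the whole dense network here, which is one reason to keep the embedding dimension modest when data is limited.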

Day-to-Day

- Simple → Complex

- CPU vs GPU

- Improving model performance

- Understand limitations

- Complex → Simple

Simple → Complex

- Errors very difficult to reason about

- Make sure model works at every step

- When in trouble : Make it trivial

See code example
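The code example shown in the talk is not reproduced here. As a stand-in, here is a minimal sketch of "make it trivial": swap the real data for a problem with a known answer and confirm that the training loop can solve it. The function name `train_linear` and the toy data are assumptions made for illustration only.

```python
def train_linear(xs, ys, lr=0.5, epochs=200):
    """Fit y ~ w*x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(epochs):
        errs = [(w * x + b) - y for x, y in zip(xs, ys)]
        losses.append(sum(e * e for e in errs) / n)
        grad_w = 2.0 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2.0 * sum(errs) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


# Trivial data with a known answer: y = 2x + 1, no noise
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b, losses = train_linear(xs, ys)
print(w, b, losses[0], losses[-1])
```

If the loop cannot recover `w` close to 2 and `b` close to 1 on data like this, the bug is in the plumbing rather than in the model design.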

CPU vs GPU

- Experiment cycle speed is key

- No GPU to play with?

  - If this is 'serious' then spend the money

  - One local GPU, use cloud for more

- Keras : `model.fit_generator()`
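`model.fit_generator()` takes a Python generator that yields `(inputs, targets)` batches indefinitely, so batch preparation can happen on the CPU while the model trains. Below is a minimal sketch of such a generator using plain lists. `batch_generator` and the toy data are hypothetical, and in real Keras use the batches would normally be NumPy arrays.

```python
import random


def batch_generator(inputs, targets, batch_size, seed=0):
    """Yield shuffled (inputs, targets) batches forever, dropping any remainder."""
    rng = random.Random(seed)
    indices = list(range(len(inputs)))
    while True:  # generators used for training are expected to loop forever
        rng.shuffle(indices)
        for start in range(0, len(indices) - batch_size + 1, batch_size):
            chosen = indices[start:start + batch_size]
            yield ([inputs[i] for i in chosen], [targets[i] for i in chosen])


# Toy data: the target is the parity of the input value
inputs = [[float(i)] for i in range(10)]
targets = [i % 2 for i in range(10)]

gen = batch_generator(inputs, targets, batch_size=4)
first_x, first_y = next(gen)
print(first_x, first_y)

# With a compiled Keras 2 model (assumed, not built here) the call would look like:
#   model.fit_generator(gen, steps_per_epoch=len(inputs) // 4, epochs=5)
```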

Improving model performance

- `DropOut` (generalisation via ensembles)

- `BatchNorm`

  - or 'cleaner' `LayerNorm`

  - or simple `WeightNorm` (parameter-free)

- Adaptive gradient methods

  - `Adam`, `Adadelta`, etc

  - `RMSProp` is ~ `BatchNorm` for gradients

- Hyperparameter search? Meh
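One way to read the comparison between RMSProp and BatchNorm: RMSProp divides each gradient by a running estimate of its root-mean-square, so the step size hardly depends on the raw gradient scale. The sketch below is a plain-Python version of the standard RMSProp update on a one-dimensional quadratic. `rmsprop_minimise` and the two test functions are assumptions made for illustration, not code from the talk.

```python
import math


def rmsprop_minimise(grad_fn, x0, lr=0.01, decay=0.9, eps=1e-8, steps=500):
    """Minimise a 1-D function from its gradient using the RMSProp update."""
    x = x0
    mean_sq = 0.0  # running average of squared gradients
    for _ in range(steps):
        g = grad_fn(x)
        mean_sq = decay * mean_sq + (1.0 - decay) * g * g
        x -= lr * g / (math.sqrt(mean_sq) + eps)  # gradient normalised by its RMS
    return x


# Same minimum at x = 3, but gradient scales that differ by a factor of a million
x_steep = rmsprop_minimise(lambda x: 2000.0 * (x - 3.0), x0=0.0)
x_shallow = rmsprop_minimise(lambda x: 0.002 * (x - 3.0), x0=0.0)
print(x_steep, x_shallow)
```

Plain gradient descent with a single learning rate would diverge on the steep function or barely move on the shallow one. With the normalised step, both runs end up near the minimum.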

Understand Limitations

- Vary network structure

- Have a hypothesis before you run

- Explain what went wrong

Complex → Simple

- When something works super-well

  - Try to destroy it

  - Smaller may be better...

  - Or the NN may be cheating

Research

- Implement papers

- Reproducibility

- Dare to Succeed

Implement Papers

- À la Andrew Ng

- Read → Implement → Improve

- Publish on arXiv & conference

Reproducibility

- Fix baseline data and model

- Random Seed(s)

- Add a `layout` parameter

- Don't forget how to program

github.com/mdda/deep-learning-workshop/notebooks/2-CNN/7-Captioning/4-run-captioning.ipynb
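For the "Random Seed(s)" point, here is a minimal sketch of the idea using only the Python standard library. `noisy_experiment` is a hypothetical stand-in for a training run. A real project would also need to seed every other source of randomness it uses, such as NumPy and the deep learning framework.

```python
import random


def noisy_experiment(seed, n=5):
    """Stand-in for a training run: all randomness flows from one seeded generator."""
    rng = random.Random(seed)  # private generator, independent of global state
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


run_a = noisy_experiment(seed=42)
run_b = noisy_experiment(seed=42)  # same seed: identical results
run_c = noisy_experiment(seed=43)  # different seed: a different run

print(run_a == run_b, run_a == run_c)
```

Recording the seed alongside each result makes it possible to re-run a specific experiment and to tell real improvements from seed-to-seed noise.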

Dare to Succeed

- Deep Learning is super-new

- Need something harder than MNIST...

- No need to be in MAFIA's shadow

Wrap-up

- Beginners : Start!

- Day-to-day : Experimentation

- Research : Don't be timid

- QUESTIONS -

Martin.Andrews @ RedCatLabs.com

My blog : http://mdda.net/

GitHub : mdda

Deep Learning MeetUp Group

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore

- Next Meeting :

  - XX-August-2017 @ Google : Topic == "Mobile"

- Typical Contents :

  - Talk for people starting out

  - Something from the bleeding-edge

  - Lightning Talks

8-week Deep Learning Developer Course

- Sept - Oct+

- 2x Weekly 3-hour sessions will include :

  - Instruction

  - Projects : 3 structured & 2 self-directed

- Pre-register : http://RedCatLabs.com/course

- ( more later )