Extracting Names using RNNs

PyDataSG Jan-2017

Martin Andrews @ redcatlabs.com

23 January 2017

About Me

- Machine Intelligence / Startups / Finance

-

- Moved from NYC to Singapore in Sep-2013

- 2014 = 'fun' :

-

- Machine Learning, Deep Learning, NLP

- Robots, drones

- Since 2015 = 'serious' :: NLP + deep learning

-

- & Papers...

RNNs for NLP

- Natural Language Processing

- Neural Networks

-

- Basics

- Word Embeddings

- Recurrent Neural Networks

- Workshop : UPPER-CASE NER

- More exotic examples

Natural Language Processing

- Work with text input

- Applications in :

-

- Text Analysis

- Translation

- Knowledge Extraction

Digression : Basic Neural Networks

- Basic building blocks are not magical

- Simple mathematical units ...

- ... combine to compute a complex function

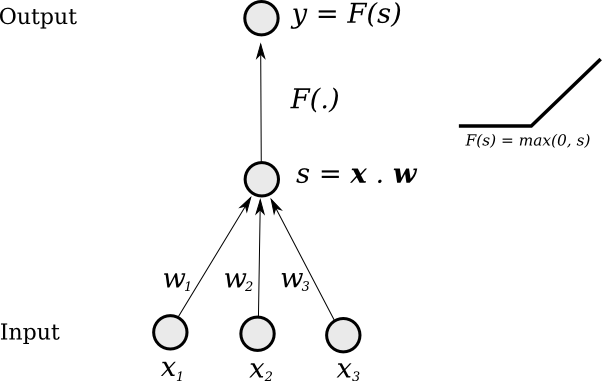

Single "Neuron"

Change weights to change output function

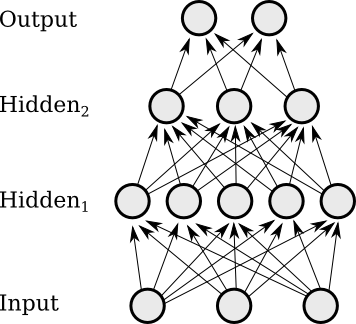

Multi-Layer

Layers of neurons combine to

form more complex functions

Supervised Learning

- while not done :

-

- Pick a training case (

x→target_y) - Evaluate

output_yfrom thex - Modify the weights so that

output_yis closer totarget_yfor thatx

- Pick a training case (

Backpropagation

- How much 'blame' to assign to a weight?

- If it is connected to an output : Easy

- Otherwise, calculate :

-

- Blame to assign to each neuron in layer before output

- Treat each of these errors as a source of error signal

- Walk back through network

- Same complexity as forward pass

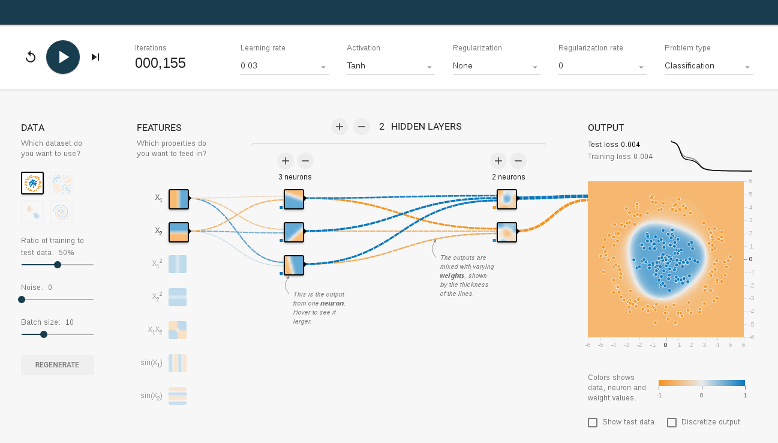

What's Going On Inside?

- Time to look at :

-

- Input features

- What each neuron is learning

- How the training converges

Workshop : Internals

-

- Go to the Javascript Example : TensorFlow

(or search online for TensorFlow Playground)

TensorFlow Playground

NNs for Images

- Add 'convolutions' and depth

- Can map images to classes

NNs for NLP?

- Problem : Is a word a feature?

- Problem : How long is a sentence?

Handling Words

- English ~100k words

- A 'one-hot' input seems wasteful

- Learn about word inter-relationships from corpus?

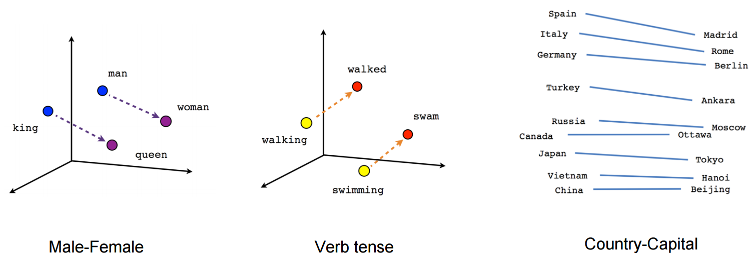

Word Embeddings

- Major advances : word2vec & GloVe

- Basic idea : assign a vector (~300d) to each word

- If words co-occur in sliding window across text, vectors should be close too

- Keep iterating until 'good enough'

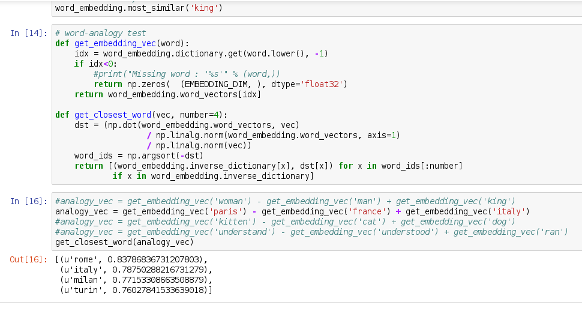

Word Embedding

- A map from

"token"→Float[100] - Train over corpus on windows of words

- Self-organizes...

(eg: word2vec or GloVe)

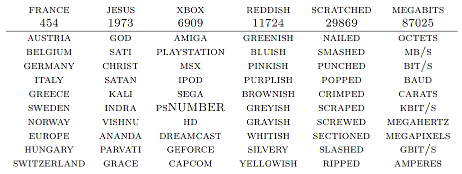

Embedding Visualisation

Highlighting Analogies

Networks on Sequences

Variable-length input doesn't "fit"

- Process each timestep

- ... with the same network

- But 'pass along' internal state

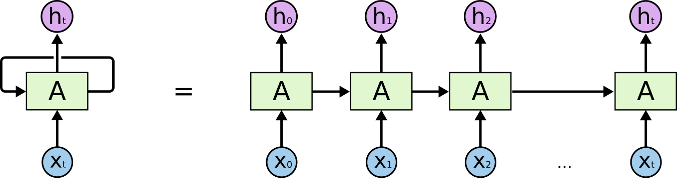

Recurrent Neural Networks

- Apply a network iteratively over input

- Internal state carried forward step-wise

- Everything is still differentiable

Basic RNN

RNN chain

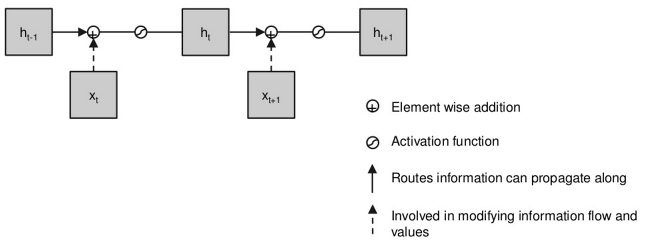

Chaining State

- Each node 'knows' history

- ... all weights are 'tied'

- Network depth is time-wise

Basic RNN

Simplest RNN (but has gradient problem)

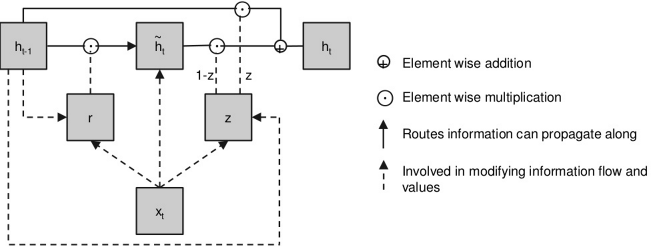

Gated Recurrent Units

A GRU

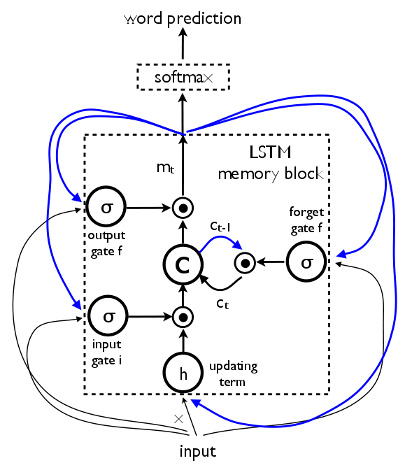

LSTM Units

A Long Short-Term Memory (LSTM) Unit

Piled Higher and Deeper

- Can also pile up layers

- ... and run forwards and backwards

Key Point

- Everything is still differentiable

- So : RNNs can be trained

- So : RNNs can learn to do NLP

- QUESTIONS -

NER : Motivation

- Build a quality NLP system for a Singapore startup

- Essential component : Named Entity Recognition (NER)

- Cannot use most existing NLP/NER systems

- Cannot use expensive data

NER : Quick Example

- Transform :

-

- Soon after his graduation, Jim Soon became Managing Director of Lam Soon.

- Into :

-

- Soon after his graduation,

Jim_Soon PERbecame Managing Director ofLam_Soon ORG.

- Soon after his graduation,

Learning Named Entity Recognition

- Can we train an RNN to do 'NER'?

- Steps :

-

- Create a word embedding

- Get an annotated dataset

- Train an RNN on the dataset

Choosing a Dataset

- Human annotated Corpora are difficult to distribute :

-

- Use NLTK to annotate Wikipedia

- Train RNN on machine annotations

- Look at performance vs NLTK

Let's Make it "Interesting"

- Twist : Restrict RNN input to single case text

9-RNN-Tagger

Learning to do ~NER

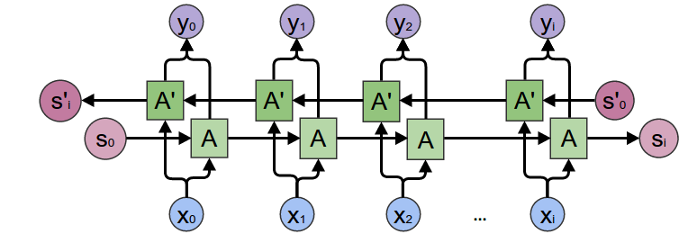

Network Picture

Bidirectional RNN

- QUESTIONS -

More Exotic

- Sentences as Input == Working

- Can we Output sentences too?

- Where can this lead?

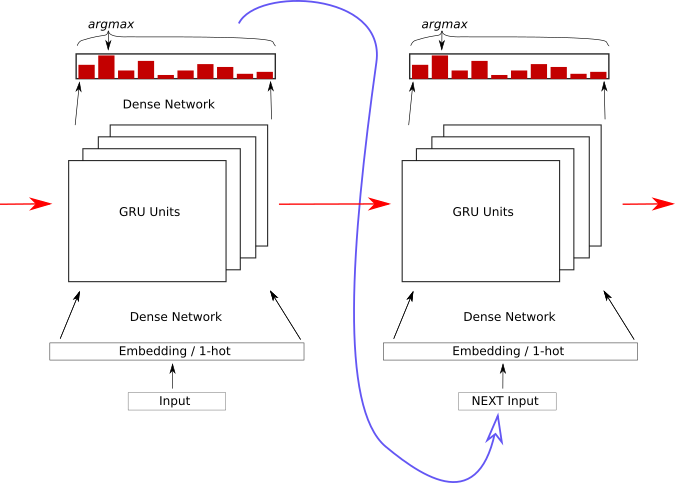

Feed Outputs to Inputs

- Each node outputs vector

- Can softmax-choose 'answer'

- Feed that 'answer' in as next input

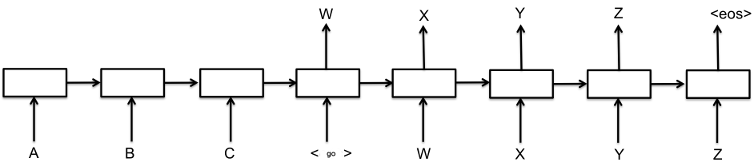

Network Picture

Each output becomes the next input

8-Natural-Language

Still a work-in-progress (training takes too long)

Poetry : Epoch 1

JDa&g#sdWI&MKW^gE)I}<UNK>f;6g)^5*|dXdBw6m\2&XcXVy\ph8G<gAM&>e4+mv5}OX8G*Yw9&n3XW{h@&T\Fk%BPMMI

OV&*C_] ._f$v4I~$@Z^&[2

mOVe`4W)"L-KClkO]wu]\$LCNadyo$h;>$jV7X$XK#4,T(y"sa6W0LWf\'_{\#XD]p%ck[;O`!Px\#E>/Or(.YZ|a]2}q|@a9.g3nV,U^qM $+:nlk0sd;V-Z&;7Y@Z "l-7P^C

"xBF~~{n} n\ Pcbc9f?=y)FIc1h5kvjIi

C<UNK>s DWJr_$ZQtu"BTYm'|SMj-]Z<Vqj*.lh%IYW|q.GK:eNI"r>833?+RuUsOj_)a{\T}gH.zZR^(daC3mg5P0iFi]bqGo4?T|\>0_H&g889voTh=~)^DDRYND46z1J]x;<U>>%eNIRckL)N8n<UNK>n3i)+Ln8

?)9.#s7X]}$*sxZ"3tf ")

@'HW.;I5)C.*%}<jcNLN+Z__RWoryOb#

/`r

Poetry : Epoch 100

Som the riscele his nreing the timest stordor hep pIs dach suedests her, so for farmauteds?

By arnouy ig wore

Thou hoasul dove he five grom ays he bare as bleen,

The seend,

And, an neeer,

Whis with the rauk with, for be collenss ore his son froven faredure:

Then andy bround'd the CowE nom shmlls everom thoy men ellone per in the lave ofpen the way ghiind, thour eyes in is ple gull heart sind, I I wild,

Frreasuce anspeve, wrom fant beiver, not the afan

And in thou' histwish a it wheme-tis lating ble the liveculd;

Noorroint he fhallought, othelts.

Poetry : Epoch 1000

AWhis grook my glass' to his sweet,

Bub my fears liken?

And of live every in seedher;

A Lood stall,

But tare tought than thencer sud earth,

Use'st bee sechion,

For all exprit' are a daud in heaven doth her infook perust the fork the tent.

For maud,

The pittent gover

This and rimp,

Who new

Thoir oldes and did hards, cound.

Plays : Epoch 338

Larger network...

DEDENIUS Why shoulmeying to to wife,

And thou say: and wall you teading for

that struke you down as sweet one.

With be more bornow, bly unjout on the account:

I duked you did four conlian unfortuned drausing-

to sicgia stranss, or not sleepplins his arms

Gentlemen? as write lord; gave sold.

AENEMUUNS Met that will knop unhian, where ever have

of the keep his jangst?icks he I love hide,

Jach heard which offen, sir!'

[Exit PATIIUS, MARGARUS arr [Enter CLOTHUR]

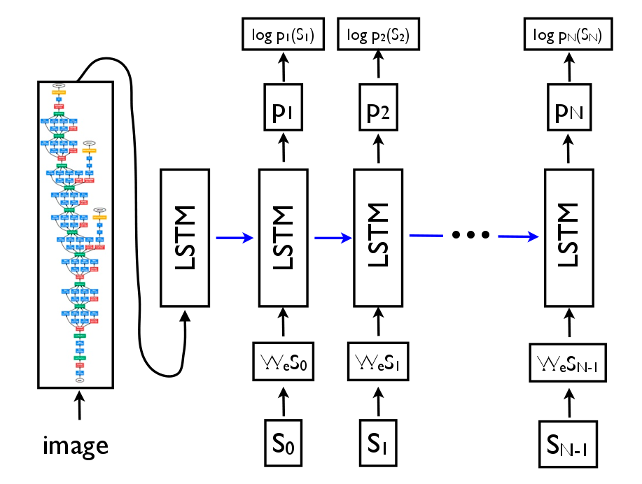

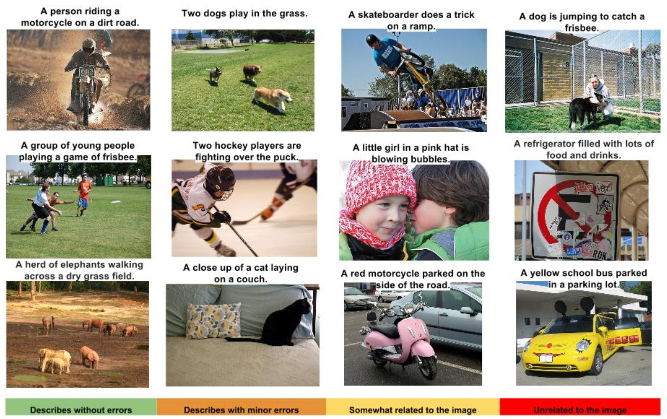

Image Labelling

We have the components now

Image Labels

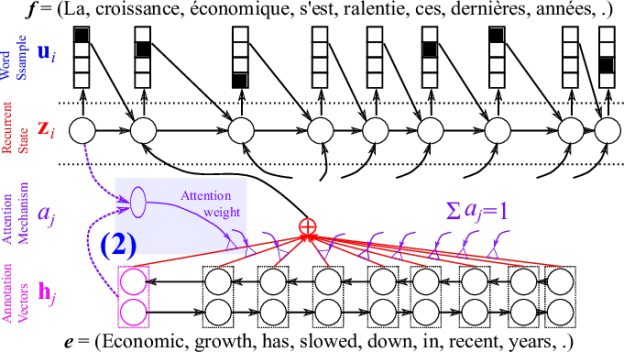

Sequence-to-Sequence

Encoder Network feeds hidden state to Decoder Network

Attention Network

Differentiable → Training : Even crazy stuff works!

- QUESTIONS -

Conclusion

- Deep Learning also applicable to NLP

- Language structure not as 'natural' as images

- Still in its early stages

* Please add a star... *

Feedback

Martin.Andrews @

RedCatLabs.com

My blog : http://mdda.net/

GitHub : mdda

*NEW* Deep Learning MeetUp Group

- "TensorFlow and Deep Learning Singapore"

-

- Sponsored by Google

- First Meeting = 16-Feb-2017 :

-

- Introduction to TensorFlow

- TensorFlow Summit recap

- Something from the bleeding-edge

Hiring

- Full-Time

-

- 'Commercial NLP'

- Intern

-

- Prototyping, demos, etc