Captioning

TensorFlow & Deep Learning SG

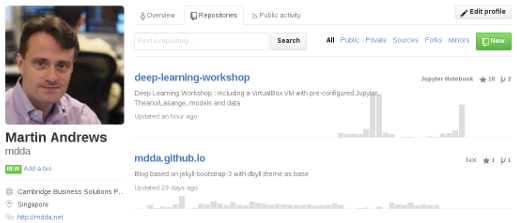

Martin Andrews @ redcatlabs.com

22 June 2017

About Me

- Machine Intelligence / Startups / Finance
  - Moved from NYC to Singapore in Sep-2013
- 2014 = 'fun' :
  - Machine Learning, Deep Learning, NLP
  - Robots, drones
- Since 2015 = 'serious' : NLP + deep learning
  - & Papers...

Outline

- Intro to tools :
  - Dense, CNN, RNN, Embedding

- Goal : Captioning

- Sequence Learning

- Embedding choice?

- Model choice!

- Demo (with voice-over)

Quick Review

- Basic Neuron : Simple computation

- Layers of Neurons : Feature creation

http://redcatlabs.com/2017-03-20_TFandDL_IntroToCNNs/

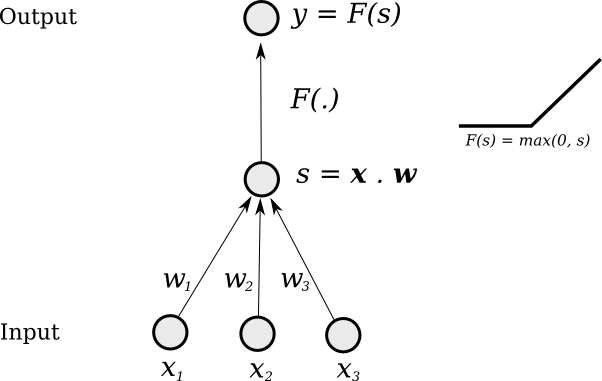

Single "Neuron"

Change weights to change output function

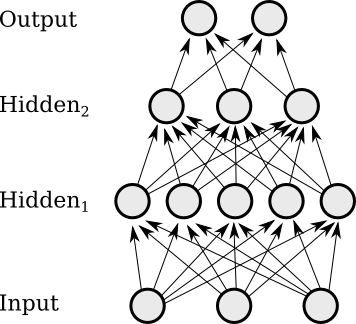

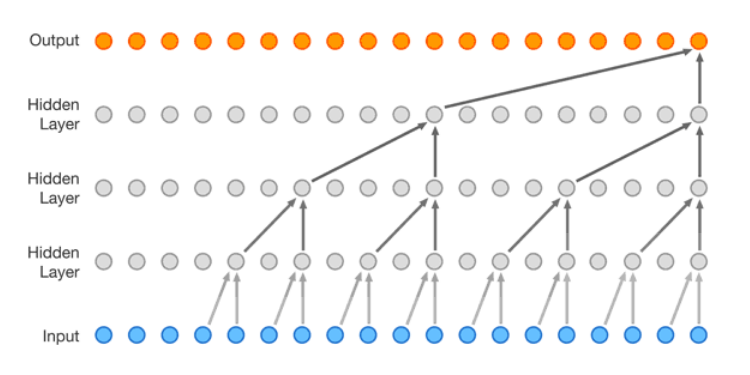
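
A minimal NumPy sketch of the single neuron, assuming a sigmoid activation (the slide does not name one). The same input gives different outputs as the weights change:

```python
import numpy as np

def neuron(x, w, b):
    """One artificial neuron: weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = np.dot(w, x) + b
    return 1.0 / (1.0 + np.exp(-z))

x = np.array([1.0, 2.0])
# Same input, different weights -> different output
print(neuron(x, np.array([0.5, -0.25]), 0.0))  # z = 0.0 -> 0.5
print(neuron(x, np.array([1.0, 1.0]), 0.0))    # z = 3.0 -> ~0.953
```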

Multi-Layer

Layers of neurons combine and can form more complex functions

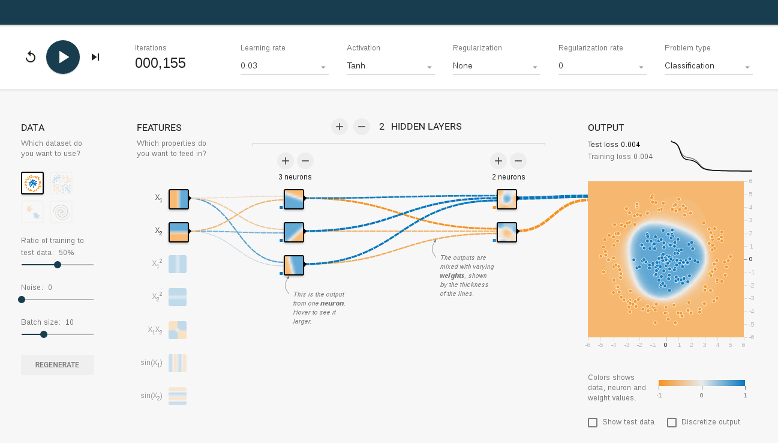
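
As an illustration, XOR cannot be computed by one neuron, but two layers can. This sketch uses a step activation and hand-picked weights (not learned) purely to show hidden units combining into a more complex function:

```python
import numpy as np

def step(z):
    return (z > 0).astype(float)

def two_layer_xor(X):
    # Hidden layer: unit 1 behaves like OR, unit 2 like AND
    W1 = np.array([[1.0, 1.0],
                   [1.0, 1.0]])
    b1 = np.array([-0.5, -1.5])
    H = step(X @ W1.T + b1)
    # Output layer: "OR and not AND" = XOR
    w2 = np.array([1.0, -1.0])
    b2 = -0.5
    return step(H @ w2 + b2)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(two_layer_xor(X))  # [0. 1. 1. 0.]
```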

TensorFlow Playground

http://playground.tensorflow.org/

Main take-aways

- Goal : Predict Output for a given Input

- Train using known Input and Output data

- The blame game (aka Gradient Descent)

- Deep networks 'create' features

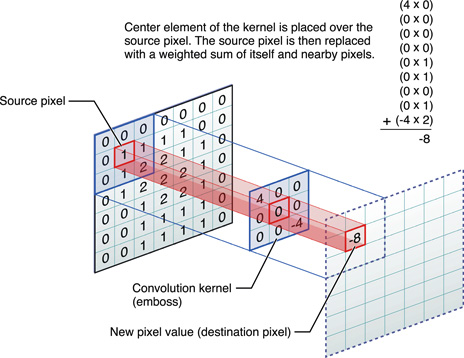

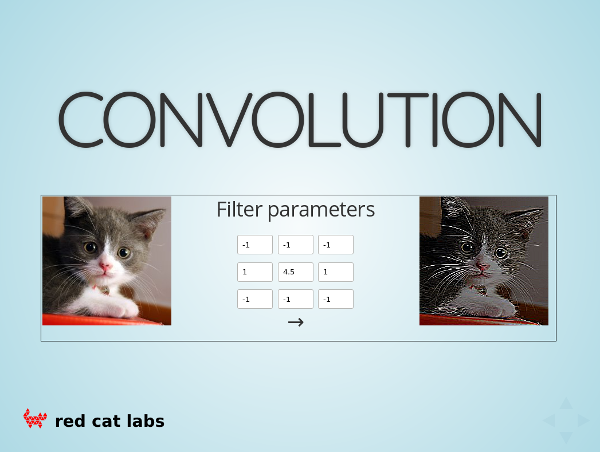
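
A small sketch of "the blame game", assuming a single sigmoid neuron trained with cross-entropy loss on the OR function (a toy dataset chosen for illustration). Each example's error is passed back to the weights in proportion to how much they contributed:

```python
import numpy as np

def train_neuron(X, y, lr=1.0, steps=2000):
    rng = np.random.default_rng(0)
    w = rng.normal(scale=0.1, size=X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # forward pass: current predictions
        err = p - y                             # 'blame' per example (dLoss/dz for cross-entropy)
        w -= lr * X.T @ err / len(y)            # nudge each weight against its share of the blame
        b -= lr * err.mean()
    return w, b

# Known inputs and outputs (OR)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)
w, b = train_neuron(X, y)
pred = 1.0 / (1.0 + np.exp(-(X @ w + b)))
print(np.round(pred))
```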

Processing Images

- Pixels in an image are 'organised'
- Idea : Use whole image as feature
  - Update parameters of 'Photoshop filters'
  - Mathematical term : 'convolution kernel'
  - CNN = Convolutional Neural Network
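
A minimal NumPy sketch of one 'Photoshop filter' applied as a convolution kernel. In a CNN the kernel values would be learned parameters; here a vertical-edge detector is fixed by hand for illustration (and, as in most deep-learning libraries, the kernel is not flipped, so strictly this is cross-correlation):

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2D convolution: slide the kernel over the image, summing elementwise products."""
    kh, kw = kernel.shape
    H, W = image.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

edge = np.array([[1, 0, -1],
                 [1, 0, -1],
                 [1, 0, -1]], dtype=float)   # responds to bright-left / dark-right edges
img = np.zeros((5, 5))
img[:, :2] = 1.0                            # bright left two columns, dark elsewhere
print(conv2d(img, edge))                    # each row: [3. 3. 0.]
```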

CNN Filter

Play with a Filter

http://redcatlabs.com/2017-03-20_TFandDL_IntroToCNNs/CNN-demo.html

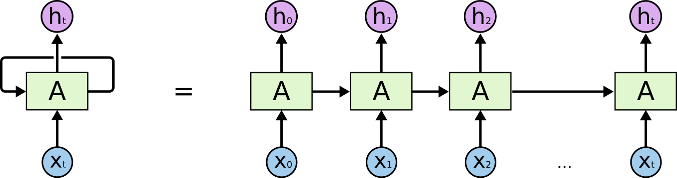

Processing Sequences

Variable-length input doesn't "fit"

- Run network for each timestep
  - ... with the same parameters
  - But 'pass along' internal state
- This state is 'hidden depth'
  - ... and should learn features that are useful
  - ... because everything is differentiable

Basic RNN

RNN chain

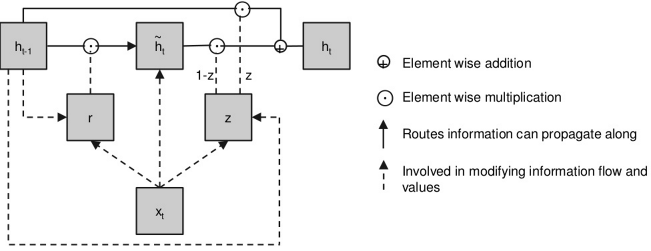
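
A minimal sketch of the RNN chain, assuming a plain tanh cell (names and sizes are illustrative). The same weights are reused at every timestep and the hidden state is passed along, so inputs of any length fit:

```python
import numpy as np

def rnn_forward(xs, Wx, Wh, b, h0):
    """Run the same cell over every timestep, passing the hidden state along."""
    h = h0
    states = []
    for x in xs:                              # xs: (T, n_in), any length T
        h = np.tanh(Wx @ x + Wh @ h + b)      # same parameters at every step
        states.append(h)
    return np.stack(states)                   # (T, n_hidden)

rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
Wx = rng.normal(scale=0.5, size=(n_hidden, n_in))
Wh = rng.normal(scale=0.5, size=(n_hidden, n_hidden))
b = np.zeros(n_hidden)
for T in (2, 7):                              # variable-length inputs
    xs = rng.normal(size=(T, n_in))
    print(T, rnn_forward(xs, Wx, Wh, b, np.zeros(n_hidden)).shape)
```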

Gated Recurrent Units

A GRU

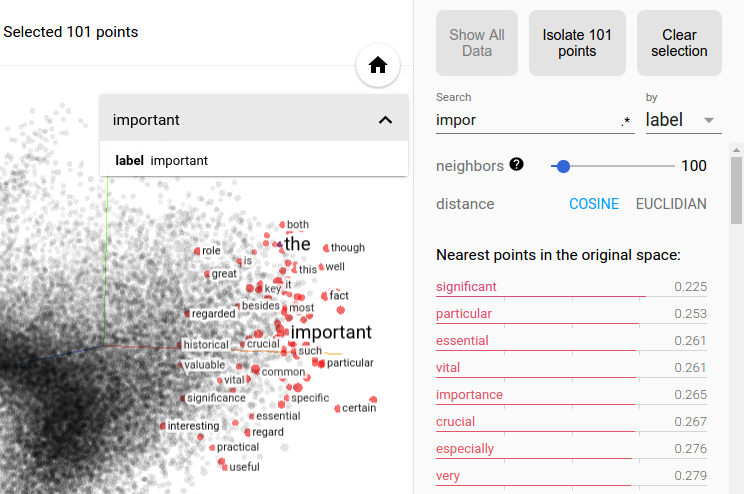
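
A sketch of one GRU step in NumPy, following one common formulation (papers and libraries differ on whether the update gate z or 1 - z multiplies the old state, and on where the reset gate is applied). The helper `init_gru` is hypothetical, just for producing random weights:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_gru(n_in, n_hidden, seed=0):
    """Random weights for the gates: 'z' = update, 'r' = reset, 'h' = candidate state."""
    rng = np.random.default_rng(seed)
    p = {}
    for g in ("z", "r", "h"):
        p["W" + g] = rng.normal(scale=0.3, size=(n_hidden, n_in))
        p["U" + g] = rng.normal(scale=0.3, size=(n_hidden, n_hidden))
        p["b" + g] = np.zeros(n_hidden)
    return p

def gru_step(x, h, p):
    z = sigmoid(p["Wz"] @ x + p["Uz"] @ h + p["bz"])             # update gate: how much to change
    r = sigmoid(p["Wr"] @ x + p["Ur"] @ h + p["br"])             # reset gate: how much old state to use
    h_cand = np.tanh(p["Wh"] @ x + p["Uh"] @ (r * h) + p["bh"])  # candidate new state
    return (1.0 - z) * h + z * h_cand                            # gated blend of old and candidate

p = init_gru(n_in=3, n_hidden=4)
h = np.zeros(4)
for x in np.random.default_rng(1).normal(size=(5, 3)):
    h = gru_step(x, h, p)
```

If the update gate stays near 0, the state is copied through unchanged, which is what lets GRUs carry information over long sequences.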

Word Embeddings

- Major advance in ~2013
- Words that appear in similar contexts should have similar representations
- Assign a vector (~300d) to each word
  - Slide a 'window' over the text (1Bn words?)
  - Word vectors are nudged around to minimise surprise
  - Keep iterating until 'good enough'
- The vector-space of words self-organizes...
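
A toy sketch of the window-and-nudge idea, using word2vec-style skip-gram with negative sampling as the concrete (swapped-in) technique; GloVe, used later in this talk, is trained differently, from global co-occurrence counts. Word ids, sizes and the learning rate are illustrative:

```python
import numpy as np

def window_pairs(tokens, window=2):
    """Slide a window over the text: every (centre, context) pair within `window` positions."""
    pairs = []
    for i, centre in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((centre, tokens[j]))
    return pairs

def sgns_step(W_in, W_out, centre, context, negatives, lr=0.05):
    """One skip-gram negative-sampling update, in place: pull the observed pair together,
    push randomly sampled 'negative' words apart."""
    v = W_in[centre].copy()
    grad_v = np.zeros_like(v)
    for idx, label in [(context, 1.0)] + [(n, 0.0) for n in negatives]:
        u = W_out[idx].copy()
        p = 1.0 / (1.0 + np.exp(-v @ u))   # model's belief that (centre, idx) co-occur
        g = p - label                       # 'surprise': gradient of the log-loss w.r.t. the score
        grad_v += g * u
        W_out[idx] -= lr * g * v
    W_in[centre] -= lr * grad_v

print(window_pairs(["a", "dog", "runs"], window=1))
# [('a', 'dog'), ('dog', 'a'), ('dog', 'runs'), ('runs', 'dog')]
```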

Embedding in a Picture

TensorBoard FTW!

Image → Caption

- large brown dog running away from the sprinkler in the grass .

- a brown dog chases the water from a sprinkler on a lawn .

- a brown dog running on a lawn near a garden hose

- a brown dog plays with the hose .

- a dog is playing with a hose .

Data Set : Flickr30k

- Summary statistics :
  - 31,783 images
  - 158,915 human-created captions
- Attribution-style licensing :
  - P. Young, A. Lai, M. Hodosh, and J. Hockenmaier. From image description to visual denotations: New similarity metrics for semantic inference over event descriptions.

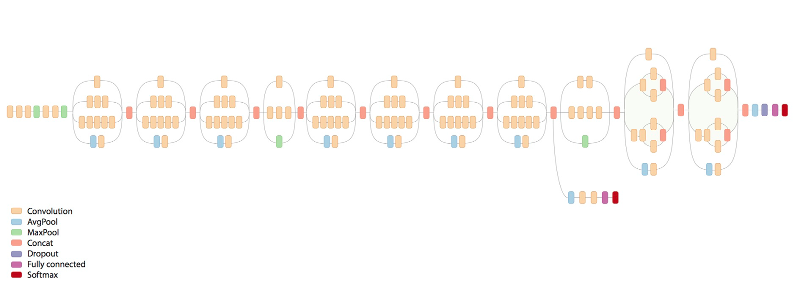

Flickr30k : Feat(Image)

- Featurize all the images using InceptionV3
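
A minimal featurization sketch with `tf.keras.applications.InceptionV3`, assuming TensorFlow 2.x (the talk's notebook used 2017-era Keras and may differ). With `include_top=False` and `pooling="avg"`, each 299×299 image becomes one 2048-d vector; `weights=None` skips the ImageNet download when only checking shapes:

```python
import numpy as np
import tensorflow as tf

def make_featurizer(weights="imagenet"):
    """InceptionV3 without its classifier head; global average pooling -> 2048-d per image."""
    return tf.keras.applications.InceptionV3(include_top=False, weights=weights, pooling="avg")

def featurize(model, images):
    """images: array of shape (N, 299, 299, 3) with pixel values in [0, 255]."""
    x = tf.keras.applications.inception_v3.preprocess_input(np.array(images, dtype="float32"))
    return model.predict(x, verbose=0)   # (N, 2048)
```

Computing these once and caching them means the caption model never has to run the CNN during training.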

Flickr30k : Feat(Text)

- Want to make sure captions are learnable
  - Only use captions with "common-enough" words
  - All words must appear in captions of at least 5 different images
  - All words must be in GloVe 100k (50d) embedding
  - Ensure 'stop' words are at start of dictionary
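
The filtering rules above can be sketched in plain Python. The function name, the whitespace tokenization, and the `glove_words` / `stop_words` inputs are hypothetical stand-ins, not the notebook's code:

```python
from collections import defaultdict

def build_vocab(captions_by_image, glove_words, stop_words, min_images=5):
    """Keep words seen in >= min_images distinct images AND present in the embedding.
    Returns (dictionary with stop words first, captions using only kept words)."""
    images_per_word = defaultdict(set)
    for image_id, captions in captions_by_image.items():
        for caption in captions:
            for word in caption.split():
                images_per_word[word].add(image_id)
    ok = {w for w, imgs in images_per_word.items()
          if len(imgs) >= min_images and w in glove_words}
    head = [w for w in stop_words if w in ok]   # stop words at the start of the dictionary
    tail = sorted(ok - set(head))
    kept = {img: [c for c in caps if all(w in ok for w in c.split())]
            for img, caps in captions_by_image.items()}
    return head + tail, kept

caps = {i: ["a dog runs"] for i in range(5)}
caps[5] = ["a zebra runs", "a dog runs"]        # 'zebra' appears in only one image
vocab, kept = build_vocab(caps, {"a", "dog", "runs", "zebra"}, stop_words=["a"])
print(vocab)     # ['a', 'dog', 'runs']
print(kept[5])   # ['a dog runs']
```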

Sequences from Networks

- Word-by-word (Test)

- Teacher forcing (Training)

- Embedding choices

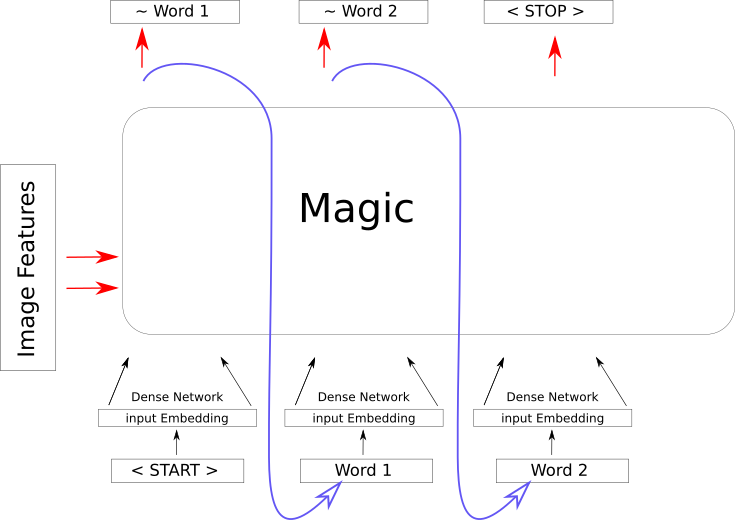

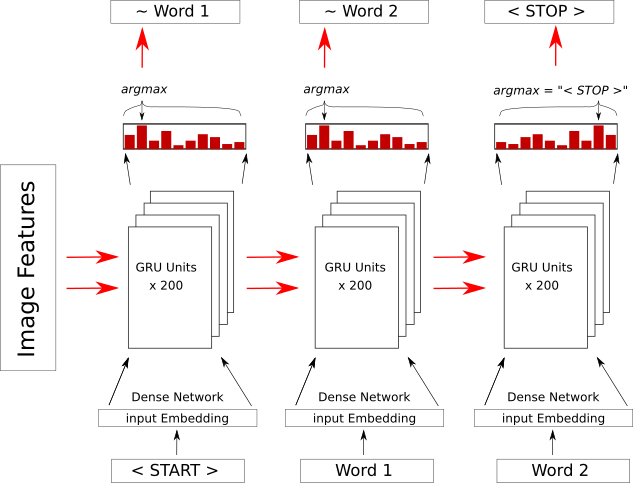
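
A plain-Python sketch contrasting the two modes. `step_fn(word_id, state) -> (probs, state)` is a hypothetical model interface, and `toy_step` is a fake model for demonstration only:

```python
def teacher_forcing_pairs(caption_ids, start_id, end_id):
    """Training time: the input at step t is the TRUE word t-1; the target is word t."""
    inputs = [start_id] + caption_ids
    targets = caption_ids + [end_id]
    return inputs, targets

def greedy_decode(step_fn, state, start_id, end_id, max_len=20):
    """Test time: feed the model's OWN previous prediction back in, word by word."""
    word, caption = start_id, []
    for _ in range(max_len):
        probs, state = step_fn(word, state)
        word = max(range(len(probs)), key=lambda i: probs[i])   # ArgMax
        if word == end_id:
            break
        caption.append(word)
    return caption

# Fake 'trained model': always predicts previous id + 1, then END (id 9) after id 4
def toy_step(word, state):
    probs = [0.0] * 10
    probs[word + 1 if word < 4 else 9] = 1.0
    return probs, state

print(teacher_forcing_pairs([5, 6, 7], start_id=1, end_id=2))  # ([1, 5, 6, 7], [5, 6, 7, 2])
print(greedy_decode(toy_step, None, start_id=1, end_id=9))      # [2, 3, 4]
```

Teacher forcing lets every timestep train in parallel from the true caption; at test time, the model has to live with its own earlier choices.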

Generating Sequences

Basic Layout : Test Time

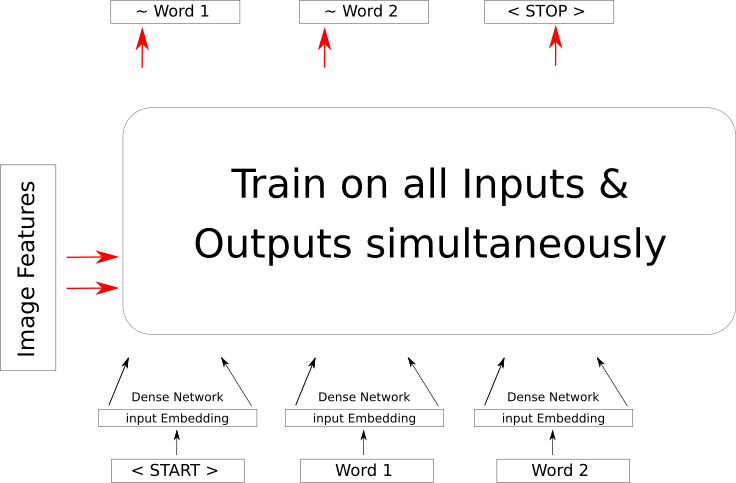

Training Time

"Teacher Forcing"

Embedding Choices

- Word Vector

- One-Hot embedding

- Use each word's numeric index

Word Vectors

- Fixed dimension, independent of vocab size

- Stop words may be 'murky'

- Action words need definitions

- Often used as input stage

One-Hot

- Vocab ~7k ⇒ vector.len == 7000
- Very high number of 0/1 inputs
- Often used as output stage : idx = ArgMax( Softmax() )
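
A short NumPy sketch of a one-hot vector and `idx = ArgMax( Softmax() )`, with random logits standing in for a network's output. Softmax is monotonic, so the ArgMax is the same with or without it; softmax matters for the training loss, not for picking the word:

```python
import numpy as np

def one_hot(idx, vocab_size):
    v = np.zeros(vocab_size)
    v[idx] = 1.0
    return v

def softmax(logits):
    e = np.exp(logits - logits.max())   # subtract max for numerical stability
    return e / e.sum()

vocab_size = 7000
x = one_hot(42, vocab_size)             # 7000 inputs, a single 1
logits = np.random.default_rng(0).normal(size=vocab_size)
probs = softmax(logits)
idx = int(np.argmax(probs))             # idx = ArgMax( Softmax() )
```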

Binary Index

- Low dimensionality
  - 14 binary digits for 7k vocabulary
- Difficult to believe it works
- Add resilience using error-correcting codes (ECC)
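
A sketch of the binary-index encoding, assuming the network emits one sigmoid output per bit that is thresholded at 0.5 (the function names are hypothetical). Note that 13 bits is the minimum for 7,000 words (2^13 = 8192), so a 14-bit code leaves spare codewords. A single flipped bit lands on a different word, which is why ECC helps:

```python
import numpy as np

def index_to_bits(idx, n_bits=14):
    """Word index -> fixed-width binary vector (most significant bit first)."""
    return np.array([(idx >> k) & 1 for k in reversed(range(n_bits))], dtype=float)

def bits_to_index(outputs):
    """Threshold per-bit sigmoid outputs at 0.5 and rebuild the index."""
    idx = 0
    for bit in (np.asarray(outputs) > 0.5).astype(int):
        idx = (idx << 1) | int(bit)
    return idx

min_bits = int(np.ceil(np.log2(7000)))   # 13
print(bits_to_index(index_to_bits(6999)))  # 6999
```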

Combos

- Action+Stop words : 141-d

- Word Embedding : 50-d

- Concatenate them

- Use as input stage
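
A sketch of the combined input stage, assuming the 141 action + stop words occupy dictionary ids 0..140 (consistent with putting stop words at the start of the dictionary, but an assumption here). Random vectors stand in for the GloVe rows:

```python
import numpy as np

N_ONE_HOT = 141   # action + stop words: assumed to be dictionary ids 0..140
EMB_DIM = 50      # GloVe 50-d

def input_vector(word_id, embeddings):
    """141-d one-hot (all zeros for words outside that set) concatenated with the 50-d word vector."""
    one_hot = np.zeros(N_ONE_HOT)
    if word_id < N_ONE_HOT:
        one_hot[word_id] = 1.0
    return np.concatenate([one_hot, embeddings[word_id]])

embeddings = np.random.default_rng(0).normal(size=(7000, EMB_DIM))  # stand-in for GloVe
print(input_vector(3, embeddings).shape)   # (191,)
```

The one-hot part gives 'murky' stop words a crisp identity, while the word vector carries meaning for everything else.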

New Machinery

- Dilated CNNs

- BatchNorm

- Residual connections

- Gated Linear Units

- Fishing Nets

- Attention-is-all-you-need Layer

Dilated CNNs

DeepMind : WaveNet

Conv1D(filters, kernel_size, padding='causal', dilation_rate=4)
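
What `padding='causal'` plus `dilation_rate` computes can be sketched in NumPy for a single channel (in this sketch w[0] multiplies the current step; Keras stores its kernel in the opposite order). Stacking kernel-size-2 layers with dilations 1, 2, 4, 8 gives a receptive field of 1 + (1 + 2 + 4 + 8) = 16 steps:

```python
import numpy as np

def causal_dilated_conv1d(x, w, dilation):
    """y[t] = sum_k w[k] * x[t - k*dilation], treating x as zero before t=0.
    The output at t never depends on inputs after t (causal)."""
    y = np.zeros(len(x))
    for t in range(len(x)):
        for k in range(len(w)):
            s = t - k * dilation
            if s >= 0:
                y[t] += w[k] * x[s]
    return y

def dilated_stack(x, w, dilations=(1, 2, 4, 8)):
    """WaveNet-style stack: doubling the dilation doubles the receptive field each layer."""
    for d in dilations:
        x = causal_dilated_conv1d(x, w, d)
    return x

impulse = np.zeros(20)
impulse[0] = 1.0
print(dilated_stack(impulse, w=[1.0, 1.0]))  # ones at t = 0..15, then zeros
```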

BatchNorm

- Fix activation/parameter explosion problems
- New layer that learns scale & shift parameters :
  - Squash layer to ~N(0,1)
  - In Keras : BatchNormalization()
- Newer ideas : LayerNorm (not in Keras)

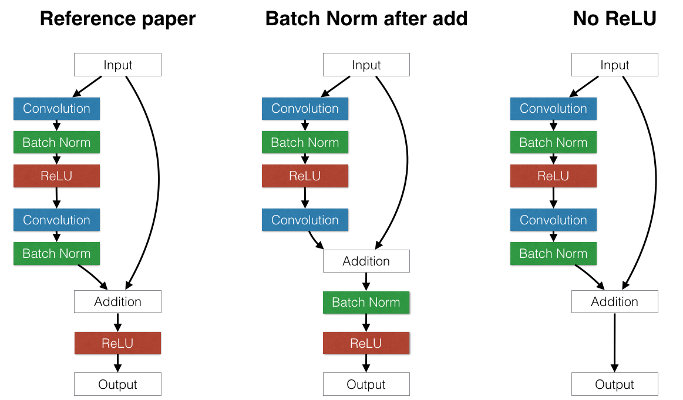
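
A NumPy sketch of the training-time forward pass for both normalizations (the running averages that batch norm uses at inference are omitted). `gamma` and `beta` are the learned scale and shift; the only difference between the two is which axis is squashed to ~N(0,1):

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    """Normalise each feature over the batch (axis 0), then apply learned scale/shift."""
    mu = x.mean(axis=0)
    var = x.var(axis=0)
    return gamma * (x - mu) / np.sqrt(var + eps) + beta

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalise each example over its features (last axis), then apply learned scale/shift."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mu) / np.sqrt(var + eps) + beta

x = np.random.default_rng(0).normal(10.0, 5.0, size=(64, 8))   # batch of 64, 8 features
y = batch_norm(x, np.ones(8), np.zeros(8))
print(y.mean(axis=0).round(6), y.std(axis=0).round(3))
```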

Residual Connections

Introduced by Microsoft in their ResNet Paper

Skip connections now very common

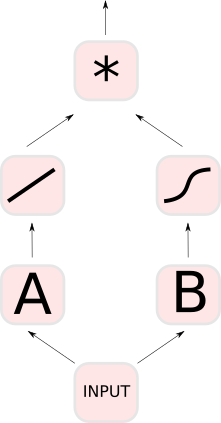
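
A minimal sketch of a skip connection, y = x + F(x). Here F is one ReLU dense layer, an illustrative choice; its weight matrix must be square so shapes match. With F's weights at zero, the block is exactly the identity, which is one intuition for why very deep stacks of such blocks remain trainable:

```python
import numpy as np

def residual_block(x, W, b):
    """y = x + F(x): the skip path carries x through unchanged, so F only learns a correction."""
    return x + np.maximum(0.0, x @ W + b)

x = np.random.default_rng(0).normal(size=(4, 6))
print(np.allclose(residual_block(x, np.zeros((6, 6)), np.zeros(6)), x))  # True
```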

Gated Linear Units

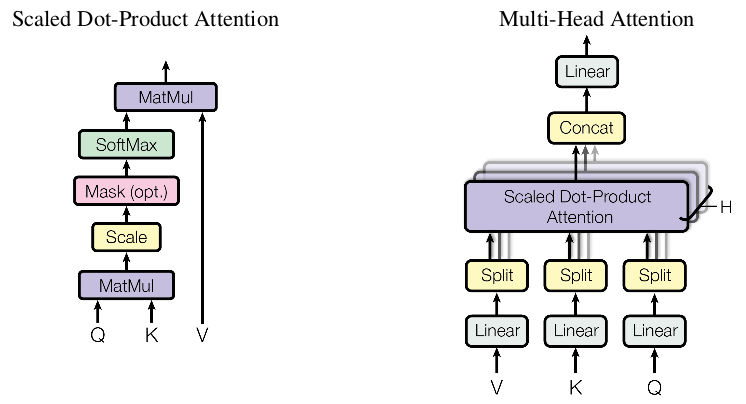
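
A NumPy sketch of a Gated Linear Unit: the layer's output channels are split into halves A and B, and the result is A ⊗ σ(B), so the sigmoid half acts as a learned gate on the linear half. The shapes below are illustrative:

```python
import numpy as np

def glu(x):
    """Gated Linear Unit: split channels into halves A and B, return A * sigmoid(B)."""
    a, b = np.split(x, 2, axis=-1)
    return a * (1.0 / (1.0 + np.exp(-b)))

x = np.random.default_rng(0).normal(size=(5, 8))   # e.g. 5 timesteps x 8 conv output channels
print(glu(x).shape)   # (5, 4)
```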

Attention-is-all-you-need Layer

See the very recent Google paper, "Attention Is All You Need" (Vaswani et al., 2017)
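
The core of that paper is scaled dot-product attention, softmax(QKᵀ/√d_k)V. This single-head sketch omits the learned projections and multi-head split. The optional causal mask, which stops position t from attending to later positions, is what caption generation needs:

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V, causal=False):
    """softmax(Q K^T / sqrt(d_k)) V; with causal=True, position t attends only to positions <= t."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    if causal:
        T = scores.shape[0]
        scores = np.where(np.tril(np.ones((T, T), dtype=bool)), scores, -1e9)
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

rng = np.random.default_rng(0)
Q, K, V = (rng.normal(size=(5, 4)) for _ in range(3))   # 5 positions, d_k = 4
out, w = scaled_dot_product_attention(Q, K, V, causal=True)
print(out.shape)   # (5, 4)
```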

Network Picture 1

'Standard' GRU set-up

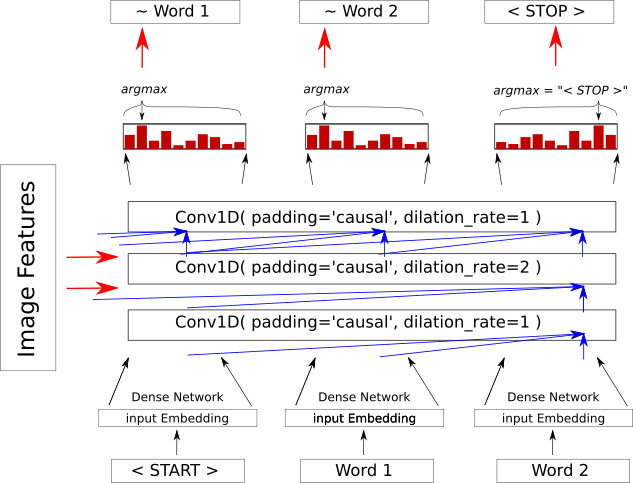

Network Picture 2

Dilated CNN set-up (many variants)

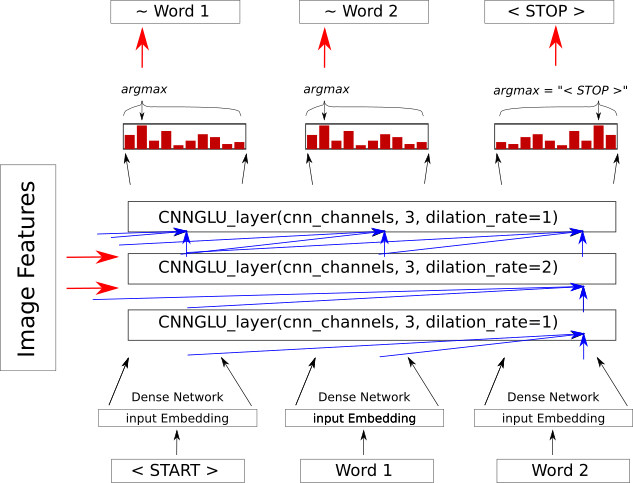

Network Picture 3

Facebook CNN set-up (radically simplified)

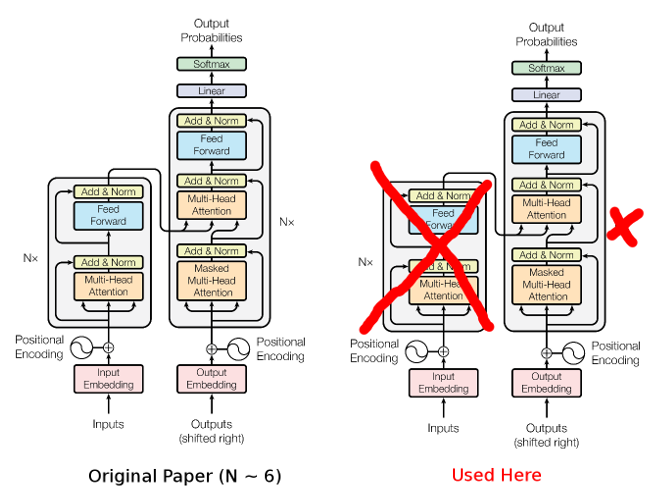

Network Picture 4

Attention is All you Need (Google, T+7 days)

Walk-Through

github.com/mdda/deep-learning-workshop

notebooks/2-CNN/7-Captioning/4-run-captioning.ipynb

Image → Caption : Untrained

- cables burning gracefully pin shine spoons arrange marshy solar board briefs claps tickets survey disinterested tractor looked movies guns rows engine technical town plaza fat captain paddlers historic motorcyclist soccer scales arabian

- does crown items bug pause ink what kayakers ohio lettering bikes battle squeezing person clad

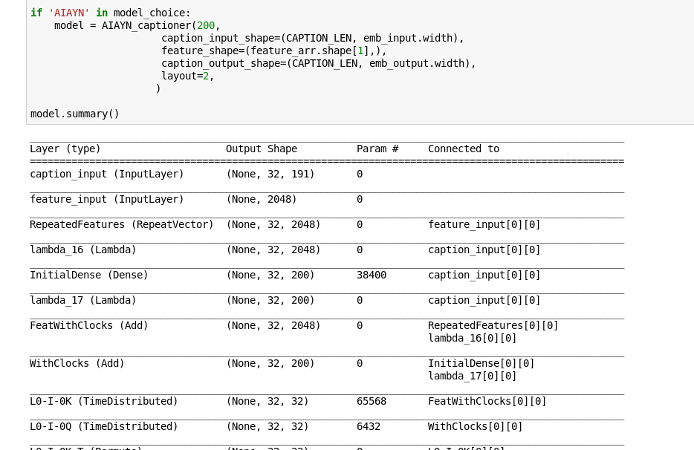

Typical Training

- Input is 141d one-hot + 50d embedding

- Output is ~7,000 softmax one-hot

- Internal width ~200 units

- No special learning rate adjustments

- 50 epochs take ~ 3.5 hrs
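
A sketch of a 'standard' GRU captioner at these sizes, assuming TensorFlow 2.x `tf.keras`. Feeding the 2048-d image features through a tanh `Dense` layer as the GRU's initial state is an assumed design, not necessarily the notebook's wiring. The word inputs are the teacher-forced 191-d (141 + 50) vectors, and sparse integer targets are used in place of explicit one-hot targets (equivalent loss):

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

def build_caption_model(img_dim=2048, input_dim=191, width=200, vocab_size=7000):
    """Image features set the GRU's initial state; word inputs drive it; softmax over the vocab."""
    img = layers.Input(shape=(img_dim,), name="image_features")
    words = layers.Input(shape=(None, input_dim), name="word_inputs")     # 141-d one-hot + 50-d vector
    h0 = layers.Dense(width, activation="tanh")(img)                       # image -> initial GRU state
    seq = layers.GRU(width, return_sequences=True)(words, initial_state=[h0])
    probs = layers.Dense(vocab_size, activation="softmax")(seq)            # next-word distribution
    model = tf.keras.Model([img, words], probs)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")
    return model

# Tiny sizes just to check the wiring
small = build_caption_model(img_dim=8, input_dim=6, width=5, vocab_size=11)
print(small.predict([np.zeros((2, 8)), np.zeros((2, 4, 6))], verbose=0).shape)   # (2, 4, 11)
```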

Image → Caption : GRUs

- a black dog running on a park .

- two big dogs play ball across the grass .

- the dog is being blocked by three other men each of it to something .

- a dog chases a ball while a man in a vest holding the hand .

- a man and a dog are chasing with a frisbee in the grass .

Results : Dilated CNN

- the brown dog is standing on a yard .

- one dog bites another baseball player has found behind in the background .

- a dog running in a field leaps onto a field .

- two brown dogs are playing with a ball at a park .

- a brown dog runs his white dog while he is running along in winter grass .

Results : Gated-Linear-Units

- a gray dog is running on a grass field .

- a dog jumping off over a bush .

- a dog on a leash is near a fountain .

- a brown dog is running through the muddy rain .

- a one dog with a brown jacket is playing in an enclosed setting .

Results : AIAYN

- two dogs play in the grass .

- two dogs race by the two dogs fight to a grassy yard .

- the brown dogs lead beside two fire .

- two colored dog on a dogs to a metal tunnel .

- one dog chases after a brown dog on the park .

Wrap-up

- This session was more challenging

- Lots of innovation in NLP

- Having a GPU is VERY helpful

- QUESTIONS -

Martin.Andrews @ RedCatLabs.com

My blog : http://mdda.net/

GitHub : mdda

Deep Learning MeetUp Group

- MeetUp.com / TensorFlow-and-Deep-Learning-Singapore
- Next Meeting :
  - 20-July-2017 @ Google : "Tips & Tricks"
- Typical Contents :
  - Talk for people starting out
  - Something from the bleeding-edge
  - Lightning Talks

Deep Learning : 1-day Intro

- Level : Beginner+
- Date : 24-June-2017
- Basic plan :
  - 9:30am-4pm+ on a Saturday
  - Play with real models
  - Ask questions 1-on-1
  - Get inspired

Cost: S$15 (lunch included) FULL

8-week Deep Learning Developer Course

- July - Sept (catch-up during August)
- Weekly 3-hour sessions will include :
  - Instruction
  - Projects : 3 structured & 2 self-directed
- More information : http://RedCatLabs.com/course
- Expect to work hard...