Named Entity Recognition through Learning from Experts

IES-2015

Martin Andrews @ redcatlabs.com

24 November 2015

Outline

- Motivation

- Approach

- Model

- Results

- Further Work

- Conclusions

Motivation

- Building NLP system for Singapore client

- NER is an essential component

- Existing systems are not usable

NER : Quick Example

- Transform :

-

- Soon after his graduation, Jim Soon became Managing Director of Lam Soon.

- Into :

-

- Soon after his graduation,

Jim Soon PERbecame Managing Director ofLam Soon ORG.

- Soon after his graduation,

Existing Systems

- Licensing problems

- Speed problems

- Need for flexibility

Approach

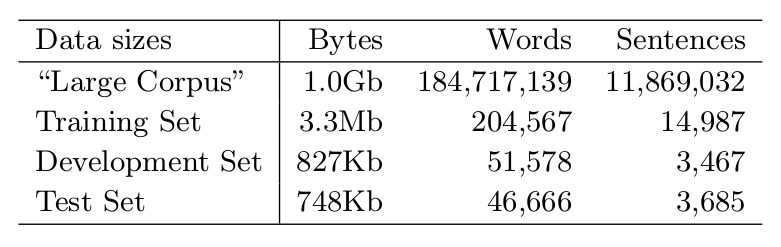

- Use existing available corpus

- Use existing 'experts' ...

- ... to annotate the corpus

- Train new system on annotations

CoNLL 2003

- NER language task at the 2003 Conference on Natural Language Learning

- 16 systems 'participated'

- Top 5 F1 scores (±0.7%):

-

- 88.76%; 88.31%; 86.07%; 85.50%; 85.00%

The Experts

- For this work, used two leading NER systems:

-

- Stanford NER (part of CoreNLP)

- MITIE (from MIT)

- Other contenders:

-

- Berkeley Entity Resolution System

- Illinois Named Entity Tagger (*)

Annotate Corpus

Learn from Experts

- Build a model

- Train on annotations

- Throw away experts

- Use 'DeepNER' standalone

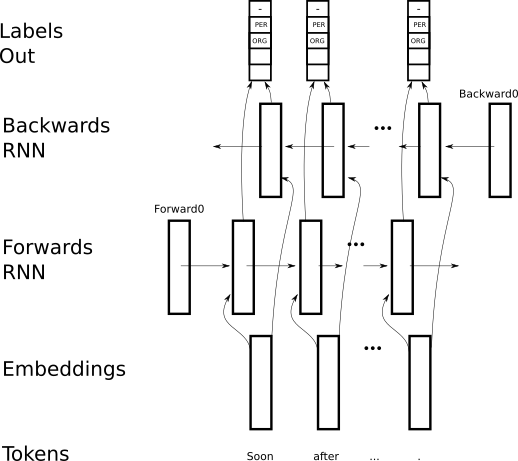

The Model

- Word Embedding

- Bi-Directional RNN

- Training Regimes

Word Embedding

- A map from

"token"→Float[100] - Train over corpus on windows of words

- Self-organizes...

(used word2vec)

BiDirectional RNN

BiDirectional RNN

- Python / Theano, using

blocksframework - Training runs on GPU

- Final model can run on CPU

x = tensor.matrix('tokens', dtype="int32")

x_mask = tensor.matrix('tokens_mask', dtype=floatX)

lookup = LookupTable(config.vocab_size, config.embedding_dim)

x_extra = tensor.tensor3('extras', dtype=floatX)

rnn = Bidirectional(

SimpleRecurrent(activation=Tanh(),

dim=config.hidden_dim,

),

)

# Need to reshape the rnn outputs to produce suitable input here...

gather = Linear(name='hidden_to_output',

input_dim = config.hidden_dim*2,

output_dim = config.labels_size,

)

Origin : Berkeley, now with industry buy-in

Training Regimes

- Typical run : 100,000,000 sentences

- Variations include :

-

- Single expert

- Multiple experts

- Original data

- Combined experts

Results

- Dataset quirks

- Labelling speed

- F1 scores

- Ensembling

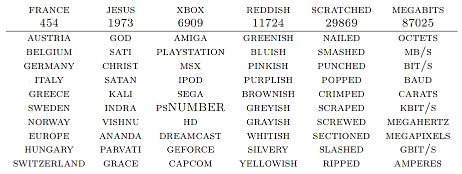

Dataset Quirks

- Corpus is Reuters news over 10 month period

- Test data is just one month

-

- Huge numbers of sports scores

- Scores are very difficult to parse

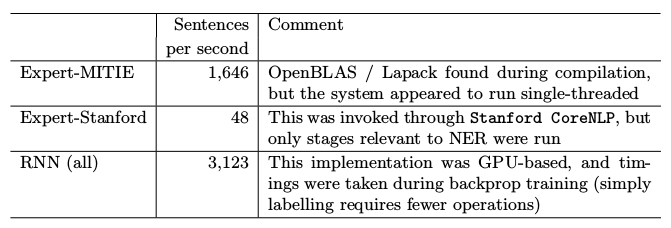

Labelling speed

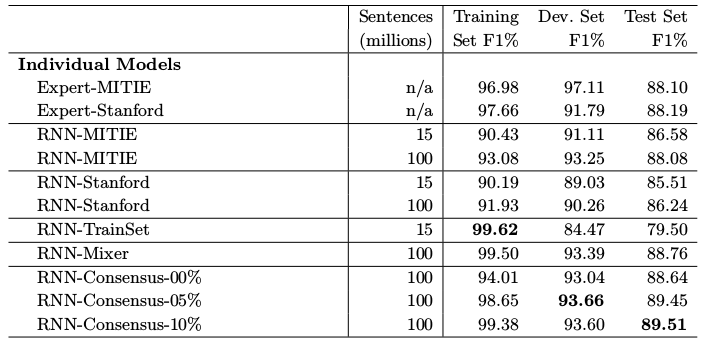

F1 Scores on CoNLL 2003

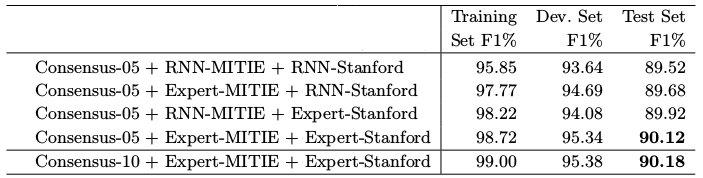

Ensemble F1 Scores

Further Work

- Implement on ASEAN-specific corpus

-

- Company-internal...

- Per-letter NER using RNN

Conclusions

- Really works...

- This is a Deep Learning system that :

-

- Exceeds capabilities of teachers

- Executes faster

- Technique means that secret sauce is data

- QUESTIONS -

Martin.Andrews @

RedCatLabs.com

My blog : http://mdda.net/

GitHub : mdda

- BLANK -

Open Source

- Compare :

-

- "Open Source is great because it costs nothing"

- "Scientific research is great because it costs nothing"

- Open Source is Big-F Free

-

- The F is for Freedom

- Everyone can contribute

- karma++

Your Name Here...