Deep Learning Workshop

PyConSG 2016

Martin Andrews @ redcatlabs.com

23 June 2016

About Me

- Machine Intelligence / Startups / Finance

  - Moved to Singapore in Sep-2013

- 2014 = 'fun' :

  - Machine Learning, Deep Learning, NLP

  - Robots, drones

  - "MeetUp Pro"

- Since 2015 = 'serious' :: NLP + deep learning

Deep Learning Now

in production in 2016

- Speech recognition

- Language translation

- Vision :

  - Object recognition

  - Automatic captioning

- Reinforcement Learning

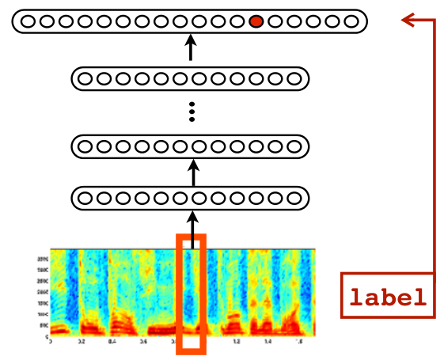

Speech Recognition

Android feature since Jelly Bean (v4.1, 2012) using Cloud

Trained in ~5 days on an 800-machine cluster

Embedded in phone since Android Lollipop (v5.0, 2014)

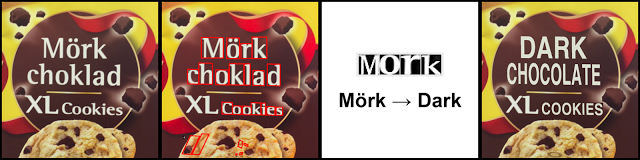

Translation

Google's Deep Models are on the phone

"Use your camera to translate text instantly in 26 languages"

Translations for typed text in 90 languages

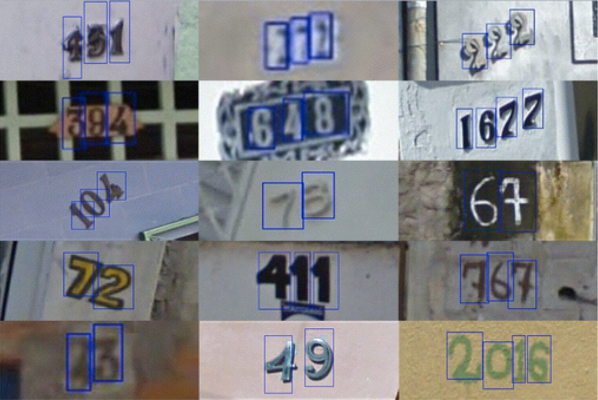

House Numbers

Google Street-View (and ReCaptchas)

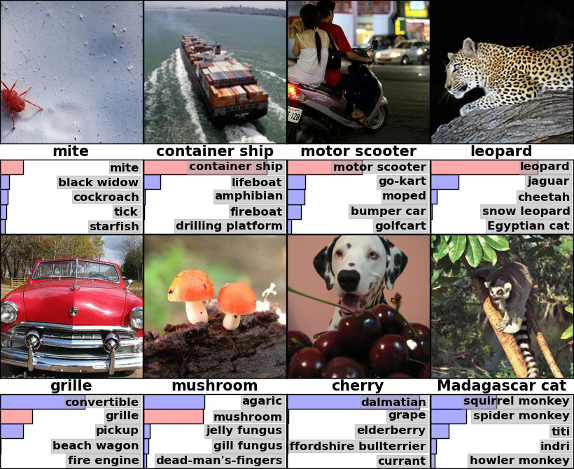

ImageNet Results

(now human competitive on ImageNet)

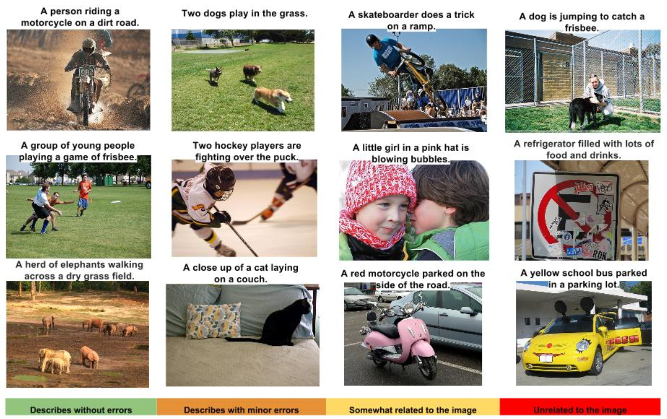

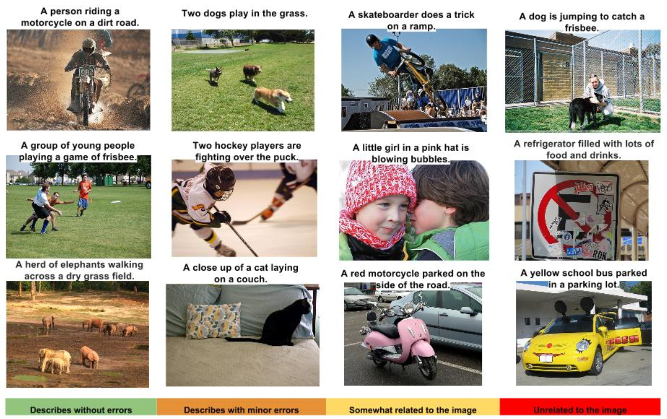

Captioning Images

Some good, some not-so-good

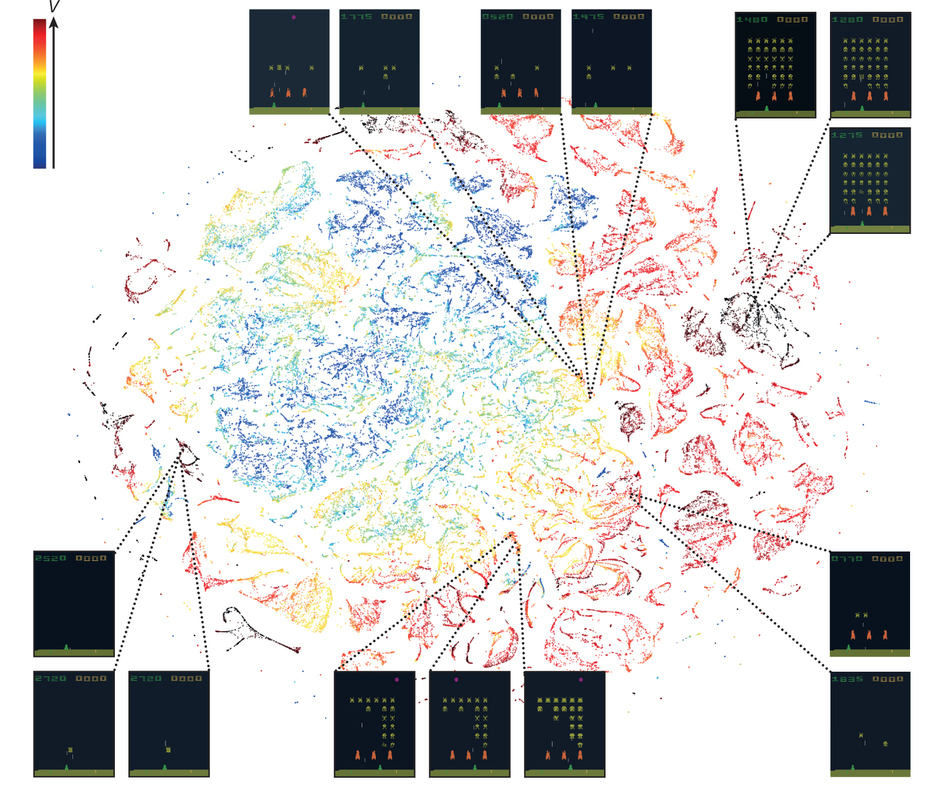

Reinforcement Learning

Google's DeepMind purchase

Learn to play games from the pixels alone

Better than humans 2 hours after switching on

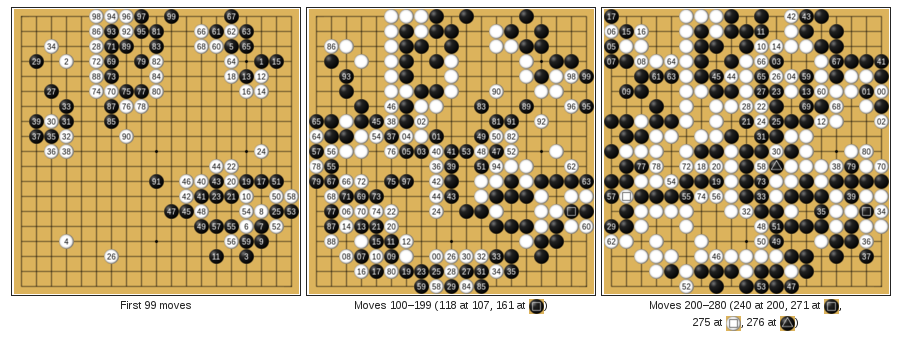

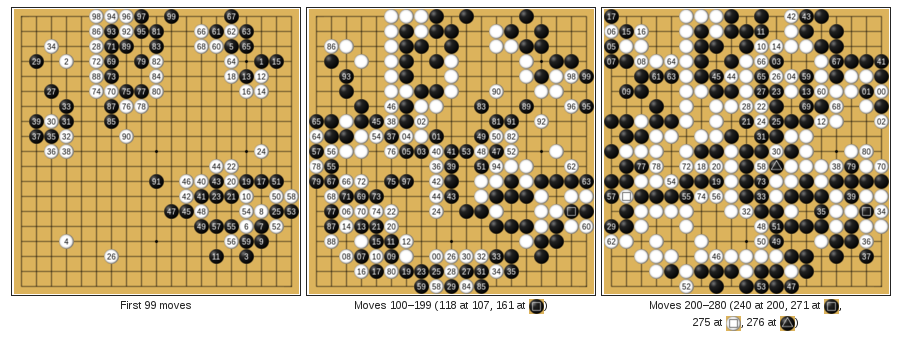

Reinforcement Learning

Google DeepMind's AlphaGo

Learn to play Go from (mostly) self-play

"A.I. Effect"

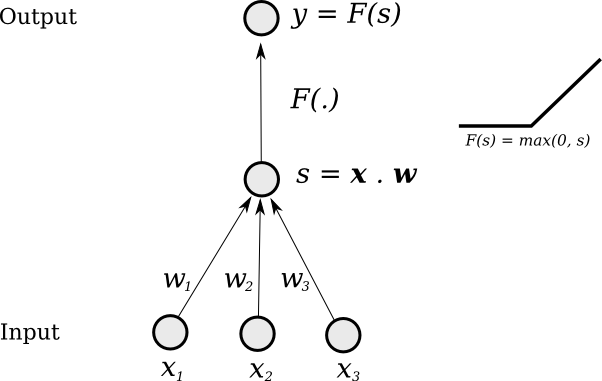

Basic Foundation

- Same as original Neural Networks in 1980s/1990s

- Simple mathematical units ...

- ... combine to compute a complex function

Single "Neuron"

Change weights to change output function

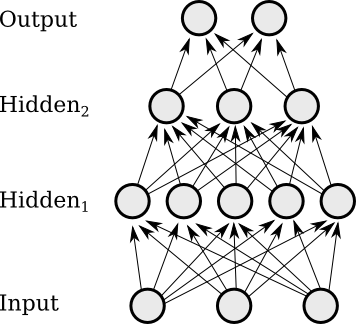
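A minimal sketch of one "neuron", assuming a weighted sum followed by a sigmoid squashing function (the slide does not say which activation is used). The same input gives a different output once the weights change:

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, squashed into (0, 1) by a sigmoid."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))

x = [1.0, 0.0]                                   # illustrative input
low = neuron(x, weights=[-2.0, 1.0], bias=0.0)   # negative weight on x[0]
high = neuron(x, weights=[2.0, 1.0], bias=0.0)   # positive weight on x[0]
print(low, high)
```

Only the weights differ between the two calls, which is the whole point: training means searching for good weights.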

Multi-Layer

Layers of neurons combine and

can form more complex functions
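A small illustration of why layers help, using hand-picked (not learned) weights and simple threshold units. No single unit of this kind can compute XOR, but two layers can:

```python
def step(z):
    """Threshold unit: fires (1.0) when its input is positive."""
    return 1.0 if z > 0 else 0.0

def layer(inputs, weight_rows, biases):
    """One layer: every row of weights defines one neuron."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weight_rows, biases)]

def xor_net(a, b):
    # Hidden layer: first neuron acts as OR, second as AND (weights chosen by hand).
    hidden = layer([a, b], [[1.0, 1.0], [1.0, 1.0]], [-0.5, -1.5])
    # Output neuron: "OR but not AND", which is XOR.
    return layer(hidden, [[1.0, -1.0]], [-0.5])[0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```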

Supervised Learning

- while not done :

  - Pick a training case (x → target_y)

  - Evaluate output_y from the x

  - Modify the weights so that output_y is closer to target_y for that x
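The loop above, sketched for a single sigmoid neuron learning logical OR. The learning rate, step count, and the update rule (output minus target, times the input) are assumptions chosen for illustration, not taken from the workshop notebooks:

```python
import math
import random

def predict(weights, bias, x):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(cases, steps=5000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(steps):                       # "while not done" (fixed budget here)
        x, target_y = rng.choice(cases)          # pick a training case
        output_y = predict(weights, bias, x)     # evaluate output_y from the x
        error = output_y - target_y
        # Nudge each weight so output_y moves toward target_y for this x.
        weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
        bias -= learning_rate * error
    return weights, bias

or_cases = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(or_cases)
for x, target_y in or_cases:
    print(x, target_y, round(predict(weights, bias, x), 3))
```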

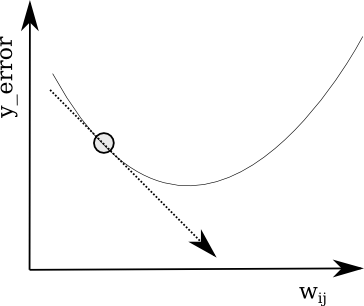

Gradient Descent

Follow the gradient of the error

vs the connection weights
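A one-weight sketch of following the gradient, assuming a made-up error function (w - 3)^2 whose minimum is known to be at w = 3:

```python
def error(w):
    return (w - 3.0) ** 2          # illustrative error surface, lowest at w = 3

def error_gradient(w):
    return 2.0 * (w - 3.0)         # derivative of the error with respect to w

def gradient_descent(w, learning_rate=0.1, steps=100):
    for _ in range(steps):
        w -= learning_rate * error_gradient(w)   # step downhill
    return w

w_final = gradient_descent(w=0.0)
print(w_final, error(w_final))
```

In a real network there are many weights, and the gradient for each is obtained by backpropagation rather than written out by hand.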

Training a Neural Network

- Time to play with :

  - Layers of different widths

  - Layers of different depths

  - "Stochastic Gradient Descent" (SGD)

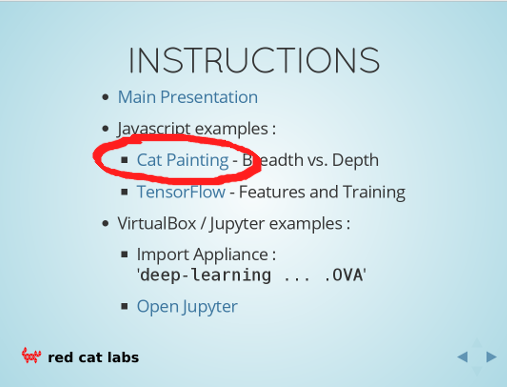

Workshop : SGD (local=BEST)

- Go to the Javascript Painting Example

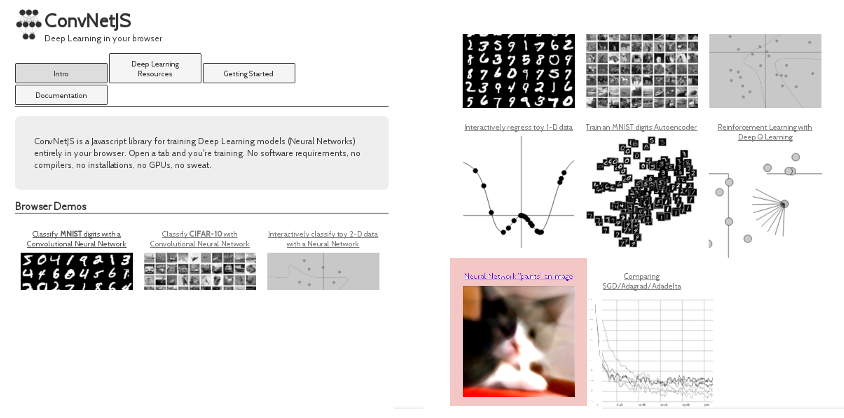

Workshop : SGD (online)

- Go to : http://ConvNetJS.com/

- Look for : "Image 'painting'"

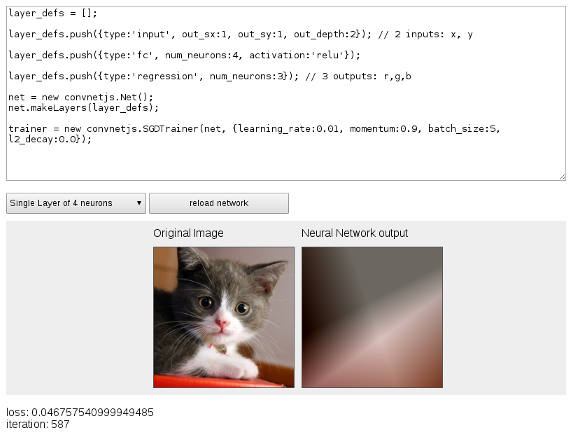

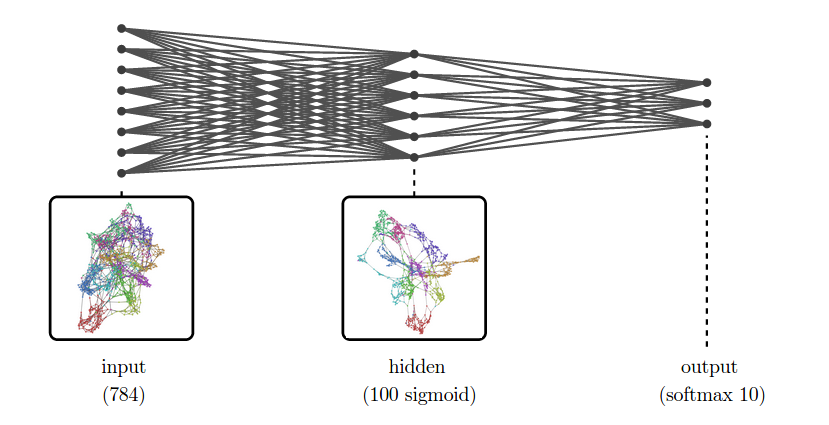

Simple Network

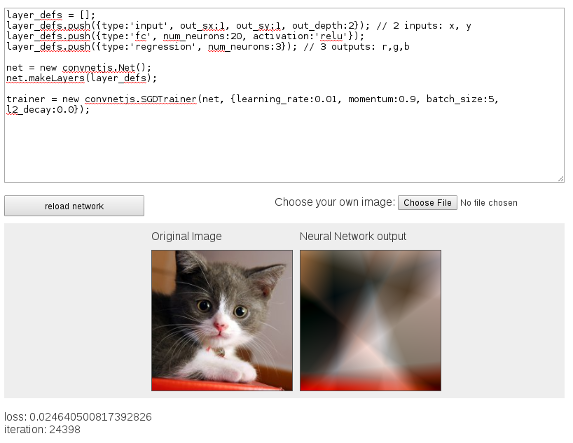

Wider Network

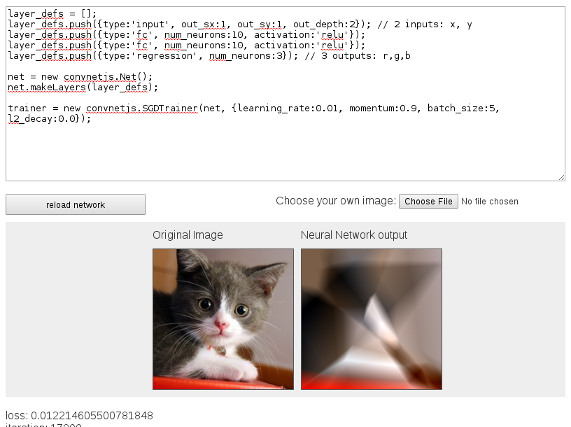

Two-Ply Network

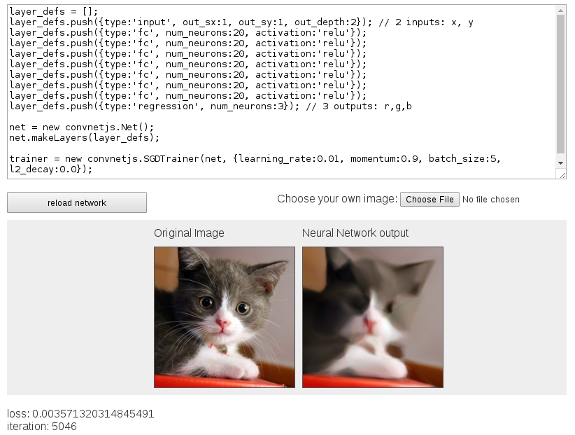

Deep Network and Time

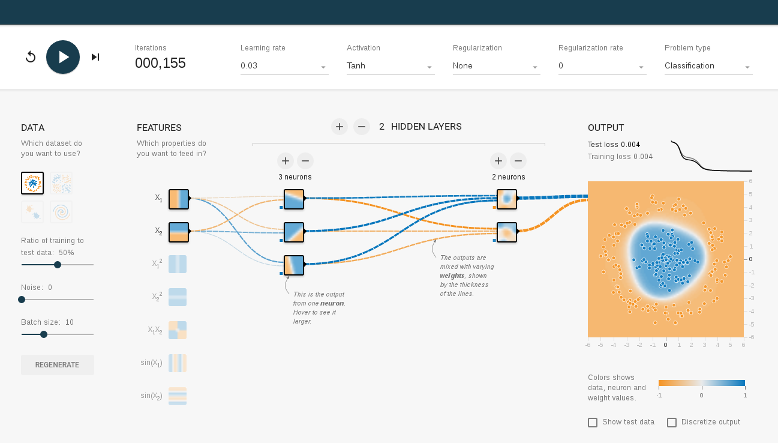

What's Going On Inside?

- Time to look at :

  - Input features

  - What each neuron is learning

  - How the training converges

Workshop : Internals

- Go to the Javascript Example : TensorFlow

(or search online for TensorFlow Playground)

TensorFlow Playground

Workshop : VirtualBox

- Import Appliance 'deep-learning ... .OVA'

- Start the Virtual Machine...

Workshop : Jupyter

- On your 'host' machine

- Go to http://localhost:8080/

Other VM Features

- There is no need to ssh - it should Just Work

- But if you want to have a poke around...

- From your 'host' machine :

ssh -p 8282 user@localhost

# password=password

- or have a look at the code on GitHub...

Frameworks

- Want to express networks at a higher level

- Map network operations onto cores

- Most common frameworks :

  - Caffe - C++ ~ Berkeley

  - Torch - lua ~ Facebook/Twitter

  - Theano - Python ~ Montreal Lab

  - TensorFlow - C++ ~ Google

Theano

- Optimised Numerical Computation in Python

- Computation is described in Python code :

  - Theano operates on expression tree itself

  - Optimizes the tree for operations it knows

  - Makes use of numpy and BLAS

  - Also writes C/C++ or CUDA (or OpenCL)
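To make "operates on the expression tree" concrete, here is a toy tree in plain Python with one rewrite pass. This is not Theano's API: the class names and the `simplify` function are invented for illustration, and Theano's real optimiser is far more extensive.

```python
class Var:
    def __init__(self, name):
        self.name = name
    def evaluate(self, env):
        return env[self.name]

class Const:
    def __init__(self, value):
        self.value = value
    def evaluate(self, env):
        return self.value

class BinOp:
    def __init__(self, left, right):
        self.left, self.right = left, right

class Add(BinOp):
    identity = 0
    def evaluate(self, env):
        return self.left.evaluate(env) + self.right.evaluate(env)

class Mul(BinOp):
    identity = 1
    def evaluate(self, env):
        return self.left.evaluate(env) * self.right.evaluate(env)

def simplify(node):
    """Rewrite the tree: drop additions of 0 and multiplications by 1."""
    if not isinstance(node, BinOp):
        return node
    left, right = simplify(node.left), simplify(node.right)
    if isinstance(left, Const) and left.value == node.identity:
        return right
    if isinstance(right, Const) and right.value == node.identity:
        return left
    return type(node)(left, right)

def count_nodes(node):
    if isinstance(node, BinOp):
        return 1 + count_nodes(node.left) + count_nodes(node.right)
    return 1

x = Var("x")
expr = Add(Mul(x, Const(1)), Const(0))    # describes (x * 1) + 0, computes nothing yet
optimised = simplify(expr)                # the tree is rewritten before evaluation
print(count_nodes(expr), count_nodes(optimised), optimised.evaluate({"x": 7}))
```

Because the computation is data rather than executed code, it can be simplified, differentiated, or compiled for another device before any numbers flow through it.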

0-TheanoBasics

Use the 'play' button to walk through the workbook

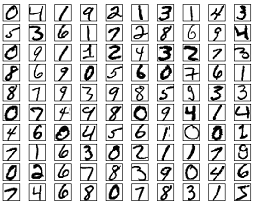

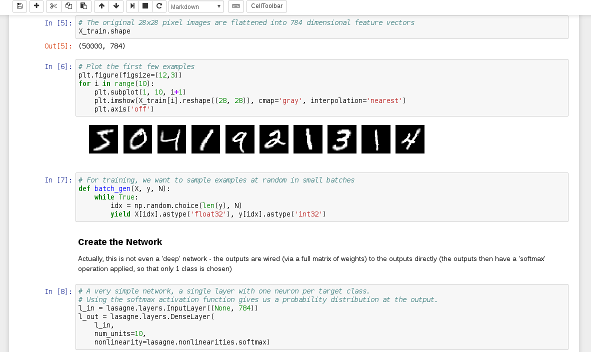

"Hello World" → MNIST

- Nice dataset from the 1990s

- Training set of 50,000 28x28 images

- Now end-of-life as a useful benchmark

Simple Network

... around 2-3% error rate on the test set

1-MNIST-MLP

We are using Lasagne as an additional layer on top of Theano

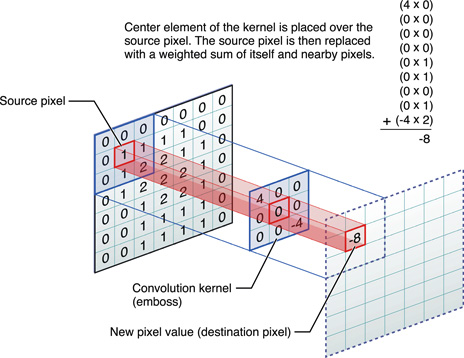

Convolutional Neural Networks

- Pixels in an image are 'organised' :

  - Up/down left/right

  - Translational invariance

- Can apply a 'convolutional filter'

- Use same parameters over whole image
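A sketch of sliding one small filter over a whole image, in plain Python. The image and the vertical-edge kernel are made up for illustration; as in most deep learning libraries, the kernel is not flipped, so strictly this is a cross-correlation:

```python
def convolve2d(image, kernel):
    """'Valid' 2-D filter: the same kernel weights are applied at every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

image = [[0, 0, 1, 1],        # dark on the left, bright on the right
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
vertical_edge = [[-1, 1],     # responds where brightness increases left-to-right
                 [-1, 1]]
response = convolve2d(image, vertical_edge)
print(response)               # [[0, 2, 0], [0, 2, 0]]
```

The filter has only four parameters however large the image is, and it fires wherever the edge appears.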

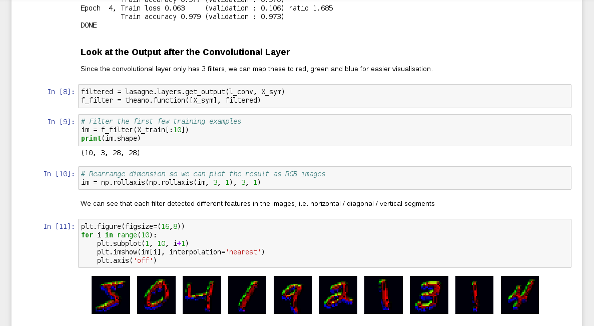

CNN Filter

2-MNIST-CNN

Three filter layers - nice visual interpretation

New Problems

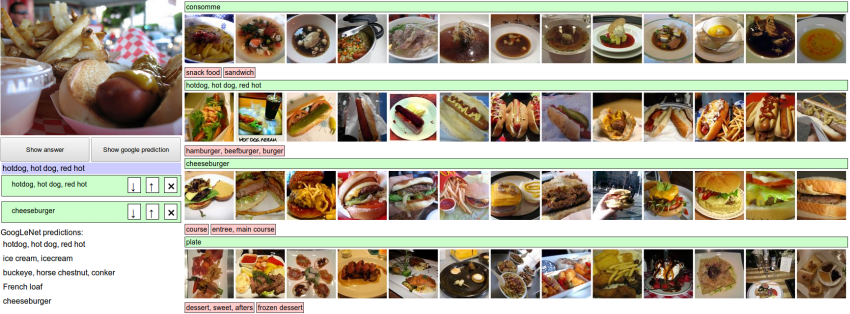

- ImageNet Competition

- over 15 million labeled high-resolution images...

- ... in over 22,000 categories

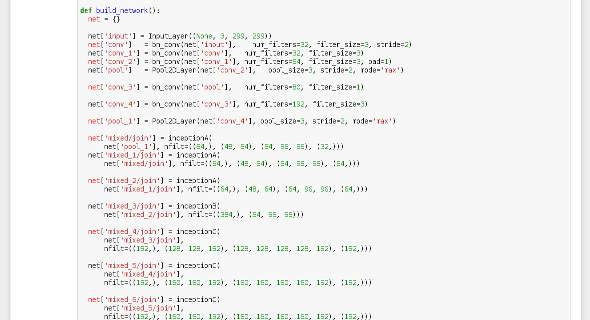

More Complex Networks

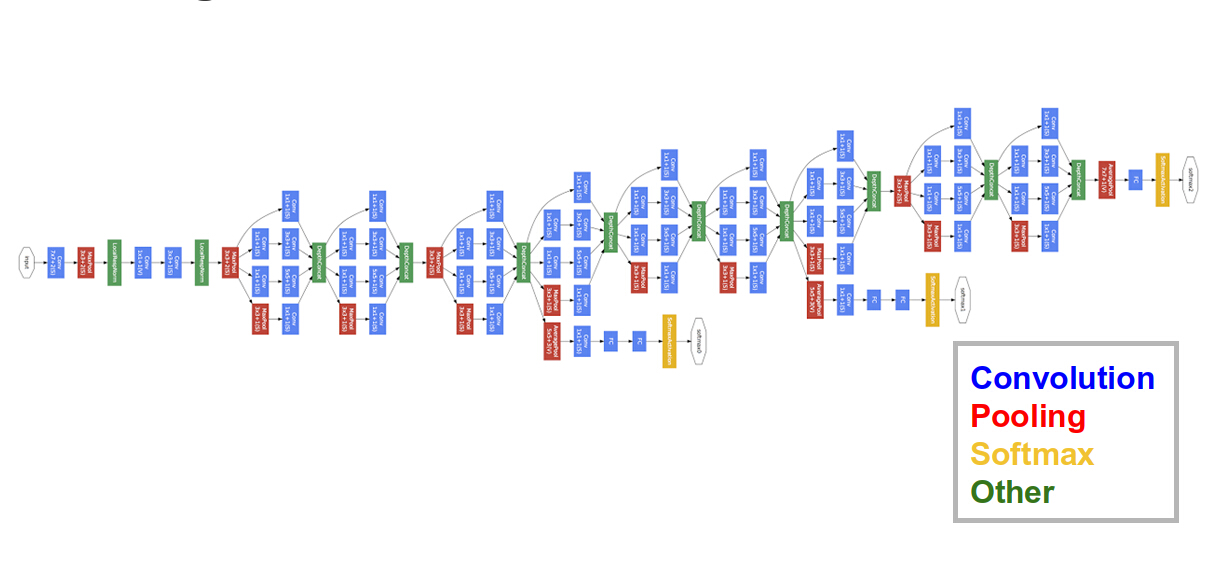

GoogLeNet (2014)

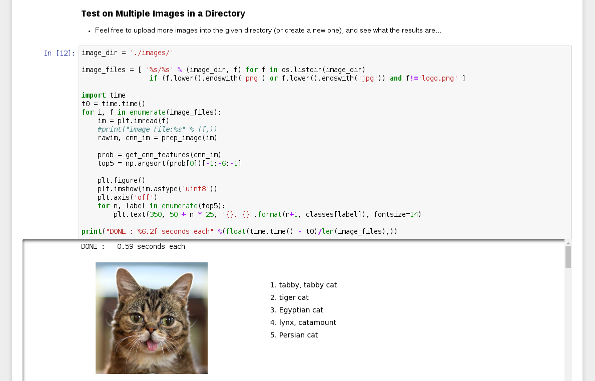

3-ImageNet-googlenet

Play with a ~2014 pre-trained network

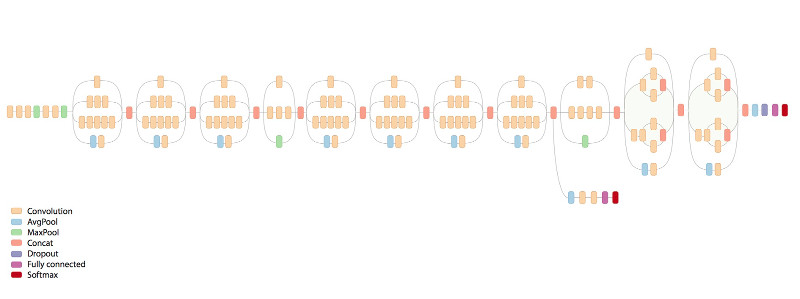

... Even More Complex

Google Inception-v3 (2015)

4-ImageNet-inception-v3

Play with a ~2015 pre-trained network

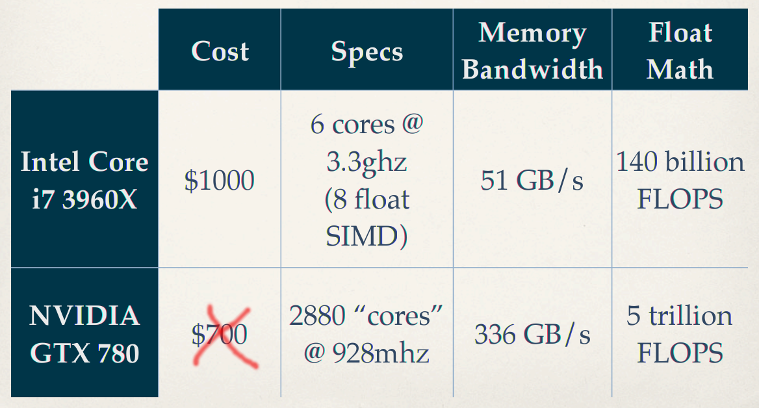

Need for Speed

... need for GPU programmers

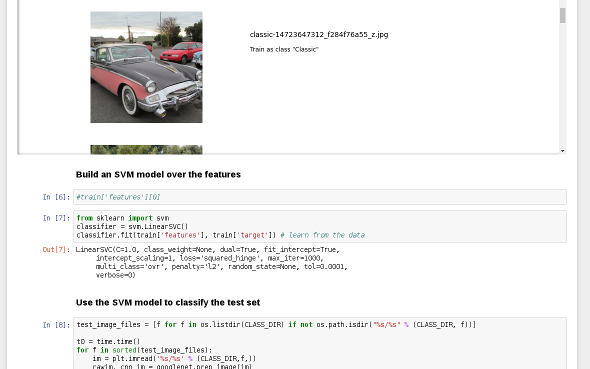

Using Pre-Built Networks

- Features learned for ImageNet are 'useful'

- Apply same network to 'featurized' new images

- Learn mapping from features to new classes

- Only have to train a single layer!
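A toy version of the idea, assuming a hypothetical `pretrained_features` function that stands in for the frozen ImageNet network (here it only measures brightness and contrast). Only the small logistic layer on top is trained:

```python
import math

def pretrained_features(image):
    """Hypothetical stand-in for a frozen pre-trained network: never updated."""
    brightness = sum(image) / len(image)
    contrast = max(image) - min(image)
    return [brightness, contrast]

def predict(weights, bias, features):
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train_top_layer(features, labels, epochs=2000, learning_rate=0.5):
    """Train a single logistic layer that maps features to the new classes."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for f, label in zip(features, labels):
            error = predict(weights, bias, f) - label
            weights = [w - learning_rate * error * fi for w, fi in zip(weights, f)]
            bias -= learning_rate * error
    return weights, bias

# Made-up "images" (flat pixel lists) for a new task: 0 = flat, 1 = high-contrast.
images = [[0.5, 0.5, 0.5, 0.5], [0.2, 0.2, 0.3, 0.2],
          [0.0, 1.0, 0.0, 1.0], [0.1, 0.9, 0.2, 0.8]]
labels = [0, 0, 1, 1]

features = [pretrained_features(img) for img in images]   # 'featurize' once
weights, bias = train_top_layer(features, labels)
predictions = [round(predict(weights, bias, f)) for f in features]
print(predictions)
```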

5-Commerce

Re-purpose a pretrained network

Abusing Pre-Built Networks

- Visual features can also be used generatively

- Alter images to maximise network response

- ...

- Art?
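A much-reduced sketch of "alter the image to maximise the response", assuming the network is a single linear unit with made-up weights. The weights stay fixed; gradient ascent is applied to the pixels instead:

```python
def response(image, weights):
    """Response of one fixed linear unit to the image."""
    return sum(w * p for w, p in zip(weights, image))

def maximise_response(image, weights, step_size=0.1, steps=20):
    image = list(image)
    for _ in range(steps):
        # For a linear unit, d(response)/d(pixel_i) is just weights[i].
        # Step uphill, then clip so pixels remain valid intensities in [0, 1].
        image = [min(1.0, max(0.0, p + step_size * w))
                 for p, w in zip(image, weights)]
    return image

weights = [1.0, -1.0, 0.5, 0.0]         # illustrative, not from a real network
start = [0.5, 0.5, 0.5, 0.5]            # a flat grey "image"
dreamed = maximise_response(start, weights)
print(response(start, weights), response(dreamed, weights))
```

With a deep network the gradient with respect to the pixels comes from backpropagation, but the loop has the same shape.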

"Deep Dreams"

Careful! : Some images cannot be un-seen...

6-Visual-Art

Style-Transfer an artist onto your photos

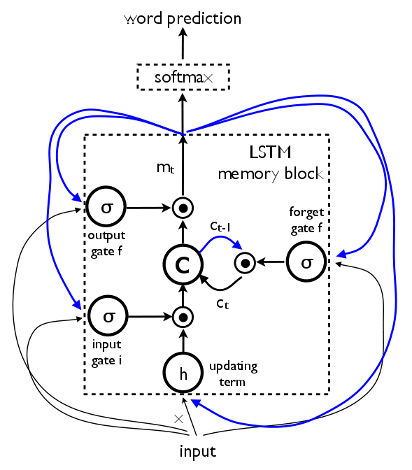

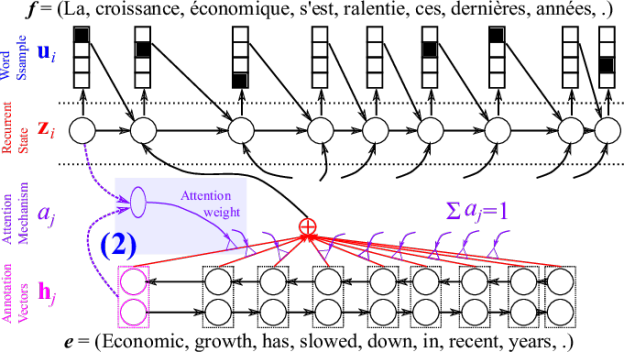

Language Processing?

Variable-length input doesn't "fit"

- Apply a network iteratively over input

- Internal state carried forward step-wise

- Everything is still differentiable
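A minimal sketch of applying one small network step-wise over a sequence, assuming a scalar state, a tanh unit, and made-up weights. The same function handles inputs of any length:

```python
import math

def rnn_step(state, x, w_state=0.5, w_input=1.0):
    """New state depends on the previous state and the current input."""
    return math.tanh(w_state * state + w_input * x)

def run_rnn(sequence, initial_state=0.0):
    state = initial_state
    for x in sequence:                 # the same weights are reused at every step
        state = rnn_step(state, x)     # internal state carried forward
    return state

short = run_rnn([1.0, -1.0])
longer = run_rnn([1.0, -1.0, 0.5, 0.5, -0.2])
reversed_short = run_rnn([-1.0, 1.0])  # same values, different order
print(short, longer, reversed_short)
```

Every operation is differentiable, so the error can still be propagated back through all the steps.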

Recurrent Neural Networks

A Long Short-Term Memory (LSTM) Unit

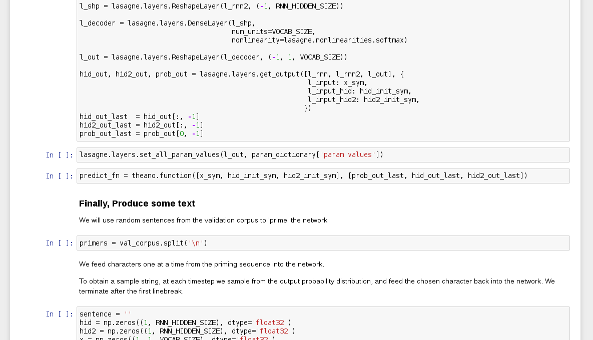
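One step of an LSTM unit, sketched with scalar values in the commonly used formulation (forget, input and output gates plus a candidate value). This may differ in detail from the diagram, and the parameter layout is an assumption for illustration:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """params maps a gate name to (input weight, recurrent weight, bias)."""
    def gate(name, squash):
        w_x, w_h, b = params[name]
        return squash(w_x * x + w_h * h_prev + b)

    forget = gate("forget", sigmoid)        # how much old memory to keep
    write = gate("input", sigmoid)          # how much new content to write
    read = gate("output", sigmoid)          # how much memory to expose
    candidate = gate("candidate", math.tanh)

    c = forget * c_prev + write * candidate  # updated cell memory
    h = read * math.tanh(c)                  # visible output / hidden state
    return h, c

# Made-up parameters: forget gate wide open, input gate shut,
# so the cell simply remembers its previous contents.
remember = {"forget": (0.0, 0.0, 20.0), "input": (0.0, 0.0, -20.0),
            "output": (0.0, 0.0, 0.0), "candidate": (1.0, 1.0, 0.0)}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=0.7, params=remember)
print(h, c)
```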

8-Natural-Language

Still a work-in-progress (training takes too long)

Poetry : Epoch 1

JDa&g#sdWI&MKW^gE)I}<UNK>f;6g)^5*|dXdBw6m\2&XcXVy\ph8G<gAM&>e4+mv5}OX8G*Yw9&n3XW{h@&T\Fk%BPMMI

OV&*C_] ._f$v4I~$@Z^&[2

mOVe`4W)"L-KClkO]wu]\$LCNadyo$h;>$jV7X$XK#4,T(y"sa6W0LWf\'_{\#XD]p%ck[;O`!Px\#E>/Or(.YZ|a]2}q|@a9.g3nV,U^qM $+:nlk0sd;V-Z&;7Y@Z "l-7P^C

"xBF~~{n} n\ Pcbc9f?=y)FIc1h5kvjIi

C<UNK>s DWJr_$ZQtu"BTYm'|SMj-]Z<Vqj*.lh%IYW|q.GK:eNI"r>833?+RuUsOj_)a{\T}gH.zZR^(daC3mg5P0iFi]bqGo4?T|\>0_H&g889voTh=~)^DDRYND46z1J]x;<U>>%eNIRckL)N8n<UNK>n3i)+Ln8

?)9.#s7X]}$*sxZ"3tf ")

@'HW.;I5)C.*%}<jcNLN+Z__RWoryOb#

/`r

Poetry : Epoch 100

Som the riscele his nreing the timest stordor hep pIs dach suedests her, so for farmauteds?

By arnouy ig wore

Thou hoasul dove he five grom ays he bare as bleen,

The seend,

And, an neeer,

Whis with the rauk with, for be collenss ore his son froven faredure:

Then andy bround'd the CowE nom shmlls everom thoy men ellone per in the lave ofpen the way ghiind, thour eyes in is ple gull heart sind, I I wild,

Frreasuce anspeve, wrom fant beiver, not the afan

And in thou' histwish a it wheme-tis lating ble the liveculd;

Noorroint he fhallought, othelts.

Poetry : Epoch 1000

AWhis grook my glass' to his sweet,

Bub my fears liken?

And of live every in seedher;

A Lood stall,

But tare tought than thencer sud earth,

Use'st bee sechion,

For all exprit' are a daud in heaven doth her infook perust the fork the tent.

For maud,

The pittent gover

This and rimp,

Who new

Thoir oldes and did hards, cound.

Plays : Epoch 338

Larger network...

DEDENIUS Why shoulmeying to to wife,

And thou say: and wall you teading for

that struke you down as sweet one.

With be more bornow, bly unjout on the account:

I duked you did four conlian unfortuned drausing-

to sicgia stranss, or not sleepplins his arms

Gentlemen? as write lord; gave sold.

AENEMUUNS Met that will knop unhian, where ever have

of the keep his jangst?icks he I love hide,

Jach heard which offen, sir!'

[Exit PATIIUS, MARGARUS arr [Enter CLOTHUR]

Fancier Tricks

Differentiable → Training : Even crazy stuff works!

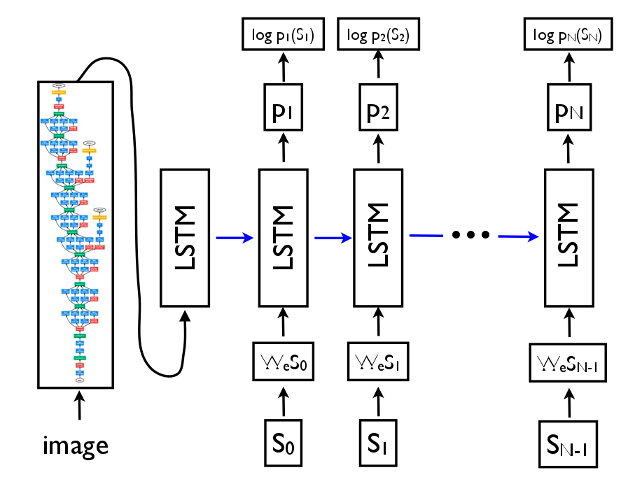

Image Labelling

We have the components now

Image Labels

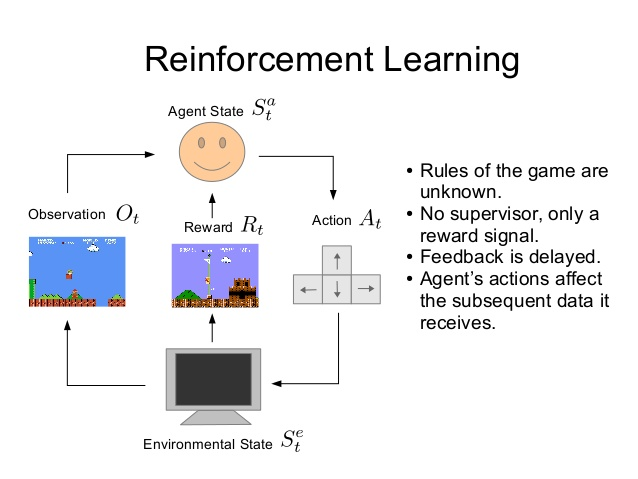

Reinforcement Learning

- Learning to choose actions ...

- ... which cause environment to change

Google DeepMind's AlphaGo

Useful beyond games...

- ... advertising

Agent Learning Set-Up

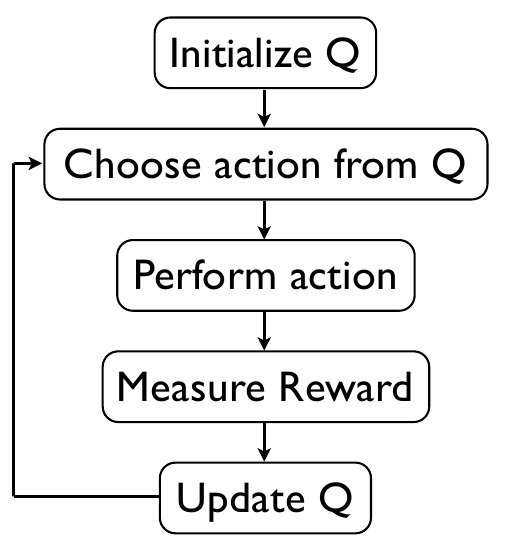

Q-Learning 1

- Estimate value of entire future from current state

- ... to estimate value of next state, for all possible actions

- Determine the 'best action' from estimates

Q-Learning 2

- ... do the best action

- Observe rewards, and new state

- * Update Q(now) to be closer to R+Q(next) *
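The two Q-Learning slides, sketched as a table-based learner on a made-up 5-cell corridor with a reward at the right-hand end. The discount factor, learning rate and exploration rate are assumptions (the slide's "R+Q(next)" corresponds to a discount of 1):

```python
import random

N_STATES = 5            # cells 0..4, reward for reaching cell 4
ACTIONS = (-1, +1)      # step left or step right

def step(state, action):
    next_state = min(N_STATES - 1, max(0, state + action))
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:               # occasionally explore
                action = rng.choice(ACTIONS)
            else:                                    # otherwise do the best action
                action = max(ACTIONS, key=lambda a: (q[(state, a)], rng.random()))
            next_state, reward, done = step(state, action)   # observe reward, new state
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            target = reward + gamma * best_next
            # Update Q(now) to be closer to R + Q(next)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```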

Q-Learning Diagram

Q is a measure of what we think about the future

Deep Q-Learning

- Set Q() to be the output of a deep neural network

- ... where the input is the state

- Train network on input/output pairs from observed steps

- ... over *many* games
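A sketch of how observed steps could become input/output training pairs, assuming the network maps a state to one Q estimate per action. `stub_network` is a hypothetical stand-in for the real deep network, and the discount factor is an assumption:

```python
def build_training_pairs(transitions, q_network, gamma=0.9):
    """Turn (state, action, reward, next_state, done) steps into (input, target) pairs."""
    pairs = []
    for state, action, reward, next_state, done in transitions:
        targets = list(q_network(state))             # current estimates, one per action
        future = 0.0 if done else max(q_network(next_state))
        targets[action] = reward + gamma * future    # only the action taken is corrected
        pairs.append((state, targets))
    return pairs

def stub_network(state):
    """Hypothetical stand-in: a real network would compute this from the state."""
    return [0.0, 1.0]

# Two made-up observed steps: (state, action index, reward, next state, game over?)
observed = [(0, 1, 0.0, 1, False),
            (1, 0, 1.0, 2, True)]
pairs = build_training_pairs(observed, stub_network)
print(pairs)    # [(0, [0.0, 0.9]), (1, [1.0, 1.0])]
```

The pairs are then used for ordinary supervised training, and the process repeats as more games are played.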

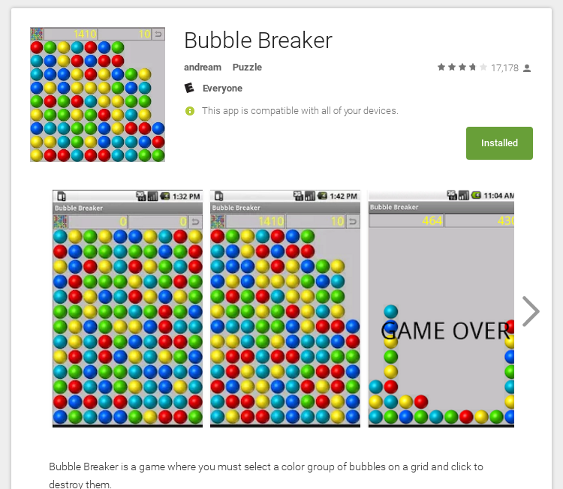

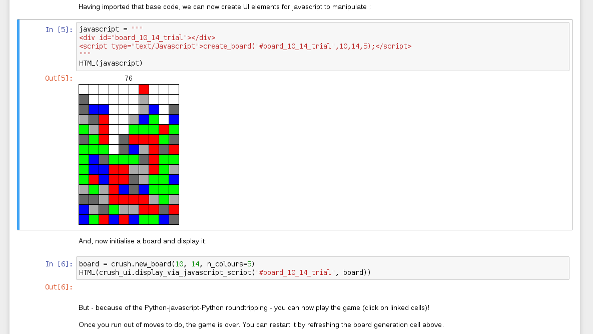

Today's Strategy Game

Classic game : But no bells-and-whistles

7-Reinforcement-Learning

Deep Reinforcement Learning for Bubble Breaker

AlphaGo Extras

- Monte-Carlo Tree Search

- Policy Network to hone search space

- Self-play

- ... and running on 1202 CPUs and 176 GPUs

Wrap-up

- Deep Learning may deserve some hype...

- Getting the tools in one place is helpful

- Having a GPU is VERY helpful

* Please add a star... *

- QUESTIONS -

Martin.Andrews @ RedCatLabs.com

My blog : http://mdda.net/

GitHub : mdda